Locating the Coordinator

DNS?

Happily all properly configured hosts should know how to query DNS. It also supports delegation which allows the RAMCloud DNS entries to be managed separately. It also can help deal with Coordinator failure by providing a list of possible future Coordinators.

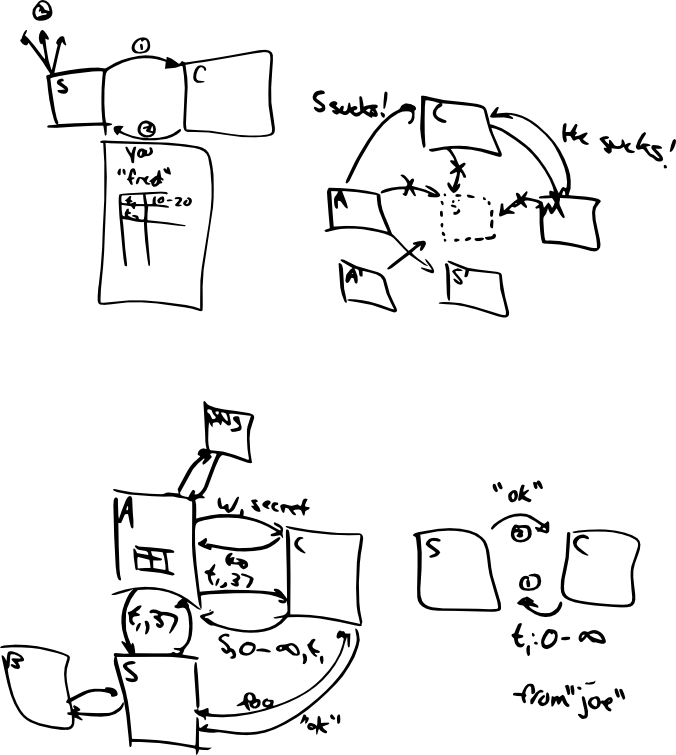

Authentication

Users

See Security for the current proposal. Briefly, clients/users will provide a secret to the Coordinator which the Coordinator will verify and issue a token. Applications must provide this token on RAMCloud requests which the Master will confirm with the Coordinator (and cache).

The Coordinator stores (persistently) a shared secret with the users. It also houses the tokens (ephemerally), we may want some persistence on this to keep from flooding a new Coordinator with authentication requests after a Coordinator recovery.

ACLs/Workspaces

For the moment access to a Workspace is all or nothing (or perhaps even conflated with the user) hence the Coordinator stores (persistently) a list of workspaces the user owns (or in the conflated case, the secret associated with each Workspace).

Servers

Master -> Coordinator

This step helps us with naming later as well. Since Masters must authenticate to the Coordinator and it assigns the Master roles it can then slot the Master into its naming tables (ephemerally).

Backup -> Coordinator

Master -> Backup

Problem: A Master may disclose data to a non-RAMCloud machine if a machine a machine address is reallocated for use as a non-RAMCloud machine. Possible solutions: ignore it or encrypt data.

Naming

Host Addressing

A lookup table of logical hosts to (ephemerally) RPC addresses.

Aside: I don't really believe the Master -> RPC Addr mapping will need to be replicated, nor the Backup -> RPC Addr one. This is problematic - it probably makes the above state (persistent).

Tables/Tablets and Indexes

(workspace, table, start id, end id, logical host address) relation

(persistent)

Placement

(network host address, rack)

(persistent)

Location/Discovery

Perhaps we've got a story for this with DNS?

Reconfiguration

Recovery

Choosing Replacements

For crashed M choose a new network host address (under no constraints?). Issue a shootdown of old machine address?

Crash Confirmation

When do we notice crashes? Who heartbeats?

If Coordinator notices failure or has one reported to it heartbeat M, if failed contact other hosts (backups or masters) one inside the same rack and one outside. If failure is agreed then we must broadcast new mapping. How can we guarantee no client will see old master? We could disallow backups, then if backups are on on the old master it would kill itself once its backup failed. If it is a non-durable table this would be problematic except that there is no way to restore it anyway.

Broadcast Notifications

Perhaps delegate some of the work.

Partition Detection

Statistics

Logging

Metrics

Configuration Information

Rack Placement

Machine Parameters

Summary of Coordinator State

- Workspace list

- Possibly users

- User or workspace secrets

- (soft) Issued security tokens

- (soft) Logical host naming

Assume 16K machines.

Authentication data:

(application id, secret)

Size: Negligible

Churn: Negligible

Logical host mapping:

(logical host address, network host address/service)

Size: 16 bytes * 16K machines = 256K

Churn: Low (2 entries per 15 minutes)

Machine configuration and placement information:

(network host address, rack, machine configuration)

About the same properties as above, might be able to join the two depending on whether we wrap backup together with masters or whether we want independent services.

Tablet Mapping:

(logical host address, workspace, table/index, start id, end id)

Size: 640K/tablet/server

Churn: Dependent on reconfiguration. Could be more or less frequent than crashes.

Unallocated machines:

(network host address)

Size: Negligible

Churn: Similar to logical host mappings

Fast Path Operations

...

Important Problems from Design Meeting

- Exactly how does a master select backups?

- How do we make sure a master is dead (to prevent divergent views of tables)?

- How does a new master know when it has received all segments (e.g. knows it has the head of its log)?

- How does host-to-host authentication work

- In particular, how does the application authenticate the Coordinator?

Further Things to Walkthrough in Design Meetings

- How does one install a new machine?

- How does one update RAMCloud software?

| Code Block |

|---|

== Bootstrapping C Discovery ==

1) DNS

- Slow

+ Preconfigured on hosts

+ Delegation

+ Provides a way to deal with Coordinator failure/unavailability

== Summary of Actions ==

-- Auth -------------------------------

App authentication 6, 7

App authorization 7

Machine authentication 8, 9

Machine authorization 9

-- Addressing -------------------------

Find M for Object Id 1

Lookup LMA to NMA 4

-- Backup/Recovery --------------------

List backup candidates 3

Confirm crashes 4

Start M recovery 1, 4, 5

Notify masters of B crash 4

Create a fresh B instance 5

-- Performance ------------------------

Load Balancing 1, 10

Stats, Metrics, Accting 10

-- Debugging/Auditing ----------------

Logging 11

== Summary of State ==

Name Format Size Churn Refs

-------------------------------------------------------------------------------------------------------------------------------------------------------------------------

1 tabletMap (workspace, table, start, end, LMA) ~40b/tablet/master Only on load balance Per workspace, table, id miss

3 hostPlacementMap (NHA, rack) ~16b/backup 2 entries/15m/10K machines After ~n/k segs written/master

4 logicalHostMap (LMA, NHA) ~16b/host 2 entries/15m/10K machines Per host addressing miss (on Ms, As, or Bs)

5 hostFreeList (NHA) ~8b/spare host ~0 Every 15m

-------------------------------------------------------------------------------------------------------------------------------------------------------------------------

6 appAuthentication (appId, secret, workspace) ~24b/principal ~0 Once per A session

7* appAuthorization (token, workspace) ~16b/active session Once per new A access to each M

8 machineAuthentication Perhaps "certifcate"? Every 15m (except during bootstrap)

9* machineAuthorization (token, role) ~16b/active host 2 entries/15m/10K machines ~Twice per first time pairwise host interaction

-------------------------------------------------------------------------------------------------------------------------------------------------------------------------

10 metrics ? (LHA, [int]) # dropped reqs/s etc. ? ?

-------------------------------------------------------------------------------------------------------------------------------------------------------------------------

11 logs ? Large High ~0

Data structures:

1 Find M for Object Id

(workspace, table) -> [(start, LMA)] Hashtable with ordered lists as elements

Start M recovery

LMA -> (workspace, table, start, end) Hashtable

3 List Backup Candidates

Return n and rotate them to the back of the list List

4 Lookup LMA to NMA

Confirm crashes

LMA -> NHA Hashtable

Start M Recovery

Remove entry in Hashtable, insert one

Notify masters of B crash

Needs list of all masters NMA, just walk buckets

5 Start M recovery Hashset (insert/remove)

Remove M from hostFreeList**

Create a fresh B Accidentally conflated these free lists

Remove B from hostFreeList**

== Persistence/Recovery Ideas for C ==

1) Replication

2) Disks, WAL/LFS

3) RAMCloud Tables

- Serious circular dependencies and bootstrapping issues

? Recovery latency

+ It works, it's durable

+ Code reuse

+ Super-cool

4) Decentralized

5) Punt/High-availability/special hardware/VM replication

=== Replication ===

- Performance & Complexity (2PC?)

=== RAMCloud-based C recovery ===

Choices:

1) C uses Bs

2) C uses (local) M

NOTE: this approach presupposes that key-value store is good bet for

this data; might not always be true (e.g. indexes)

PROBLEM: Authenticate hosts during recovery without state?

Can use small key RSA.

As below, except where PROBLEM is We know that our CM crashed when the

previous C did. Now - we need to just bootstrap the backup system

which already relies on broadcast.

start C

create empty tempLogicalHostMap

create tempHostFreeList and tempHostPlacementList from NHA range passed on the command-line

create empty tempTabletMap

broadcast to tempHostFreeList

// BOOTSTRAP and/or RECOVER

backupsOnline = 0

while backupsOnline < k {

pop tempHostFreeList

check tempHostPlacementList

if new rack {

start B

add B to tempLogicalHostMap

backupsOnline++

} else {

pushBack B tempHostFreeList

}

}

start M on localhost

add (0, self network address) to tempLogicalHostMap

add (0, logicalHostMap, 0, inf, M) to tempTabletMap

notify M of change and tell it to recover

insert(0, logicalHostMap, (0, logicalHostMap, 0, inf M))

map insert(0, logicalHostMap) tempLogicalHostMap

map insert(0, freeList) tempHostFreeList

3) C is an A

PROBLEMS: Can't count on C for starting recovery, else if master with data fails we're done

Needs to deal with NHAs sometimes, which is different than a normal app

start C

create empty tempLogicalHostMap

create tempHostFreeList and tempHostPlacementList from NHA range passed on the command-line

create empty tempTabletMap

broadcast to tempHostFreeList

receive a response from one host {

// RECOVER

// PROBLEM - what if master went down in the meantime, neither can recover

} else {

// BOOTSTRAP

backupsOnline = 0

while backupsOnline < k {

pop tempHostFreeList

check tempHostPlacementList

if new rack {

start B

add B to tempLogicalHostMap

backupsOnline++

} else {

pushBack B tempHostFreeList

}

}

pop tempHostFreeList; start M; add M to tempLogicalHostMap

add (0, logicalHostMap, 0, inf, M) to tempTabletMap; notify M of change

insert(0, logicalHostMap, (0, logicalHostMap, 0, inf M))

map insert(0, logicalHostMap) tempLogicalHostMap

map insert(0, freeList) tempHostFreeList

}

|