...

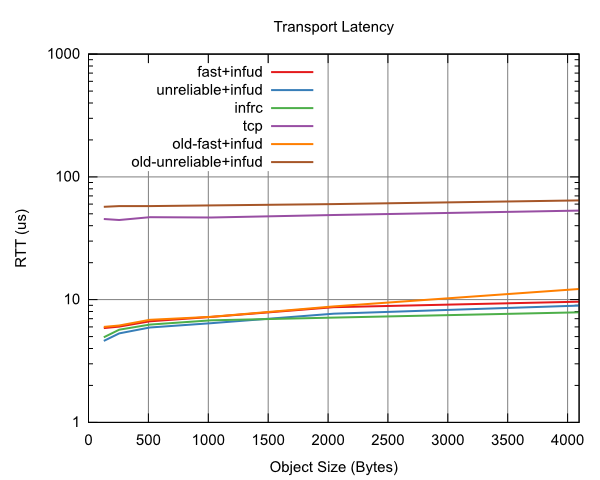

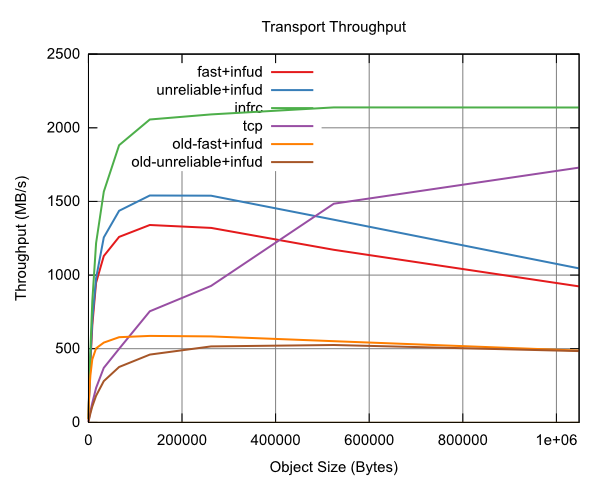

We've written a simple benchmark that runs a single RAMCloud client against a
single RAMCloud server and repeatedly reads a single object of a given size.
With small objects, this lets us measure the latency of our transport
protocol; with large objects, it lets us measure throughput. On top of this
benchmark, we created scripts that plot latency and throughput statistics for
various transports across a range of object sizes. These scripts were used to
generate the graphs near the bottom of this page and have been helpful in
tracking our progress.
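The measurement loop described above can be sketched roughly as follows. This is a minimal illustration, not the actual benchmark: `run_benchmark`, `read_object`, and `fake_read` are hypothetical names, and `fake_read` stands in for a real RAMCloud client read so the sketch can run on its own.

```python
import time

def run_benchmark(read_object, object_size, iterations=1000):
    """Read one object of `object_size` bytes repeatedly and report stats.

    `read_object` is a hypothetical callable standing in for a client read;
    it takes a size in bytes and returns the object's contents.
    """
    total_bytes = 0
    start = time.perf_counter()
    for _ in range(iterations):
        data = read_object(object_size)
        total_bytes += len(data)
    elapsed = time.perf_counter() - start
    return {
        # Small objects: the average time per read approximates latency.
        "avg_latency_us": elapsed / iterations * 1e6,
        # Large objects: bytes moved per second approximates throughput.
        "throughput_mb_s": total_bytes / elapsed / 1e6,
    }

def fake_read(size):
    """Placeholder for a real read RPC; returns `size` zero bytes."""
    return bytes(size)

if __name__ == "__main__":
    for size in (100, 1024, 1024 * 1024):
        print(size, run_benchmark(fake_read, size, iterations=100))
```

Sweeping the object size, as in the loop at the bottom, yields one latency and one throughput point per size, which is the kind of data the plotting scripts would consume.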

Metrics

When working on RAMCloud's recovery system, we created a special-purpose system
for gathering performance counters and timer data from the different servers.
The metrics and their presentation were specific to recovery, but the basic idea
is applicable to this project as well.
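The basic idea of gathering counters and timer data per server and then combining them can be sketched as below. This is an assumption-laden illustration: the `Metrics` class, the `merge` helper, and the metric names are hypothetical and are not taken from RAMCloud's recovery metrics system.

```python
import time
from collections import Counter
from contextlib import contextmanager

class Metrics:
    """Hypothetical per-server metrics: event counters and accumulated timers."""

    def __init__(self):
        self.counters = Counter()  # event name -> number of occurrences
        self.nanos = Counter()     # timer name -> total elapsed nanoseconds

    def count(self, name, amount=1):
        self.counters[name] += amount

    @contextmanager
    def timed(self, name):
        """Accumulate the wall-clock time spent inside the `with` block."""
        start = time.perf_counter_ns()
        try:
            yield
        finally:
            self.nanos[name] += time.perf_counter_ns() - start

def merge(per_server):
    """Sum the metrics gathered from several servers into one report."""
    total = Metrics()
    for m in per_server:
        total.counters.update(m.counters)  # Counter.update adds counts
        total.nanos.update(m.nanos)
    return total

if __name__ == "__main__":
    client, server = Metrics(), Metrics()
    client.count("packets_sent", 3)
    server.count("packets_sent", 2)
    with server.timed("handle_request"):
        sum(range(1000))  # stand-in for real work
    report = merge([client, server])
    print(dict(report.counters), dict(report.nanos))
```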

...

4) InfUdDriver previously had only one transmit buffer, which serialized work
between the server thread and the NIC. In particular, the server thread would
stall while the NIC was DMAing this buffer. We changed this to a fixed number
of buffers, which improved throughput by a factor of 2 or 3.
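A toy timing model can illustrate why a single transmit buffer serializes the server thread and the NIC. This is only a sketch under stated assumptions: `total_send_time`, its parameters, and the round-robin buffer reuse are hypothetical and do not reflect InfUdDriver's actual code, and the times are arbitrary units rather than measurements.

```python
def total_send_time(num_packets, num_buffers, prep_time, dma_time):
    """Toy model: time to push `num_packets` through a pool of transmit buffers.

    Assumptions (hypothetical, for illustration only):
    - the server thread needs `prep_time` to fill a buffer,
    - the NIC needs `dma_time` to DMA a buffer and handles one at a time,
    - buffers are reused round-robin and are busy until their DMA completes.
    """
    buffer_free_at = [0.0] * num_buffers  # when each buffer can be reused
    cpu_free_at = 0.0                     # when the server thread is idle
    nic_free_at = 0.0                     # when the NIC is idle
    for i in range(num_packets):
        buf = i % num_buffers
        # The thread stalls until the buffer it wants has been fully DMAed.
        start = max(cpu_free_at, buffer_free_at[buf])
        cpu_free_at = start + prep_time
        # The NIC starts once the buffer is filled and the NIC is idle.
        dma_start = max(nic_free_at, cpu_free_at)
        nic_free_at = dma_start + dma_time
        buffer_free_at[buf] = nic_free_at
    return nic_free_at

if __name__ == "__main__":
    for buffers in (1, 2, 4):
        print(buffers, total_send_time(100, buffers, prep_time=1.0, dma_time=1.0))
```

In this model, with equal preparation and DMA times, moving from one buffer to several roughly halves the total time, because the thread can fill the next buffer while the NIC drains the previous one. The real speedup depends on the actual ratio of the two costs.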

Results

In our initial project plan, we set a goal of bringing RTT to within 500 ns of
unreliable transmissions. The graph below shows that, after the changes
listed above, we should be able to meet that goal.

Before performance fix (1) (shown by old-unreliable+infud), latency for small
messages was worse than TCP through the Linux kernel. After the fixes, however,
unreliable+infud is slightly faster for small messages than Infiniband's native
reliable transport (infrc), which is implemented in hardware. Because fast+infud
is so close to infrc in performance and is bounded by unreliable+infud, we believe
we may actually be able to beat the hardware-based transport protocol in software.
This means we'll continue as planned, using our metrics system to guide focused
optimizations of the code for small messages.

When it comes to throughput, fast+infud has more interesting problems. Though our
measurements show we've already improved fast+infud's throughput threefold,
it is still slower than hardware reliable Infiniband.

This graph shows that fast+infud has an algorithmic issue that causes its
throughput to decrease as message size increases. The decrease is severe
enough that the highly optimized Linux TCP stack is able to overcome its
high overheads and beat fast+infud. More investigation will flush out the
offending code; the solution will depend on the precise problem.

Once these issues are worked out, we'll need to start considering how
FastTransport behaves in a more dynamic situation. Things we need to
consider or work on:

- How does FastTransport behave with many machines?
  - In particular, several clients with one server.
- How should we deal with congestion?
  - What about incast on concurrent responses from many machines?
- How should we set the window size?
- How should we set retransmit timeouts?