The intention of this page is to present experiments with non-CRUD data operations.

An aggregation operation adds up the values of a number of objects. When executing such an operation in RAMCloud, three questions, among others, are of interest:

The experiments below focus on the question of where to execute the operation. Three different scenarios are implemented:

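```cpp
// Aggregation via one hash-table lookup per key.
// Assumes every key in [0, range) exists in table tableId.
for (uint64_t i = 0; i < range; ++i) {
    LogEntryHandle handle = objectMap.lookup(tableId, i);
    const Object* obj = handle->userData<Object>();
    // The first bytes of the object's data hold the value to aggregate
    const int* p = reinterpret_cast<const int*>(obj->data);
    sum += static_cast<uint64_t>(*p);
}
```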
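The lookup-based loop above can be illustrated outside RAMCloud with a minimal, self-contained sketch. It assumes a single table modelled as a `std::unordered_map` from key to payload, where each payload starts with a native-endian `int` as in the original; `ObjectMap`, `makePayload` and `aggregateByLookup` are hypothetical names, not RAMCloud APIs. The value is read with `std::memcpy` rather than a pointer cast, which sidesteps alignment and strict-aliasing issues.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <unordered_map>
#include <vector>

// Hypothetical stand-in for the master's object map: key -> object payload.
// Each payload is assumed to start with a native-endian int.
using Payload = std::vector<char>;
using ObjectMap = std::unordered_map<std::uint64_t, Payload>;

// Builds a payload whose first sizeof(int) bytes hold `value`.
inline Payload makePayload(int value) {
    Payload p(sizeof(int));
    std::memcpy(p.data(), &value, sizeof(int));
    return p;
}

// Sums the leading int of every object with key in [0, range),
// performing one hash-table lookup per key.
inline std::uint64_t aggregateByLookup(const ObjectMap& objects, std::uint64_t range) {
    std::uint64_t sum = 0;
    for (std::uint64_t key = 0; key < range; ++key) {
        auto it = objects.find(key);
        if (it == objects.end() || it->second.size() < sizeof(int))
            continue;  // missing or too-short objects are skipped
        int value;
        std::memcpy(&value, it->second.data(), sizeof(int));
        sum += static_cast<std::uint64_t>(value);
    }
    return sum;
}
```

For example, after storing the values 0 to 9 under keys 0 to 9, `aggregateByLookup(objects, 10)` returns 45. Unlike the original loop, which assumes every key in the range exists, this sketch skips missing keys.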


```cpp
/**
 * Aggregation Callback
 */
void
aggregateCallback(LogEntryHandle handle, uint8_t type,
                  void *cookie)
{
    const Object* obj = handle->userData<Object>();
    // The cookie carries the MasterServer that accumulates the running sum
    MasterServer *server = reinterpret_cast<MasterServer*>(cookie);
    const int* p = reinterpret_cast<const int*>(obj->data);
    server->sum += static_cast<uint64_t>(*p);
}
```
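The callback variant inverts control: the hash table drives the traversal and hands each object, together with an opaque `cookie`, to the callback, which is how `aggregateCallback` reaches the `MasterServer` holding the running sum. The sketch below reproduces that pattern in standard C++ only; `CallbackTable`, `SumState` and `sumCallback` are hypothetical stand-ins, and the table is simplified to a flat vector instead of a real hash table.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical callback type mirroring aggregateCallback: each stored
// object's payload is handed over together with an opaque cookie.
using ObjectCallback = void (*)(const std::vector<char>& payload, void* cookie);

// Hypothetical table that only exposes a full scan via callback
// (simplified to a flat vector instead of a real hash table).
struct CallbackTable {
    std::vector<std::vector<char>> objects;

    void forEach(ObjectCallback callback, void* cookie) const {
        for (const auto& payload : objects)
            callback(payload, cookie);
    }
};

// Accumulator reached through the cookie; plays the role of MasterServer::sum.
struct SumState {
    std::uint64_t sum = 0;
};

// Adds the leading int of one payload to the SumState behind `cookie`.
inline void sumCallback(const std::vector<char>& payload, void* cookie) {
    if (payload.size() < sizeof(int))
        return;  // too short to hold a value
    auto* state = static_cast<SumState*>(cookie);
    int value;
    std::memcpy(&value, payload.data(), sizeof(int));
    state->sum += static_cast<std::uint64_t>(value);
}
```

One consequence of this design, visible in the sketch, is that `forEach` visits every stored object: the callback cannot restrict the scan to a key range, so any filtering has to happen inside the callback.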


```cpp
/**
 * Aggregation via traversing all SegmentEntries throughout the entire Log
 */
uint64_t
Log::aggregate()
{
    uint64_t sum = 0;

    // Iterate over all Segments in the Log
    foreach (ActiveIdMap::value_type& idSegmentPair, activeIdMap) {
        Segment* segment = idSegmentPair.second;

        // Iterate over all SegmentEntries in a Segment
        for (SegmentIterator i(segment); !i.isDone(); i.next()) {
            SegmentEntryHandle seh = i.getHandle();

            // Only aggregate object entries (type tag 560620143 == 0x216A626F)
            if (seh->type() == 560620143) {
                const Object* obj = seh->userData<Object>();
                const int* p = reinterpret_cast<const int*>(obj->data);
                sum += static_cast<uint64_t>(*p);
            }
        }
    }

    return sum;
}
```
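`Log::aggregate` bypasses the hash table and walks the log directly, keeping only entries whose type tag marks them as objects. A minimal sketch of the same traversal, assuming a simplified in-memory log (a vector of segments, each a vector of typed entries); `LogEntry`, `SimpleSegment`, `SimpleLog` and `aggregateLog` are hypothetical names, and only the type tag value is taken from the code above.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <vector>

// Type tag the original code compares against: 560620143 == 0x216A626F.
constexpr std::uint32_t kObjectEntryType = 560620143u;

// Hypothetical simplified log entry: a type tag plus raw payload bytes.
struct LogEntry {
    std::uint32_t type;
    std::vector<char> payload;
};

// A segment is a sequence of entries; the log is a sequence of segments.
using SimpleSegment = std::vector<LogEntry>;
using SimpleLog = std::vector<SimpleSegment>;

// Walks every entry of every segment and sums the leading int of entries
// tagged as objects; entries of any other type are skipped.
inline std::uint64_t aggregateLog(const SimpleLog& entries) {
    std::uint64_t sum = 0;
    for (const SimpleSegment& segment : entries) {
        for (const LogEntry& entry : segment) {
            if (entry.type != kObjectEntryType || entry.payload.size() < sizeof(int))
                continue;
            int value;
            std::memcpy(&value, entry.payload.data(), sizeof(int));
            sum += static_cast<std::uint64_t>(value);
        }
    }
    return sum;
}
```

Like the original, this sketch does not check whether an object entry is still live. In a log-structured store, older versions of an overwritten object can remain in the log until they are cleaned, so the sketch assumes every object was written exactly once.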


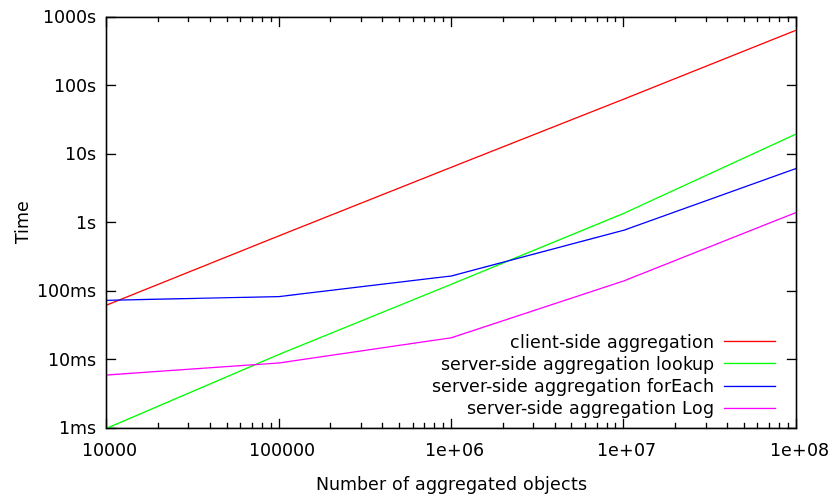

The benchmarks below were run with client and server on separate machines of the Stanford RAMCloud cluster, connected via InfiniBand. After each run, the client-side and server-side sums were checked for equality. In all runs, the hash table size was set to 5 GB.

In this set of benchmarks, all objects stored in a MasterServer are included in the aggregation. For example, when 1,000,000 objects are aggregated, exactly 1,000,000 objects are stored in the MasterServer.

| Number of objects | client-side aggregation | server-side aggregation | server-side aggregation | server-side aggregation |
|---|---|---|---|---|
| 10,000 | 63 ms | 1 ms | 74 ms | 6 ms |
| 100,000 | 648 ms | 12 ms | 84 ms | 9 ms |
| 1,000,000 | 6485 ms | 127 ms | 168 ms | 21 ms |
| 10,000,000 | 64258 ms | 1378 ms | 781 ms | 142 ms |
| 100,000,000 | 652201 ms | 19854 ms | 6245 ms | 1422 ms |
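The ratios between columns can be derived from the table with a little arithmetic. The sketch below copies the measured times into an array (column order as in the table; `BenchmarkRow`, `speedup` and `clientMicrosPerObject` are illustrative names) and computes two quantities from them: the speedup of a server-side column over client-side aggregation, and the client-side time per object.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// One row of the table above: object count, client-side time, and the three
// server-side times in the same column order as the table (all in ms).
struct BenchmarkRow {
    long objects;
    double clientMs;
    double serverMs[3];
};

// Values copied from the table above (all stored objects aggregated).
constexpr std::array<BenchmarkRow, 5> kAllObjects = {{
    {10000L, 63, {1, 74, 6}},
    {100000L, 648, {12, 84, 9}},
    {1000000L, 6485, {127, 168, 21}},
    {10000000L, 64258, {1378, 781, 142}},
    {100000000L, 652201, {19854, 6245, 1422}},
}};

// Speedup of server-side column `col` (0..2) over client-side aggregation.
inline double speedup(std::size_t row, std::size_t col) {
    return kAllObjects[row].clientMs / kAllObjects[row].serverMs[col];
}

// Client-side time per object in microseconds.
inline double clientMicrosPerObject(std::size_t row) {
    return kAllObjects[row].clientMs * 1000.0 / kAllObjects[row].objects;
}
```

For instance, at 100,000,000 objects the last column is about 459 times faster than client-side aggregation (652,201 ms / 1,422 ms), and client-side aggregation costs roughly 6.3 to 6.5 µs per object at every size, consistent with a roughly constant per-object cost on the client path.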

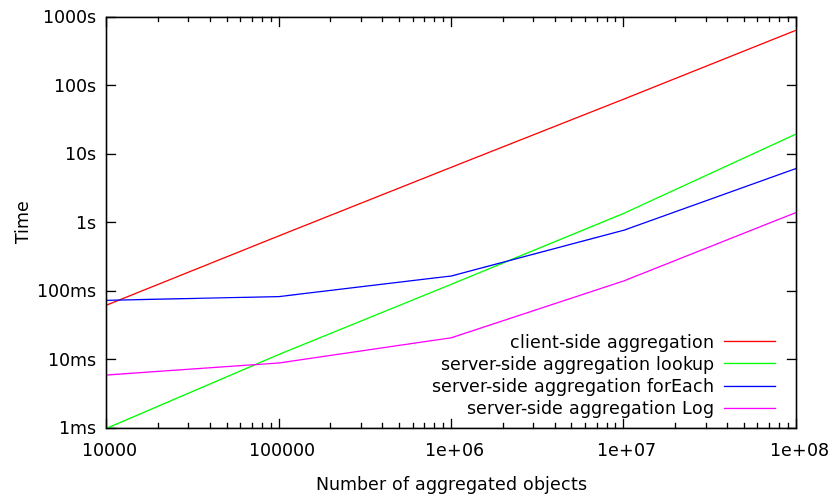

In this set of benchmarks, only 10% of the objects stored in a MasterServer are included in the aggregation. For example, when 1,000,000 objects are aggregated, 10,000,000 objects are stored in total in the MasterServer.

| Number of objects | client-side aggregation | server-side aggregation | server-side aggregation | server-side aggregation |
|---|---|---|---|---|
| 10,000 | 63 ms | 1 ms | 74 ms | 6 ms |
| 100,000 | 648 ms | 12 ms | 84 ms | 9 ms |
| 1,000,000 | 6485 ms | 127 ms | 168 ms | 21 ms |
| 10,000,000 | 64258 ms | 1378 ms | 781 ms | 142 ms |
| 100,000,000 | 652201 ms | 19854 ms | 6245 ms | 1422 ms |
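When only a subset is aggregated, the approaches differ in how much work they do beyond the requested objects: the lookup loop touches only keys in `[0, range)`, while a full traversal (callback or log scan) examines every stored object. The sketch below makes that difference countable. It assumes, hypothetically, that the aggregated subset is the contiguous key range starting at 0 (as the `range` parameter of the lookup loop suggests) and that a scan filters by key; how the subset was selected in the benchmark is not stated here. `Store`, `Result`, `sumRangeByLookup` and `sumRangeByScan` are illustrative names.

```cpp
#include <cassert>
#include <cstdint>
#include <unordered_map>

// Hypothetical store: key -> value (the object's leading int).
using Store = std::unordered_map<std::uint64_t, int>;

struct Result {
    std::uint64_t sum = 0;
    std::uint64_t visited = 0;  // number of stored entries examined
};

// Lookup-based: examines only the keys in [0, range).
inline Result sumRangeByLookup(const Store& store, std::uint64_t range) {
    Result r;
    for (std::uint64_t key = 0; key < range; ++key) {
        auto it = store.find(key);
        if (it == store.end())
            continue;
        ++r.visited;
        r.sum += static_cast<std::uint64_t>(it->second);
    }
    return r;
}

// Scan-based: examines every stored entry and keeps those with key < range.
inline Result sumRangeByScan(const Store& store, std::uint64_t range) {
    Result r;
    for (const auto& kv : store) {
        ++r.visited;
        if (kv.first < range)
            r.sum += static_cast<std::uint64_t>(kv.second);
    }
    return r;
}
```

With 100 stored objects of value 1 and `range = 10`, both functions return a sum of 10, but the lookup version visits 10 entries while the scan visits all 100.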

These benchmarks allow the following conclusions: