Current Design

Goals

- Fast time-to-recovery (TTR) for all data in the system

Achieving fast TTR of a single machine from a single machine is not practical because reconstituting 64 GB at disk rate requires several minutes. Thus, we need to bring more disk heads or SSDs to bear on the problem. To that end we introduce shards. A shard is, simply, a contiguous key range. The key range for each shard is chosen such that the shard represents approximately some fixed size in bytes with some hysteresis. Shards can be split/merged also to allow key ranges to move between servers for request load balancing, but this is secondary to the TTR goal. (Question: What changes if we have shards be contiguous machine/address ranges allowing objects to move between shards? One problem: dealing with client-side key-to-machine lookups.) We expect to maintain, therefore, some near constant number of shards for a single master's main memory. Each of these shards is backed-up by z other (hopefully distinct) machines.

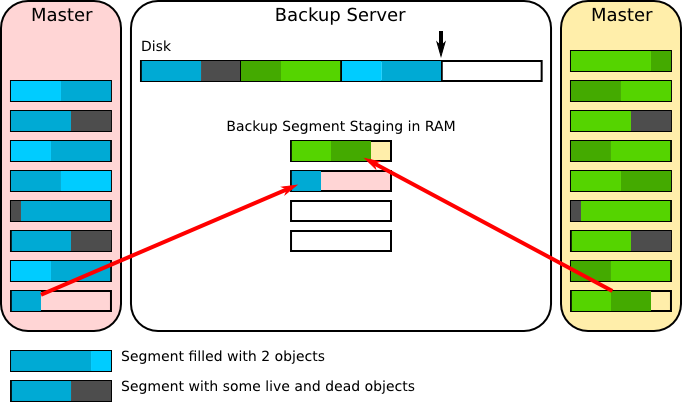

A master for some shard receives all writes to that shard. All those writes are logged in the master and forwarded to the backups. The backups log the write in memory in precisely the same way. Once the in-memory backup log reaches a configurable length that log is committed to disk (we call these fixed length shard-specific log fragments segments). This allows us a knob to control how much buffering we need to achieve some target write bandwidth for our disks. Necessary segment sizes depend on the write bandwidth and seek latency of the logging devices. Standard disks seem to need about 6 MB segments to achieve 90% write bandwidth whereas SSDs seem they will need 128 to 512 KB segments.

Recap

Assumptions

- Must be just as durable as disk-storage

- Durable when write request returns

- Almost always in a state of crash recovery

- Recovery instantaneous and transparent

- Records of a few thousand bytes, perhaps partial updates

...

- Idle standbys versus homogeneous load

- Parameters - replicas (z) and shards; how do we choose them? On what granularity are they?

- Major failures: How long to bring up if we lose DC power?

- Reconstituting failed backup disks? Don't?

- Adds to the reason for more, smaller disks in a single machine

- More heads = more write bandwidth per node

- If we partition the shards to only log to a specific disk then we only have to replicate 1/4 as many shards when a single disk fails

- The downside, of course, is that disk failures will occur more often, and cost

- All backups must be able to initiate reconstruction. Why?

- Flash may be worth another look, particularly if we can play games in the FTL.

Old Notes

Issues

- Disk write bandwidth

- Best approach to achieve good write bandwidth on disk: have at least 3 disks, one for write logging, one archiving the last amount of log data, one for compaction. Using this scheme we can completely eliminate seeks which should give us about 100 MB/s. Unfortunately, we'll need RAID as well so the total is more than 3 disks just to achieve the write bandwidth of 1 disk.

- Network bandwidth

- Incast issues on reconstruction

- Particularly at TOR switches, etc.

- Otherwise lower-bound on machine reconstruction is about 1 min.

Looming Questions

- Read/Write mix?

- Merge recent writes

- Making data available during recovery

- If backups are going to have to serve shards while recovering a master why recover at all?

Alternative - No Recovery

- Soft state masters, backups

- Once a master or backup fails a new one is chosen and the state is sent there using remaining backups/masters.

- All soft state, fixes many consistency issues on recovery

- Hold most recent writes in a partial version of the shard in RAM

- LRU

- Don't evict values from RAM on write-back (this allows fast recovery if backups need to compare recent values for consistency)

- We get write coalescing for cheap

- Can maintain on-disk structure in a compact and quickly serviceable layout

- ?? Is this going to be impossible to maintain with decent performance, though?

- If so, we can still do logging, but how quickly can we service requests then?

- On master failure

- For each shard served one of the backups for that shard will be elected master

- The election will be aware of the load on each server so the master will tend to the servers that are less loaded

- Compare backups after a master is elected to check consistency

- The master could've failed while writes were in flight

- Version numbers make this easy

- Only the most recently written value can be inconsistent, so we only need to compare k values for k backup shards

- This is much cheaper than an agreement protocol for the backups

- After getting no response on a write to a master the client will need to retry the write to one of the backups, which will redirect the client to the new master, if the write was already seen the new write will fail harmlessly, if the write was not seen it will succeed.

- To repair locality we'll punt to a higher level service which will eventually migrate the data

- Backups are not recovered either

- On non-responsive backups a new backup is chosen based on load and location (must be outside of rack, etc.) and bootstrapped

- Bootstrap from the primary or from the other backup?

Questions

...

- We'll need some target ratios based on the failure rate of machines in order to trigger migration of shards to less loaded servers

...

- Static?

- Dynamic?

...

- Shard and store along with the values

- How do we shard, though, since it is ordered on a different key

...

10/7 Discussion (Logging/Cleaning on Backups)

Knobs/Tradeoffs

- Time to on disk durability (TTD)

- Also determines how much "NVRAM" we need

- Memory needed to "stage" or buffer writes

- Write bandwidth - (WE is (new data amount)/(write bandwidth))

- Time to Recover a shard/machine (TTR)

- Shards are intended to speed recovery time

Cluster writes in chunks ("shardlets") to be batch written to segments on disk

- From previous discussion, achieves about 90% WE using 6 MB chunks per shard

- 3z GB RAM for 512 shards

- 25z TTD in case of power failure

- 90% RE providing TTR of 5z s per shard

Question remains: Log compaction or checkpointing to keep replay time from growing indefinitely

No-read LFS-style cleaning

- Masters write to 6MB shardlets in log style

- Writes are dispatched to all backups just as before

- For each shard the backup maintains a shardlet image in RAM that is in lock-step with the servers

- Once the server is done placing writes on a shardlet the backup commits it to disk

- The master recovers space from deleted or updated old values by performing compaction

- This is simply an automatic write of data from two or more old shardlets to a new one

- This allows the old shardlets to be reclaimed

- To allow the backups to reclaim the space in their on-disk logs the master sends a short trailer after finishing writes to a shardlet describing which previous shardlets are now considered "free"

- The backup maintains a catalog of where active data for each shard is (its shardlets) on disk and periodically writes this to the log

Back of the envelope

Master cleans shardlets with less than some amount of utilization

Shardlet Util. | Disk/Master Memory Util. (Median Shardlet Util.) | TTR Off Optimal | Remaining Disk BW Off Optimal | Combined WE with 90% approach from earlier |

|---|---|---|---|---|

50% | 75% | 1.33x slower | ~50% | 45% |

10% | 55% | 1.82x slower | ~88% | 80% |

- Need to take a serious look at the distribution, however. Will need to force bimodality of shardlet distribution, which will bank on some locality (temporal will be just fine)

- We tie our memory management of the server to the disk layout

- Specifically, we can't afford to have the memory be under utilized, but we won't benefit much if shardlets are dense

Fast (fine-grained, shard interleaved) logging + shardlets

- May be worth looking at some more, but back of the envelope numbers suggest that on top of the 10 s or so to restore a shard from the shardlet log another 2 minutes or so would be needed to replay the log on top of it

- A possible approach is to do shardlets are multiple granularities, but exploring this is probably only worth it if we feel we aren't inside our time budget for TTD against power failures