Contents)

- Cluster Outline

- User's Guide

- User setup

- Running RAMCloud

- Development and enhancement

- Performance result

- clusterperf.py

- TBD) recovery.py

- System administrator's guide

- System configuration

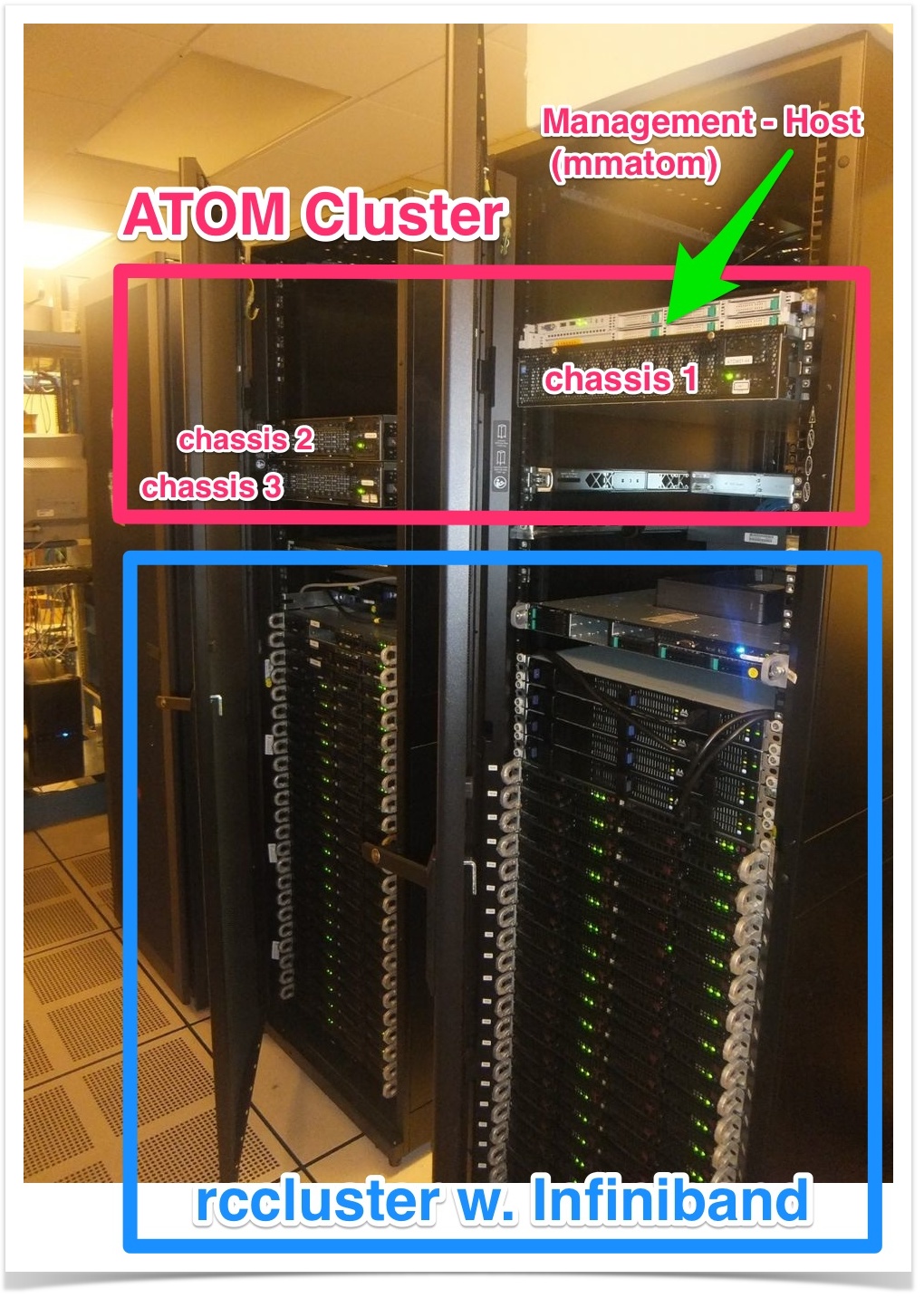

- System photograph

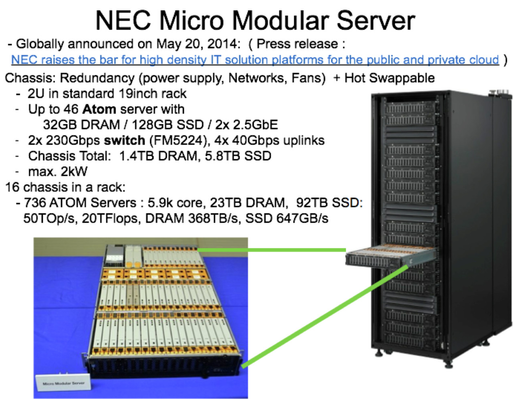

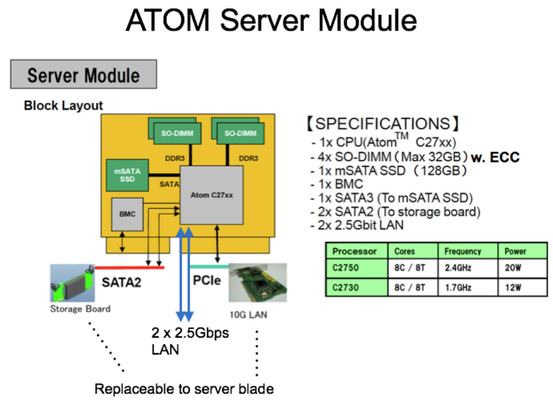

- Server module specification

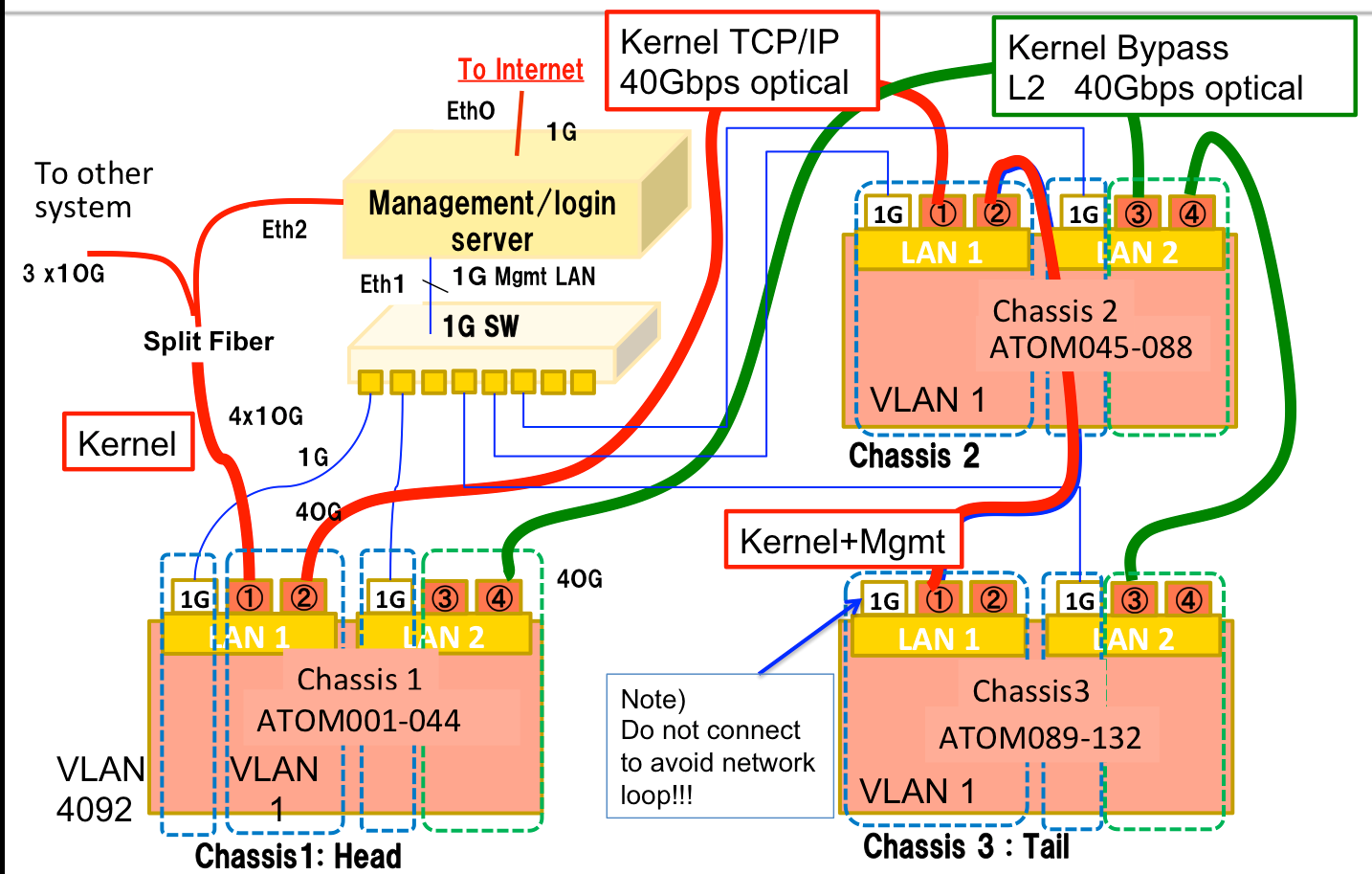

- Network connection

- Security solution

Cluster Outline)

The procedure is basically the same as for rccluster, except that:

- We use a local git repository located on 'mmatom'.

- The source code and scripts are pre-tested for the ATOM-based 'RAMCloud in a Box'.

- The cluster has 132 ATOM servers connected to a dedicated management server, 'mmatom.scs.stanford.edu', which is directly connected to the Internet. See System configuration below for details.

User's Guide)

User setup)

- Log in with ssh to the management server 'mmatom.scs.stanford.edu' using public key authentication.

- Install your cluster-login key pair in ~/.ssh. For existing RAMCloud users, the keys have already been copied from your home directory on rcnfs.

- Add the cluster-login public key to ~/.ssh/authorized_keys.

Note) Your home directory is shared with all the ATOM servers over NFS, so you can log in to any ATOM server with public key authentication.


- Initialize known_hosts:

- You can use /usr/local/scripts/knownhost_init.sh

- Usage) /usr/local/scripts/knownhost_init.sh <ServerNumberFrom> <ServerNumberTo>

If the host is already initialized, the output of 'hostname' on the remote machine is displayed. Otherwise, ssh asks whether to add the host to the known_hosts database; type 'yes'.

- Example)

$ knownhost_init.sh 1 20

atom001

atom002

:

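The behavior of knownhost_init.sh described above can be approximated by the following Python sketch. It is not the script's actual source: the 'atomNNN' host-name format is inferred from the example output, and looping over `ssh <host> hostname` is an assumption about how the script works.

```python
import subprocess
import sys
from typing import List

NUM_ATOM_SERVERS = 132  # cluster size stated in the outline


def atom_hostnames(first: int, last: int) -> List[str]:
    """Return host names for servers first..last, inclusive.

    Assumption: hosts are named 'atom' plus a zero-padded 3-digit number,
    as in the example output (atom001, atom002, ...).
    """
    if not 1 <= first <= last <= NUM_ATOM_SERVERS:
        raise ValueError("server numbers must satisfy 1 <= from <= to <= %d"
                         % NUM_ATOM_SERVERS)
    return ["atom%03d" % n for n in range(first, last + 1)]


def init_known_hosts(first: int, last: int) -> None:
    """Run 'hostname' on each server over ssh.

    A host already in ~/.ssh/known_hosts just prints its name; for a new
    host, ssh asks whether to trust its key, and you answer 'yes'.
    """
    for host in atom_hostnames(first, last):
        subprocess.run(["ssh", host, "hostname"], check=False)


# Only act when given exactly <ServerNumberFrom> <ServerNumberTo>.
if __name__ == "__main__" and len(sys.argv) == 3:
    init_known_hosts(int(sys.argv[1]), int(sys.argv[2]))
```

For example, running this sketch (saved under a hypothetical name such as knownhost_init_sketch.py) with the arguments 1 20 would visit atom001 through atom020, matching the example above.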

Compile RAMCloud on the host 'mmatom')

- Git clone RAMCloud from the local repository on 'mmatom'; this copy is pre-tested for the ATOM cluster.

- Directory structure:

- Compile

- cd ramcloud; make DEBUG=no

Run clusterperf.py from 'mmatom')

- Prerequisite: the RAMCloud source has been compiled.

- Settings are defined in localconfig.py and config.py, and are imported into clusterperf.py through cluster.py.

- Basic settings for running RAMCloud applications on the ATOM servers are provided in config.py. So far we have tested the following; more commands will be tested later:

- clusterperf.py basic (default parameters equivalent to rccluster: replica=3, server=4, backup=1)

- TBD: clusterperf.py (running all of the standard performance tests)

- TBD: recovery.py
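The layering of settings described above (shared defaults, then per-user overrides from localconfig.py) can be illustrated with a minimal sketch. It assumes localconfig.py only overrides values that config.py already defines. The dictionary-based loader and the setting names are hypothetical; the real scripts share settings through Python module imports rather than this function.

```python
import importlib
from typing import Any, Dict

# Hypothetical setting names; the values are the 'basic' defaults quoted above.
DEFAULTS: Dict[str, Any] = {"replicas": 3, "servers": 4, "backups": 1}


def load_config(defaults: Dict[str, Any],
                local_module: str = "localconfig") -> Dict[str, Any]:
    """Return defaults overridden by the public names in local_module.

    If local_module cannot be imported, the defaults are returned unchanged.
    """
    settings = dict(defaults)  # copy so DEFAULTS itself is never modified
    try:
        local = importlib.import_module(local_module)
    except ImportError:
        return settings
    # Public (non-underscore) module attributes win over the defaults.
    settings.update({name: value for name, value in vars(local).items()
                     if not name.startswith("_")})
    return settings
```

With no localconfig.py on the path, load_config(DEFAULTS) simply returns the defaults; a localconfig.py containing only `servers = 8` would change that one value.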

- Reserve or lease ATOM servers:

- rcres will be ported to 'mmatom' too.

- Edit config.py to match the servers you have reserved.

- Run clusterperf.py

$ scripts/clusterperf.py
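When editing config.py for a reserved range, the host entries can be generated rather than typed by hand. The sketch below assumes a hosts list of (hostname, ip, id) tuples and the atomNNN naming; both are assumptions, and the addresses must come from the real cluster configuration (the ip_of mapping in the example is a placeholder, not the cluster's actual addressing).

```python
from typing import Callable, List, Tuple

NUM_ATOM_SERVERS = 132  # cluster size stated in the outline


def reserved_hosts(first: int, last: int,
                   ip_of: Callable[[int], str]) -> List[Tuple[str, str, int]]:
    """Return (hostname, ip, id) entries for servers first..last, inclusive.

    ip_of maps a server number to its address; it is supplied by the caller
    because the real addressing scheme is not documented here.
    """
    if not 1 <= first <= last <= NUM_ATOM_SERVERS:
        raise ValueError("server range must lie within 1..%d" % NUM_ATOM_SERVERS)
    return [("atom%03d" % n, ip_of(n), n) for n in range(first, last + 1)]


# Example: reserve atom011..atom014 with a placeholder (not real) address scheme.
hosts = reserved_hosts(11, 14, lambda n: "10.0.0.%d" % n)
```

Range checking against the 132-server limit catches typos in the reservation before clusterperf.py tries to contact a nonexistent host.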

Performance result)

Clusterperf.py

Recovery.py

System administrator's guide)

System configuration)

1. System photograph)

2. ATOM server module specification)

- Three chassis are installed. (The rack in the picture above contains 16 chassis.)

- The installed ATOM modules use the Atom C2730 (1.7 GHz, 8 cores/8 threads, 12 W).

3. Network connection)

- For historical reasons, and with future experiments in mind, the VLAN configuration differs between chassis.

- The management server is directly connected to the Internet; the cluster is isolated from other Stanford servers.

- The management server acts as the firewall, login server, NIS server, DHCP server, and PXE server for reconfiguring the ATOM servers.

Security solution)
