Contents)

- Cluster Outline

- User's Guide

- User setup

- Running RAMCloud

- Development and enhancement

- Performance result:

- clusterperf.py

- TBD) recovery.py

- System administrator's guide

- Adding user with dedicated script.

- Hardware maintenance

- Security solution

- Stanford's ATOM Cluster - configuration, etc

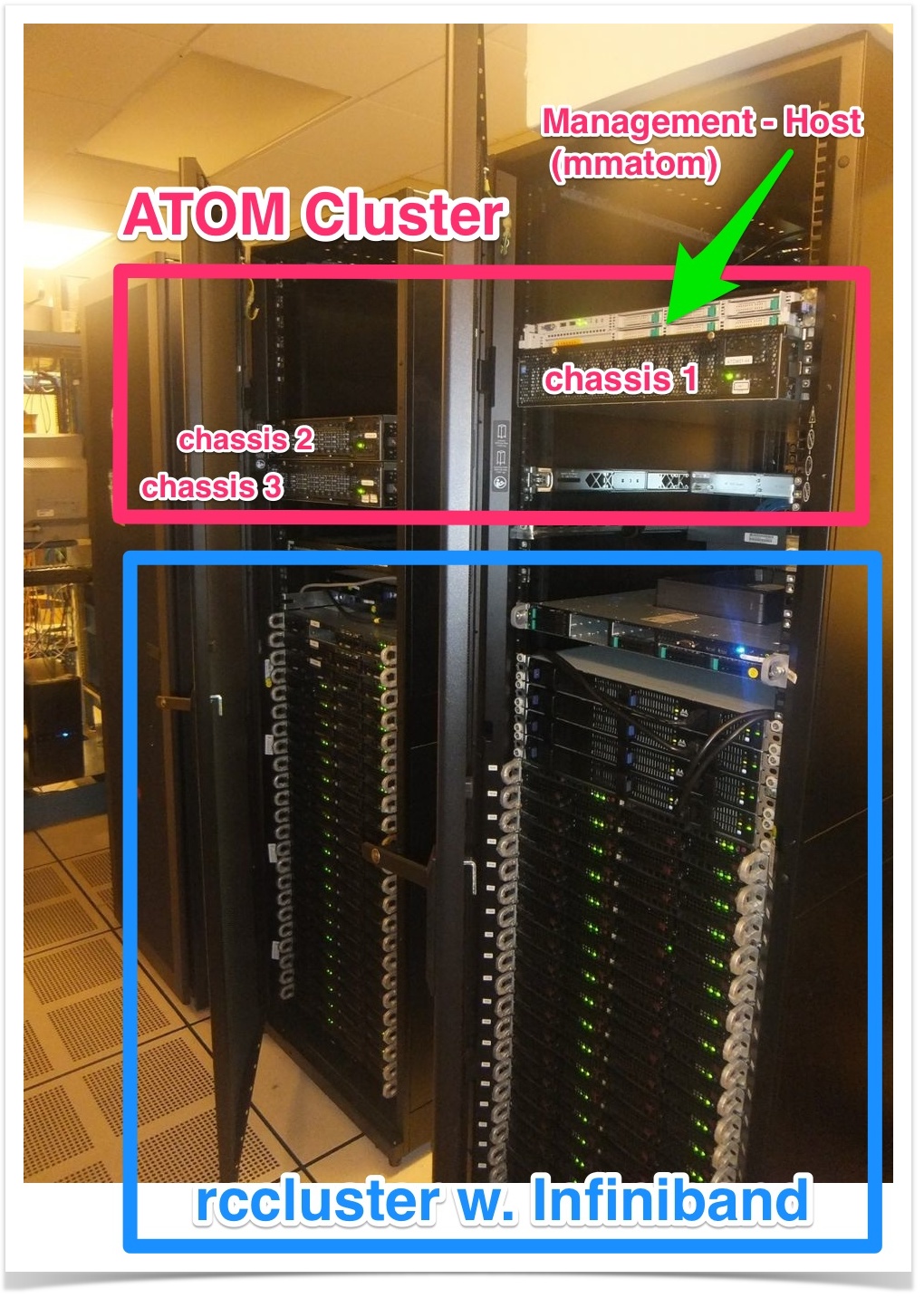

- System photograph

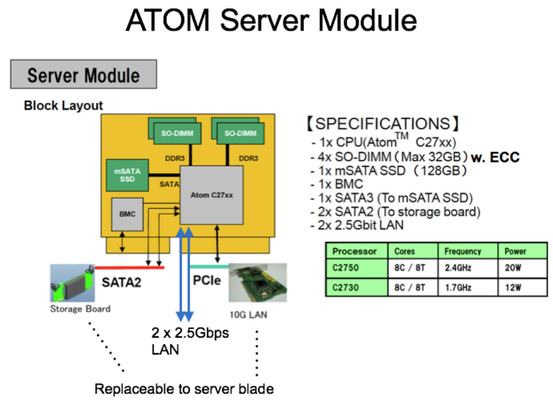

- Server module specification

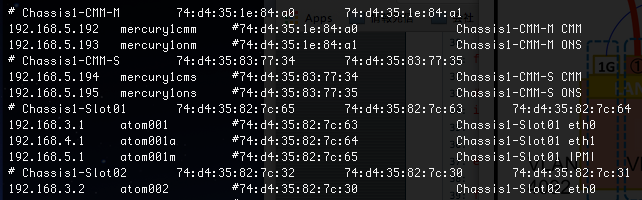

- Network connection

Cluster Outline)

The procedure is basically the same as on rccluster, except that:

NOTE) I have created and tested a repository in /home/satoshi/ramcloud-dpdk.git. You can clone it to try the latest RAMCloud on ATOM. Please try it. The instructions are written below.

- The cluster has 132 ATOM servers (total: 1,056 cores, 4.1 TB DRAM, 16.5 TB SSD) connected to a dedicated management server, 'mmatom.scs.stanford.edu', which is directly connected to the Internet. See the system configuration below for details.

- An unstable server can be disconnected with the management tool, and the IP addresses/hostnames given out remain continuous. An IP address and its hostname are always associated. You will find the details in System administrator's guide: Hardware maintenance.
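The totals quoted above can be cross-checked with a little arithmetic. The sketch below is illustrative only: it takes the 8 cores per module from the server module specification on this page, and it assumes identical servers and decimal units (1 TB = 1000 GB) to derive rough per-server figures, which are not stated anywhere in this guide.

```python
# Figures quoted on this page.
SERVERS = 132
CORES_PER_SERVER = 8      # C2730 module: 8 cores / 8 threads
TOTAL_DRAM_TB = 4.1
TOTAL_SSD_TB = 16.5

# Total core count implied by the per-module specification.
total_cores = SERVERS * CORES_PER_SERVER

# Assumption: identical servers and decimal units (1 TB = 1000 GB).
dram_per_server_gb = TOTAL_DRAM_TB * 1000 / SERVERS
ssd_per_server_gb = TOTAL_SSD_TB * 1000 / SERVERS

print(f"total cores: {total_cores}")
print(f"DRAM per server: about {dram_per_server_gb:.1f} GB")
print(f"SSD per server: about {ssd_per_server_gb:.1f} GB")
```

The computed core count reproduces the 1,056 cores quoted above; the per-server values are only estimates under the stated assumptions.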

User's Guide)

User setup)

- Log in via ssh to the management server 'mmatom.scs.stanford.edu' using public key authentication and the special SSH port.

- Install your 'key pair for the cluster-login' to ~/.ssh . For existing RAMCloud users, it is already copied from your home directory.

- Add the cluster-login public key to ~/.ssh/authorized_keys .

Note)

- Your home directory is shared with all the ATOM servers over NFS, so you can log in to all atom servers with public key authentication.

- Do not copy the private key you use for mmatom login. Please create a different key pair for ssh from mmatom to the ATOM cluster.

- A key pair can be generated with a command such as: $ ssh-keygen -t rsa -b 2048


- Initialize known_hosts:

- You can use /usr/local/scripts/knownhost_init.sh

- Usage) /usr/local/scripts/knownhost_init.sh <ServerNumberFrom> <ServerNumberTo>

If the host is already initialized, the result of 'hostname' on the remote machine is displayed; otherwise you are asked whether to add the host to the known_hosts database, and you should type 'yes'.

- Example)

$ knownhost_init.sh 1 20

atom001

atom002

:


- Note) You may see the following error:

- Permission denied (publickey).

This sometimes happens because your user account has not yet been created on atomXXX; we do not use NIS or LDAP so far. Ask your administrator to set up the remote user on atomXXX. See 'System administrator's guide' below.

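To make the server-number range concrete, here is a minimal sketch of the kind of loop such a script might perform. It is not the actual knownhost_init.sh: the function names are hypothetical, and the assumption that each host is checked with 'ssh atomNNN hostname' is inferred only from the behaviour described above. The sketch builds the commands without running them.

```python
def atom_hostnames(first, last):
    """Return hostnames atomNNN for server numbers first..last (inclusive)."""
    return [f"atom{n:03d}" for n in range(first, last + 1)]


def hostname_check_commands(first, last):
    """Build one 'ssh <host> hostname' command per server; nothing is executed."""
    return [["ssh", host, "hostname"] for host in atom_hostnames(first, last)]


if __name__ == "__main__":
    # Same range as the example above: servers 1 to 20.
    for cmd in hostname_check_commands(1, 20):
        print(" ".join(cmd))
```

Each printed command could be run by hand on mmatom for a single host when a "Permission denied (publickey)" error needs to be isolated.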

Compile RAMCloud on the host 'mmatom' )

- Clone from the local RAMCloud repository, which is pre-tested for the ATOM cluster. The directory structure is almost the same as that of Stanford RAMCloud.

$ git clone /var/git/ramcloud-dpdk.git

$ git clone /home/satoshi/ramcloud-dpdk.git // my debugged local repository

$ cd ramcloud-dpdk

$ git submodule update --init

Note) DO NOT add the '--recursive' option to git, because git then creates the following entry in 'logcabin/.git/config', which refers to an obsolete repository and results in a timeout.

> [submodule "gtest"]

> url = git://fiz.stanford.edu/git/gtest.git

- Compile RAMCloud by typing make without any arguments.

$ make

Note)

The following make options are now the default and are specified in GNUmakefile:

Debug=no

ARCH=atom

CC, CXX, and AR are specified directly in order to use '/usr/bin/gcc (4.4.7)' instead of '/usr/local/bin/gcc (4.9.1)' for compiling RAMCloud.

Run clusterperf.py from 'mmatom' on the atomXXX in the cluster)

- You need to have RAMCloud compiled.

- Reserve ( Lease ) ATOM servers with /usr/local/bin/mmres

- mmres has been ported from rcres. It manages resources for RAMCloud, e.g. backup files, DPDK resource files, etc. The backup replica is preserved until the mmres lease expires, so you can reuse the backup in a different program while the lease continues.

- Check usage with: $ mmres --help

Example)

- Edit config.py to match the servers you reserved. (Default is 1..12)

- Run clusterperf.py :

The default parameters are equivalent to those on rccluster (replica=3, server=4, backup=1), except that currently only the basic test runs.

Quick Hack: call through /usr/local/bin/mmfilter

$ mmfilter clusterperf.py basic

- Run clientSample: it is simple sample client code.

Note) Limitation: the original code repeats an overwrite 1M times; however, it hangs after 104,000 iterations. This could be a log cleaning problem. Currently the test succeeds at 100,000 iterations.

- $ cd clientSample

- $ make

- $ make run

- Benchmarks/samples still to be done:

- In progress: running all the default tests with clusterperf.py. The test currently fails at "readDistRandom" after "multiWrite_oneMaster".

- TBD: recovery.py

- NOTE)

- scripts/config.py is customized for the ATOM cluster so that we can run RAMCloud tests with the default options.

- Limitations)

- DPDK debug messages are printed to stdout first. We are working on suppressing them to make the results more readable.

- mmres does not refer to your TZ variable. Please use the local time where the cluster is located. We would like to modify mmres to honor 'export TZ=xxx', as the 'date' command does.

Performance result)

Clusterperf.py

Note) We are now trying to move the distracting DPDK log output from standard output to a log file.

Measured on Wed 25 Feb 2015 12:31:42 PM PST :

basic.read100 13.456 us read single 100B object (30B key) median

basic.read100.min 12.423 us read single 100B object (30B key) minimum

basic.read100.9 13.837 us read single 100B object (30B key) 90%

basic.read100.99 17.698 us read single 100B object (30B key) 99%

basic.read100.999 24.356 us read single 100B object (30B key) 99.9%

basic.readBw100 4.7 MB/s bandwidth reading 100B object (30B key)

basic.read1K 20.425 us read single 1KB object (30B key) median

basic.read1K.min 19.593 us read single 1KB object (30B key) minimum

basic.read1K.9 20.786 us read single 1KB object (30B key) 90%

basic.read1K.99 24.306 us read single 1KB object (30B key) 99%

basic.read1K.999 36.569 us read single 1KB object (30B key) 99.9%

basic.readBw1K 33.4 MB/s bandwidth reading 1KB object (30B key)

basic.read10K 52.461 us read single 10KB object (30B key) median

basic.readBw10K 125.6 MB/s bandwidth reading 10KB object (30B key)

basic.read100K 358.567 us read single 100KB object (30B key) median

basic.readBw100K 187.6 MB/s bandwidth reading 100KB object (30B key)

basic.read1M 3.449 ms read single 1MB object (30B key) median

basic.readBw1M 212.1 MB/s bandwidth reading 1MB object (30B key)

basic.write100 43.307 us write single 100B object (30B key) median

basic.write100.min 41.031 us write single 100B object (30B key) minimum

basic.write100.9 47.528 us write single 100B object (30B key) 90%

basic.write100.99 86.543 us write single 100B object (30B key) 99%

basic.write100.999 38.363 ms write single 100B object (30B key) 99.9%

basic.writeBw100 542.6 KB/s bandwidth writing 100B object (30B key)

basic.write1K 63.481 us write single 1KB object (30B key) median

basic.write1K.min 60.453 us write single 1KB object (30B key) minimum

basic.write1K.9 66.720 us write single 1KB object (30B key) 90%

basic.write1K.99 126.391 us write single 1KB object (30B key) 99%

basic.write1K.999 41.500 ms write single 1KB object (30B key) 99.9%

basic.writeBw1K 4.9 MB/s bandwidth writing 1KB object (30B key)

basic.write10K 199.648 us write single 10KB object (30B key) median

basic.writeBw10K 12.0 MB/s bandwidth writing 10KB object (30B key)

basic.write100K 1.508 ms write single 100KB object (30B key) median

basic.writeBw100K 17.8 MB/s bandwidth writing 100KB object (30B key)

basic.write1M 41.949 ms write single 1MB object (30B key) median

basic.writeBw1M 21.8 MB/s bandwidth writing 1MB object (30B key)

# RAMCloud multiRead performance for 100 B objects with 30 byte keys

# located on a single master.

# Generated by 'clusterperf.py multiRead_oneMaster'

#

# Num Objs Num Masters Objs/Master Latency (us) Latency/Obj (us)

#----------------------------------------------------------------------------

1 1 1 23.0 22.99

2 1 2 28.3 14.15

3 1 3 33.2 11.07

9 1 9 49.5 5.50

50 1 50 168.7 3.37

60 1 60 235.5 3.93

70 1 70 209.1 2.99
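The last column of the multiRead output appears to be the total latency divided by the number of objects. The sketch below checks that reading against the rows listed above; the tuple layout and function names are illustrative and are not part of clusterperf.py.

```python
# Rows copied from the multiRead_oneMaster output above:
# (num_objs, num_masters, objs_per_master, latency_us, latency_per_obj_us)
ROWS = [
    (1, 1, 1, 23.0, 22.99),
    (2, 1, 2, 28.3, 14.15),
    (3, 1, 3, 33.2, 11.07),
    (9, 1, 9, 49.5, 5.50),
    (50, 1, 50, 168.7, 3.37),
    (60, 1, 60, 235.5, 3.93),
    (70, 1, 70, 209.1, 2.99),
]


def per_object_latency(latency_us, num_objs):
    """Total multiRead latency divided by the number of objects read."""
    return latency_us / num_objs


def max_deviation(rows):
    """Largest gap between the printed and the recomputed per-object latency."""
    return max(
        abs(per_object_latency(latency, num_objs) - printed)
        for num_objs, _masters, _per_master, latency, printed in rows
    )


if __name__ == "__main__":
    for num_objs, _masters, _per_master, latency, printed in ROWS:
        recomputed = per_object_latency(latency, num_objs)
        print(f"{num_objs:3d} objs: printed {printed:6.2f} us, recomputed {recomputed:6.2f} us")
```

Small differences are expected because the printed total latency is rounded to one decimal place.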

Recovery.py

System administrator's guide)

Adding User)

So far we do not use NIS/LDAP for account management. Please use a script to set up new users.

User setup procedure) The tool is still being debugged; please wait before using it.

- /usr/local/mmutils/mmuser/mmuser.sh <UserName>

The account on mmatom is copied to all the atomXXX.

Hardware maintenance)

- Getting a module's slot/chassis information and MAC address from a hostname or IP address.

- You will find the information in mmatom: /etc/hosts :

IP address, hostname, MAC address, installed slot, and connected host port.

- For atomXXX(Y), XXX always corresponds to the final octet of the IP address:

e.g. atom100 == 192.168.3.100, atom110a == 192.168.4.110

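The naming rule above can be expressed as a small helper. This is only a sketch: the function names are hypothetical, and the pattern assumes that XXX is always three digits and that the optional suffix Y is a single lowercase letter, as in the two examples. The subnet part of the address is not derived, because it differs between hosts; /etc/hosts on mmatom remains the authoritative source.

```python
import re

# Assumed hostname shape: 'atom', three digits, optional one-letter suffix.
_ATOM_NAME = re.compile(r"^atom(\d{3})([a-z]?)$")


def final_octet(hostname):
    """Return the last number of the IP address implied by an atomXXX(Y) name."""
    match = _ATOM_NAME.match(hostname)
    if match is None:
        raise ValueError(f"not an atomXXX(Y) hostname: {hostname!r}")
    return int(match.group(1))


def matches(hostname, ip_address):
    """Check that the last part of ip_address agrees with the hostname number."""
    return int(ip_address.rsplit(".", 1)[1]) == final_octet(hostname)


if __name__ == "__main__":
    # The two examples given above.
    print(final_octet("atom100"), matches("atom100", "192.168.3.100"))
    print(final_octet("atom110a"), matches("atom110a", "192.168.4.110"))
```

A check like this can help spot a mistyped /etc/hosts entry before a module is pulled from its slot.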

Security solution)

- SSH into management server

- Job spawning to cluster servers from management server

- Cluster management

- Server console

- IPMI to CMM, BMC

- USB connection to ONS from front panel

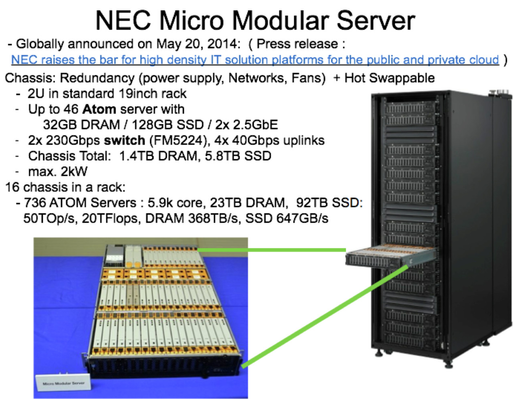

Stanford's ATOM Cluster - configuration, etc)

1. system photograph)

2. ATOM server module specification)

- 3 chassis are installed. (The rack in the above picture contains 16 chassis.)

- The installed ATOM modules use the C2730 (1.7GHz, 8 cores/8 threads, 12 W)

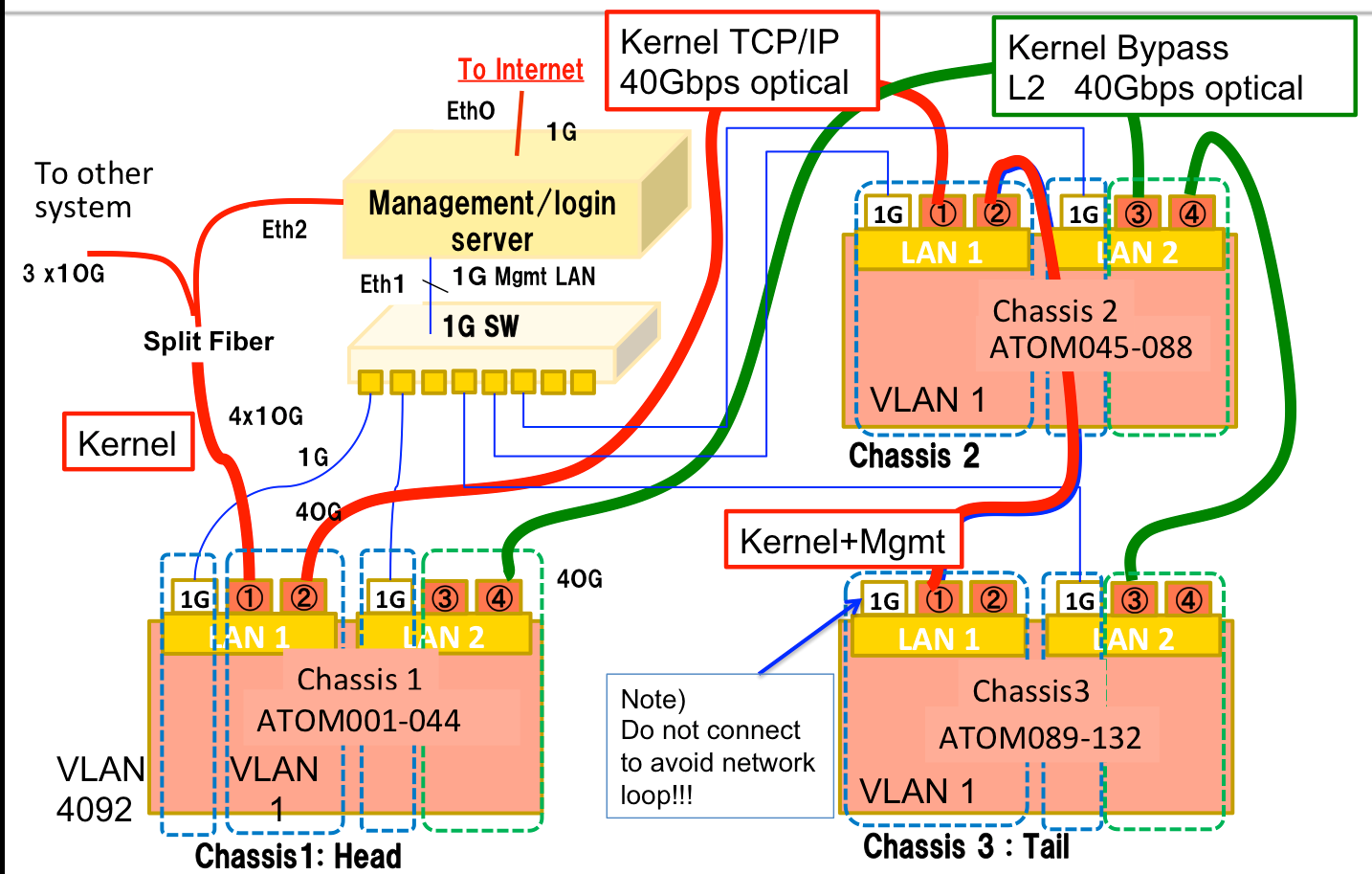

3. Network connection)

- For historical reasons and to allow for future experiments, the VLAN configuration differs between chassis.

- The management server is directly connected to the Internet; the cluster is isolated from other Stanford servers.

- The management server works as firewall, login server, NIS server, DHCP server, and PXE server for reconfiguring ATOM servers.