Some application assumptions feeding into this discussion:

- most data are written once

- read-dominated workload (writes may be less than 10% or even less than 1%)

- while there is little locality, most requests refer to recent data (useful for power management)

Technology review

The following table compares the key characteristics of storage technologies. When possible, the values below focus on the raw potential of the underlying technology (ignoring packaging or other system issues). In other cases, I use representative numbers from current systems.

| Technology | Capacity | BW | Read Latency | Write Latency | Cost | Power Consumption |
|---|---|---|---|---|---|---|
| Server Disk | 1TB | 100MB/s | 5ms | 5ms | $0.2/GB | 10W idle - 15W active |
| Low-end Disk | 200GB | 50MB/s | 5ms | 5ms | $0.2/GB | 1W idle - 3W active |
| Flash (NAND) | 128GB | 100MB/s | 20µs | 200µs | $2/GB | 1W active |
| DRAM (DIMM) | 4GB | 10GB/s | 60ns | 60ns | $30/GB | 2W - 4W |
| PCM | 50x DRAM? | DRAM-like? | 100ns | 200ns | disk-like? | 1W idle - 4W active? |

Caption: capacity refers to a system (not chip), BW refers to max sequential bandwidth (or channel BW), read and write latency assume random access (no locality).
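A few derived figures make the table easier to compare. The sketch below is only illustrative: it copies the table values, assumes decimal units (1 GB = 1000 MB), and assumes a single outstanding request when turning latency into a request rate. The class and field names are made up for this example, and PCM is left out because its entries are still guesses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    name: str
    capacity_gb: float      # system capacity, decimal GB
    bandwidth_mb_s: float   # max sequential bandwidth, decimal MB/s
    read_latency_ns: float  # random-access read latency
    cost_per_gb: float      # dollars per GB

    def full_scan_seconds(self) -> float:
        """Time to stream the whole device once at peak sequential bandwidth."""
        return self.capacity_gb * 1000 / self.bandwidth_mb_s

    def random_reads_per_second(self) -> float:
        """Upper bound with one outstanding request (no parallelism assumed)."""
        return 1e9 / self.read_latency_ns

    def device_cost(self) -> float:
        """Cost of the whole device in dollars."""
        return self.capacity_gb * self.cost_per_gb


# Values copied from the table above.
DEVICES = [
    Device("Server Disk", 1000, 100, 5_000_000, 0.2),
    Device("Low-end Disk", 200, 50, 5_000_000, 0.2),
    Device("Flash (NAND)", 128, 100, 20_000, 2.0),
    Device("DRAM (DIMM)", 4, 10_000, 60, 30.0),
]

if __name__ == "__main__":
    for d in DEVICES:
        print(f"{d.name:13s} scan={d.full_scan_seconds():>8.1f}s "
              f"reads/s={d.random_reads_per_second():>12.0f} "
              f"cost=${d.device_cost():.0f}")
```

Under these assumptions a server disk needs on the order of hours to stream its full capacity, while a DIMM needs well under a second; the gap in random reads per second between disk and Flash follows directly from the latency column.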

Other interesting facts to keep in mind:

- Flash is read and written in ~2KB pages but erased in ~256KB blocks

- Durability: 10^6 write cycles for Flash, 10^8 for PCM

- Disks take seconds to come out of standby, while Flash and PCM take microseconds.

- Flash can be a USB, SATA, PCIe, ONFI, or DIMM device. No clear winner yet.

- FTL (Flash Translation Layer) should be customized to match access patterns
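The page/erase sizes and the durability figure above lend themselves to a quick back-of-the-envelope check. This is a rough sketch, not a model of any real FTL: it assumes perfect wear leveling, a hypothetical `write_amplification` factor (1.0 means every host write costs exactly one flash write), and continuous writing at the full sequential bandwidth from the table.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def pages_per_erase_block(page_kb: int = 2, erase_block_kb: int = 256) -> int:
    """Number of read/write pages covered by one erase unit."""
    return erase_block_kb // page_kb


def wear_out_years(capacity_bytes: float,
                   write_bytes_per_s: float,
                   cycles: float,
                   write_amplification: float = 1.0) -> float:
    """Years until every cell reaches its cycle limit.

    Assumes perfect wear leveling, so the total data that can be written
    is capacity * cycles, reduced by the write amplification factor.
    """
    total_writable = capacity_bytes * cycles / write_amplification
    return total_writable / write_bytes_per_s / SECONDS_PER_YEAR


if __name__ == "__main__":
    print("pages per erase block:", pages_per_erase_block())
    # 128GB Flash device, 100MB/s sustained writes, 10^6 cycles (figures from above).
    years = wear_out_years(128e9, 100e6, 10**6)
    print(f"wear-out time at full write bandwidth: {years:.1f} years")
```

With the numbers quoted here, rewriting one 2KB page in place would in the worst case touch an erase unit holding 128 pages, which is one reason the FTL matters. Since the assumed workload is read dominated, the actual write rate would be far below the full-bandwidth case used in the example.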

----System-level Comparison (from the FAWN HotOS'09 paper)

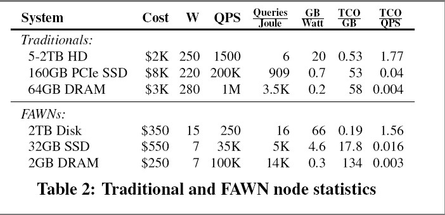

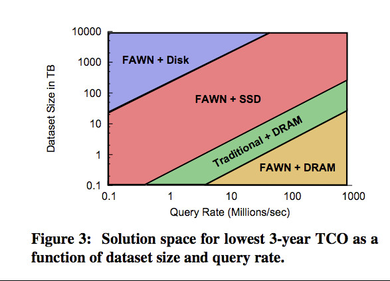

What does this mean at the system level? The FAWN HotOS paper tried to quantify both performance and total cost of ownership (TCO) for servers using DRAM, disks, and Flash when servicing Seek (memcached) and Scan (Hadoop) queries. They also consider two processor scenarios: conventional CPUs (Xeon) and wimpy CPUs (Geode).

Some caveats about the FAWN graphs:

- No consideration of access latency

- Some components or packaging options are suboptimal or not state of the art (e.g., Flash, CPU).

- It's not clear that their design points are balanced

----Flash for RAMCloud - Why not use flash instead of RAM as the main storage mechanism?

- What is flash latency?

  - SSDs:

    - Current claims for X25-E: 75 µs read, 85 µs write

  - Typical ONFI:

    - Micron MT29H8G08ACAH1, 8, 16, 32 Gb

      - Read 30 µs, Write 160 µs, Erase 3 ms

- Can it be made low enough that it doesn't impact RPC latency?

  - What is the latency of typical flash packaging today? 100 µs?

- Does flash offer advantages over disk as the backup mechanism?
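To put the latency questions above in perspective, the following sketch compares the quoted flash read latencies with the 60ns DRAM figure from the technology table. The RPC budget is a hypothetical parameter, not a number from these notes; the 10 µs used in the example is only a placeholder to show how the comparison would be made.

```python
DRAM_READ_NS = 60  # DRAM read latency from the technology table

# Flash read latencies quoted in the list above, in nanoseconds.
FLASH_READ_NS = {
    "X25-E SSD (claimed)": 75_000,
    "Micron ONFI part": 30_000,
}


def slowdown_vs_dram(flash_read_ns: float, dram_read_ns: float = DRAM_READ_NS) -> float:
    """How many times slower a flash read is than a DRAM read."""
    return flash_read_ns / dram_read_ns


def fraction_of_rpc_budget(storage_ns: float, rpc_budget_ns: float) -> float:
    """Storage latency as a fraction of an end-to-end RPC budget (> 1 means it is exceeded)."""
    return storage_ns / rpc_budget_ns


if __name__ == "__main__":
    HYPOTHETICAL_RPC_BUDGET_NS = 10_000  # assumption for illustration only
    for name, ns in FLASH_READ_NS.items():
        print(f"{name}: {slowdown_vs_dram(ns):.0f}x DRAM, "
              f"{fraction_of_rpc_budget(ns, HYPOTHETICAL_RPC_BUDGET_NS):.1f}x the assumed RPC budget")
```

Whatever budget is plugged in, the raw ONFI read time is already hundreds of times the DRAM figure, so the packaging overhead asked about above would come on top of that.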