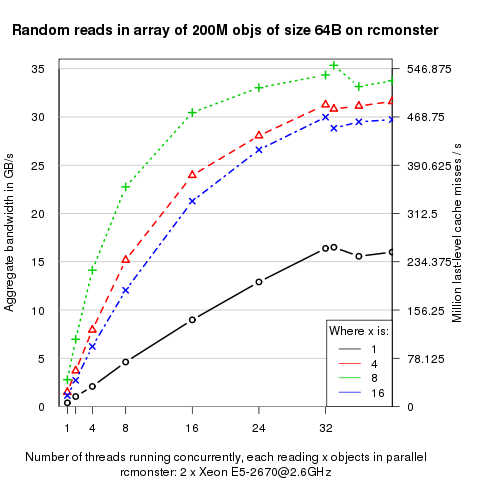

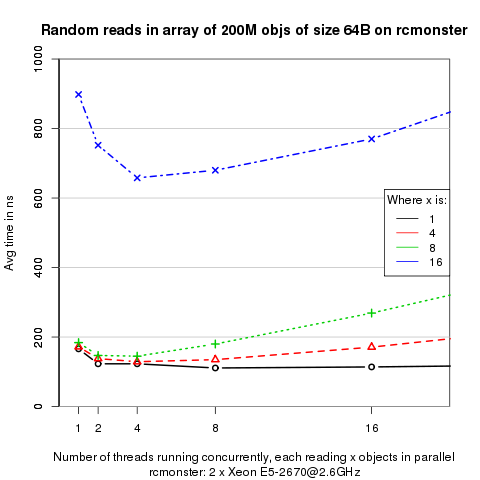

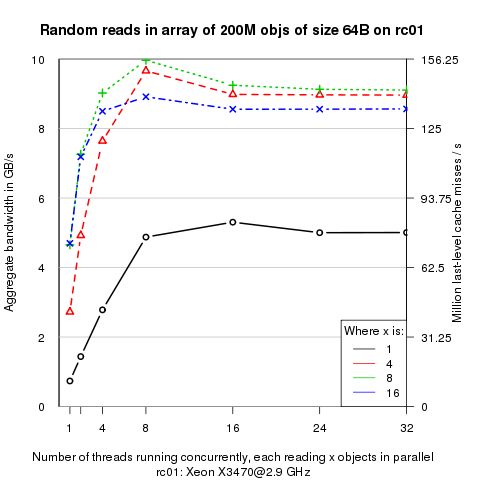

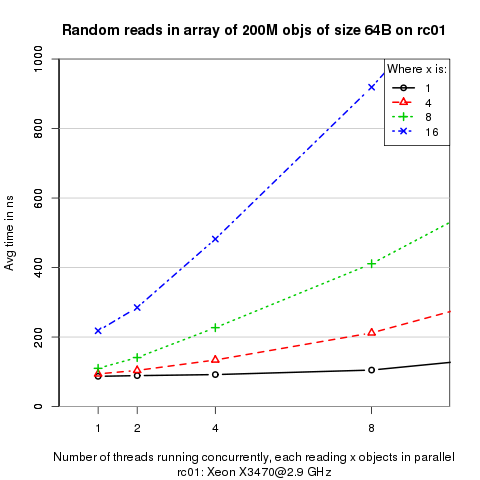

Memory benchmark for last level cache misses

We wanted to measure the rate at which a single CPU chip can service last-level cache misses.

Here's how the benchmark runs, explained in three stages:

1. One thread makes a serialized stream of random references to a very large array (more than 1 GB in total). It starts at a random location in the array; in each iteration it reads that location and uses its contents to compute the next random location to read. Each reference depends on the result of the previous one, so the references are totally serialized. Because the array is far larger than the last-level cache, each reference to the array causes a last-level cache miss.

In the following graphs, this is the leftmost black point.

2. Then the thread manages x (4, 8 and 16 in different experiments) of these serialized streams in parallel. It starts with x random locations in the array, then in each iteration through its inner loop it reads those x locations and uses their values to compute x new locations to read in the next iteration. The iterations are totally serialized as before, but the Xeon's instruction-level parallelism should allow any or all of the x references in each iteration to proceed in parallel.

In the following graphs, different lines show measurements for different values of x.

3. Then we run several of these threads concurrently.

In the following graphs, the number of concurrent threads is shown on the x-axis.
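The three stages can be sketched in C roughly as follows. This is a hypothetical reconstruction, not the original benchmark code: the names `build_chain`, `chase` and `run_threads`, the splitmix64 generator, the `MAX_THREADS` limit and the use of POSIX threads are all assumptions. In particular, the text only says that each location's contents are used to compute the next location; here the array is assumed to hold a single random cycle (Sattolo's algorithm), so that a chain cannot fall into a short loop that would end up resident in the cache.

```c
/* Sketch of the three benchmark stages (hypothetical reconstruction). */
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <pthread.h>
#include <stddef.h>
#include <stdint.h>
#include <time.h>

#define MAX_STREAMS 16 /* largest x used in the experiments */
#define MAX_THREADS 64 /* arbitrary upper bound for this sketch */

/* splitmix64: small pseudo-random generator; state is per caller, so thread-safe. */
static uint64_t splitmix64(uint64_t *state) {
    uint64_t z = (*state += 0x9E3779B97F4A7C15ULL);
    z = (z ^ (z >> 30)) * 0xBF58476D1CE4E5B9ULL;
    z = (z ^ (z >> 27)) * 0x94D049BB133111EBULL;
    return z ^ (z >> 31);
}

/* Fill a[0..n-1] so that i -> a[i] is one random cycle through all n slots
   (Sattolo's algorithm). Assumption: the original only says the next
   location is computed from the contents of the current one. */
void build_chain(uint64_t *a, size_t n, uint64_t seed) {
    assert(n > 0);
    for (size_t i = 0; i < n; i++)
        a[i] = i;
    for (size_t i = n - 1; i > 0; i--) {
        size_t j = (size_t)(splitmix64(&seed) % i); /* j < i, never j == i */
        uint64_t tmp = a[i];
        a[i] = a[j];
        a[j] = tmp;
    }
}

/* Stages 1 and 2: follow x independent chains for `iters` iterations.
   Each chain is strictly serialized (the next index is the value just
   loaded), but the x loads inside one iteration are independent, so an
   out-of-order core may overlap them. x == 1 is the stream of stage 1. */
uint64_t chase(const uint64_t *a, size_t n, size_t x, uint64_t iters, uint64_t seed) {
    uint64_t cur[MAX_STREAMS];
    assert(x >= 1 && x <= MAX_STREAMS);
    for (size_t j = 0; j < x; j++)
        cur[j] = splitmix64(&seed) % n; /* random starting location */
    for (uint64_t it = 0; it < iters; it++)
        for (size_t j = 0; j < x; j++)
            cur[j] = a[cur[j]];
    uint64_t sink = 0; /* combine final positions so the loads are not optimized away */
    for (size_t j = 0; j < x; j++)
        sink ^= cur[j];
    return sink;
}

typedef struct {
    const uint64_t *a;
    size_t n, x;
    uint64_t iters, seed, result;
} job_t;

static void *worker(void *arg) {
    job_t *job = arg;
    job->result = chase(job->a, job->n, job->x, job->iters, job->seed);
    return NULL;
}

/* Stage 3: run `threads` copies of chase() concurrently and return the
   wall-clock time in seconds (thread start-up is included in the timing). */
double run_threads(const uint64_t *a, size_t n, size_t threads, size_t x, uint64_t iters) {
    pthread_t tid[MAX_THREADS];
    job_t job[MAX_THREADS];
    struct timespec t0, t1;
    assert(threads >= 1 && threads <= MAX_THREADS);
    clock_gettime(CLOCK_MONOTONIC, &t0);
    for (size_t t = 0; t < threads; t++) {
        job[t] = (job_t){a, n, x, iters, 1000 + t, 0}; /* distinct seed per thread */
        int rc = pthread_create(&tid[t], NULL, worker, &job[t]);
        assert(rc == 0);
        (void)rc;
    }
    for (size_t t = 0; t < threads; t++)
        pthread_join(tid[t], NULL);
    clock_gettime(CLOCK_MONOTONIC, &t1);
    return (double)(t1.tv_sec - t0.tv_sec) + (double)(t1.tv_nsec - t0.tv_nsec) * 1e-9;
}
```

To approximate the described setup, one would allocate well over 1 GB (for example 2^28 eight-byte elements, i.e. 2 GiB), call `build_chain` once, and then call `run_threads` for each combination of thread count and x. Thread creation is included in the measured time, which should be negligible for runs long enough to produce stable numbers.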

On the y-axis, the first and third graphs show the bandwidth in GB/s and the number of last-level cache misses per second. The second and fourth graphs show the average time, in nanoseconds, to read x objects in parallel (that is, one iteration of the inner loop).
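The y-axis quantities can be derived from a single timed run along the lines sketched below. The function and field names are hypothetical, and the text does not say how bandwidth was computed; here it is assumed that every reference is a last-level cache miss and that each miss transfers one 64-byte cache line.

```c
/* Convert one measurement into the plotted quantities (sketch). */
#include <assert.h>
#include <stdint.h>

/* Assumption: every reference misses the LLC and each miss moves one 64-byte line. */
#define CACHE_LINE_BYTES 64.0

typedef struct {
    double misses_per_sec;   /* last-level cache misses per second */
    double gbytes_per_sec;   /* bandwidth in GB/s (10^9 bytes per second) */
    double ns_per_iteration; /* average time to read x objects in parallel */
} metrics_t;

metrics_t compute_metrics(unsigned threads, unsigned x, uint64_t iters, double seconds) {
    assert(threads > 0 && x > 0 && iters > 0 && seconds > 0.0);
    /* total references across all threads and streams */
    double misses = (double)threads * (double)x * (double)iters;
    metrics_t m;
    m.misses_per_sec = misses / seconds;
    m.gbytes_per_sec = m.misses_per_sec * CACHE_LINE_BYTES / 1e9;
    /* threads run concurrently, so each performs `iters` iterations in `seconds` */
    m.ns_per_iteration = seconds * 1e9 / (double)iters;
    return m;
}
```

As a purely hypothetical example, 2 threads with x = 4 running 10^6 iterations each in 0.1 s would give 8 × 10^7 misses/s, 5.12 GB/s under the 64-byte-line assumption, and 100 ns per iteration.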