Intel 530 Performance

This page describes an unresolved (early 2014) performance issue that Diego is having with Intel 530 SSDs. Feedback and ideas are welcome: ongaro at cs stanford edu.

Update Tue, 25 Feb 2014 00:21:06 -0800: added "Effects of Write-Caching" section.

Update Tue, 25 Feb 2014 13:12:01 -0800: added "Larger Writes" section.

Update Sat, 01 Mar 2014 19:31:34 -0800: updated "Larger Writes" section with big graph, updated microbenchmark section to link to improved benchmark code.


Summary

I'm optimizing a system in which synchronous disk writes are a key factor in performance. My current drives are too slow. I have a single Intel 520 drive that performs well enough (<1ms), but I need five drives. I bought five Intel 530 SSDs in hopes they would perform like the Intel 520, but instead they take 10ms to write a single byte to disk under various versions of Linux. Curiously, if I connect the Intel 530 drives over a USB-to-SATA adapter instead of using SATA directly, they're much faster (~200us per write). If I turn off write-caching, on some machines they take 1.6ms per write. What's wrong?

Microbenchmark

Here's the original microbenchmark, which calls write() and fdatasync() on a single byte of data 1000 times: https://gist.github.com/ongardie/9177853 I run this as "time ./bench" and divide the wall time by 1000 to get the approximate average time per write.

A much improved and extended version of the benchmark is at https://github.com/ongardie/diskbenchmark .

I normally run this on ext4. (I've tried it on a raw device as well; see the "Negative Results" section below.)
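For readers who don't want to follow the links, the core loop of the original microbenchmark can be sketched roughly as below. This is a Python approximation, not the code from the gist: the function name `time_sync_writes`, its parameters, and the choice to truncate the file first are assumptions made for illustration.

```python
import os
import time

# fdatasync() is what the benchmark uses; fall back to fsync() on
# platforms where Python does not expose fdatasync.
_sync = getattr(os, "fdatasync", os.fsync)


def time_sync_writes(path, count=1000, payload=b"x"):
    """Return the average seconds per write()+fdatasync() pair."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o644)
    try:
        start = time.perf_counter()
        for _ in range(count):
            os.write(fd, payload)  # a single byte by default
            _sync(fd)              # wait until the data is on stable storage
        elapsed = time.perf_counter() - start
    finally:
        os.close(fd)
    return elapsed / count
```

Calling `time_sync_writes("bench.dat")` on a file that lives on the drive under test gives a number comparable to "wall time divided by 1000" above, with some extra interpreter overhead that should be small next to a millisecond-scale sync.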

Machines

These are the machines I've tried. The Intel 530 over SATA takes 10ms per write on each of these, and other SSDs take <1ms per write on each of these.

| name | type | description | model | distro | kernel | SATA | USB | Purchased |
|---|---|---|---|---|---|---|---|---|
| rc66 | server | This is the machine type I'd like to use (we have 80 of them). | Colfax CX1180-X4 / Supermicro 5016TI-TF | RHEL6 | 2.6.32 | 3gbps | 2.0 | 2011 |
| rcmonster | server | This is a newer, beefier machine with Intel 520 drives, but also stuck on RHEL6. | Colfax CX1265i-X5 | RHEL6 | 2.6.32 | 6gbps | 3.0 | 2013 |
| flygecko | desktop | This is a newer desktop machine with a newer version of Linux. | | Arch | 3.12 | 3gbps | 2.0 | 2013 |
| x1 | laptop | This is a laptop with a newer version of Linux. | Lenovo Thinkpad X1 Carbon | Debian Jessie | 3.12 | mSATA 6gbps | 3.0 | 2012 |

Disks

These are the disks I've tried and their performance:

| model | qty | capacitor | performance |
|---|---|---|---|
| Crucial M4 | 160 | | 3.7ms per write on rc66 |
| Intel 320 (SSDSA2CW120G3) | 1 | yes | ~200us per write on rcmonster |
| Intel 520 (SSDSC2CW120A3) | 2 | no | <1ms per write on rc66, rcmonster (440us) |
| Intel X25-M (SSDSA2M120G2GC) | 1 | | 210us per write on flygecko |
| Intel 530 (SSDSC2BW120A4) | 5 | no | 10ms per write on rc66 (9.8ms), rcmonster (10.1ms), and flygecko (9.7ms) |
| Intel 530 attached over USB-to-SATA adapter | 1 | no | <1ms per write on rc66, rcmonster, flygecko, and x1 |
| SanDisk X100 (SD5SG2128G1052E) | 1 | | 830us per write on x1 |
| Cheap USB thumb drive | n | | 7.6ms per write on x1 |

My goal is to get the Intel 530 drives to run fast on rc66 and similar machines.

Negative Results

- The first thing I did was update from the DC22 firmware to the current DC33 firmware. No effect.

- I tried a machine with 6gbps SATA (rcmonster). No effect.

- I tried a machine with a newer kernel (flygecko). No effect.

- I tried disabling APM power saving with hdparm -B. No effect.

- I wasn't able to change the NCQ queue depth through /sys/block/sdc/device; it's stuck at 1 on flygecko and 31 on rcmonster.

- I tried changing the I/O scheduler. This shouldn't have an effect since there's only one I/O outstanding at a time. No effect.

- I tried running the benchmark on the raw block device rather than an ext4 partition. This helped but only slightly, reducing latency per write from 10ms to about 9ms.

- I tried doing bigger writes to see if they would be faster. See "Larger Writes" section below. Didn't help.
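Two of the settings in the list above, the NCQ queue depth and the I/O scheduler, are exposed through sysfs on Linux, so they can be recorded alongside each benchmark run. The sketch below is only an illustration: the function name `read_block_settings` and the `sysfs_root` parameter are hypothetical, and it assumes the usual `device/queue_depth` and `queue/scheduler` files are present for the device.

```python
import os


def read_block_settings(dev, sysfs_root="/sys/block"):
    """Return queue depth and scheduler for a block device such as 'sdc'.

    A value is None when the corresponding sysfs file cannot be read.
    """
    paths = {
        "queue_depth": os.path.join(sysfs_root, dev, "device", "queue_depth"),
        "scheduler": os.path.join(sysfs_root, dev, "queue", "scheduler"),
    }
    settings = {}
    for name, path in paths.items():
        try:
            with open(path) as f:
                settings[name] = f.read().strip()
        except OSError:
            settings[name] = None
    return settings
```

For example, `read_block_settings("sdc")` returns a dictionary with both values as strings; in the scheduler string the kernel marks the active scheduler with square brackets.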

Additional Questions

- One of the differences between the Intel 520 and Intel 530 is that the 530 does more aggressive power saving. Is there a way to turn that off?

- Are the software paths under Linux for the Intel 520 and the Intel 530 identical?

- Would Intel be willing to trade my five 530s in exchange for five 520s or similarly performing drives?

blktrace measurements

I ran blktrace while running the benchmark on flygecko with the Intel X25-M and the Intel 530 SSDs. Here's the summary produced by btt:

Intel 530:

ALL MIN AVG MAX N

--- ----------- ----------- ----------- -----

Q2Q 0.000000943 0.010212746 0.736672437 1015

Q2G 0.000001300 0.000002340 0.000022269 16144

G2I 0.000001200 0.000002133 0.000020969 16144

Q2M 0.000000173 0.000000526 0.000000858 112

I2D 0.000006150 0.000068040 0.023831449 16144

M2D 0.000014449 0.000021722 0.000026137 112

D2C 0.000099355 0.002885890 0.160061067 1016

Q2C 0.000125742 0.002958056 0.160093994 1016

Intel X25-M:

ALL MIN AVG MAX N

--- ----------- ----------- ----------- -----

Q2Q 0.000001345 0.000214151 0.000508142 1015

Q2G 0.000000668 0.000001025 0.000005047 16080

G2I 0.000000720 0.000000966 0.000019082 16080

Q2M 0.000000237 0.000000374 0.000000968 176

I2D 0.000000320 0.000003889 0.000020007 16080

M2D 0.000015045 0.000021314 0.000028772 176

D2C 0.000078657 0.000084969 0.000281596 1016

Q2C 0.000083605 0.000091020 0.000317568 1016

I'm no blktrace expert, but I think the most interesting numbers are D2C (driver to completion) and Q2Q (queue to queue). It's safe to focus on just the average numbers (I looked at a plot of the D2C latencies and another of the per-write times in userspace, and both have little variation).

With the Intel 530, it looks like each write takes the driver and device 2.9ms to complete, but does not complete end-to-end for 10ms. With the Intel X25-M, each write takes the driver and device 85us to complete, and this completes end-to-end in 214us.

So that raises two questions: why does the Intel 530 take so much longer in the driver/device, and why does it take even longer end-to-end?
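To make the second question concrete, the per-write time that is not accounted for by D2C can be computed directly from the averages in the btt summaries. This is just arithmetic on the numbers above; treating Q2Q as the end-to-end time per write is the same approximation used in the text, and the names in the snippet are made up for illustration.

```python
# Average latencies in seconds, copied from the btt summaries above.
btt_avg = {
    "Intel 530": {"Q2Q": 0.010212746, "D2C": 0.002885890},
    "Intel X25-M": {"Q2Q": 0.000214151, "D2C": 0.000084969},
}


def time_outside_d2c(stats):
    """Seconds per write not explained by driver + device time (D2C)."""
    return stats["Q2Q"] - stats["D2C"]


for name, stats in btt_avg.items():
    gap = time_outside_d2c(stats)
    print(f"{name}: D2C {stats['D2C'] * 1e3:.3f} ms, "
          f"Q2Q {stats['Q2Q'] * 1e3:.3f} ms, "
          f"unexplained {gap * 1e3:.3f} ms")
```

By this measure the Intel 530 spends about 7.3ms per write outside of D2C, compared with about 0.13ms for the X25-M.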

Effects of Write-Caching

Tom Lyon suggested I try to enable write-caching (https://twitter.com/aka_pugs/status/438148846476996608). Write-caching was already enabled on all the machines I tried, but disabling it had some interesting effects. I used hdparm -W 0 /dev/sdx to disable write-caching.

| host | disk | write caching off | write caching on |

|---|---|---|---|

| rcmonster | 520 | 100us per write | 440us per write |

| rcmonster | 530 | 10.4ms per write | 10.1ms per write |

| rc66 | M4 | 1.5ms per write | 2.7ms per write |

| rc66 | 530 | 1.7ms per write | 9.8ms per write |

| flygecko | X25-M | 1.1ms per write | 210us per write |

| flygecko | 530 | 1.5ms per write | 9.5ms per write |

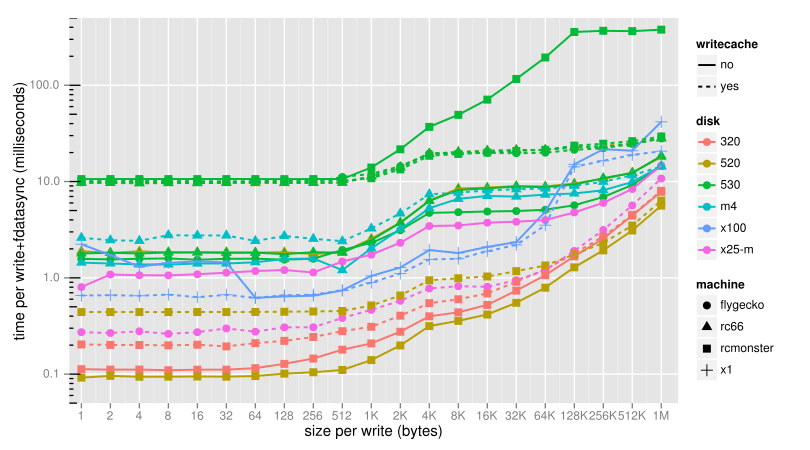

Each drive seems to behave differently. For the 530, disabling write-caching seems to have no effect on rcmonster but improves latency for one-byte writes by a factor of six on rc66 and flygecko. This opens up a lot of questions... See also the "Larger Writes" section below.
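The size of the effect is easier to compare across drives as a ratio. The snippet below recomputes it from the table above, with all values converted to microseconds; the variable and function names are illustrative only.

```python
# (host, disk, write caching off, write caching on), in microseconds,
# taken from the table above.
rows = [
    ("rcmonster", "520", 100, 440),
    ("rcmonster", "530", 10400, 10100),
    ("rc66", "M4", 1500, 2700),
    ("rc66", "530", 1700, 9800),
    ("flygecko", "X25-M", 1100, 210),
    ("flygecko", "530", 1500, 9500),
]


def on_over_off(off_us, on_us):
    """How many times slower a write is with caching on than off."""
    return on_us / off_us


for host, disk, off_us, on_us in rows:
    ratio = on_over_off(off_us, on_us)
    print(f"{host:10s} {disk:6s} on/off = {ratio:.2f}")
```

A ratio above 1 means the drive was faster with write-caching disabled. For the 530 the ratio is close to 1 on rcmonster and roughly 6 on rc66 and flygecko, while the X25-M goes the other way.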

Larger Writes

Many people have suggested that writing 1 byte is not efficient and writing more bytes should be faster. I tried it both with write-caching on and off.

The writes were done at 0 and 512 byte offsets into the file, and the whole experiment was repeated 5 times. The best time is shown for each point. Each data point represents only a small number of writes, though, so the error may be high. Also, the "x1" machine is my laptop, so it wasn't always idle.
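The procedure described above can be sketched as follows. This is not the code from the diskbenchmark repository: the function name `best_write_time`, the default of 10 writes per trial, and the fill byte are assumptions, since the text only says that each point is the best of 5 repetitions of a small number of writes.

```python
import os
import time

# Prefer fdatasync(); fall back to fsync() where it is unavailable.
_sync = getattr(os, "fdatasync", os.fsync)


def best_write_time(path, size, offset, trials=5, writes_per_trial=10):
    """Best (minimum) average seconds per synced write across trials."""
    data = b"\0" * size
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o644)
    try:
        best = float("inf")
        for _ in range(trials):
            start = time.perf_counter()
            for _ in range(writes_per_trial):
                os.pwrite(fd, data, offset)  # always rewrite the same range
                _sync(fd)
            avg = (time.perf_counter() - start) / writes_per_trial
            best = min(best, avg)
    finally:
        os.close(fd)
    return best
```

Calling this for each write size at offsets 0 and 512 yields one data point per combination. Taking the minimum rather than the mean reduces the influence of background activity, which matters on a machine that isn't always idle, but with so few writes per trial the result can still be noisy.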