RPC Latency Profile

RAMCloud Benchmarks

A single backup operation (ClusterPerf with 100-byte writes, 1 master, 3 backups). For each operation, a timeline of events was logged. Not all timelines had the same "shape", as not all backup operations are handled by the same sequence of events. Thus, the most common timeline "shape" was chosen, and the details below present statistics about the most common timeline shape. This procedure was done for both the backup and the master. Based on source tree at commit 8f30e4e6d84fc2a13578ac0c92c84065047c0581.

On the Master

Averaged over 1912 same-shape timelines.

0 us --- Begin backup (BackupManager::sync()) | | 1.8 us --- First write RPC sent out | | 3.2 us --- Second write RPC sent out | | 4.4 us --- Third write RPC sent out | | | [~ 4 us "dead time"] | | 8.2 us --- First write RPC completes (duration: 6.4 us) | 9.8 us --- Second write RPC completes (duration: 6.6 us) | 10.7 us --- Third write RPC completes (duration: 6.3 us) 10.8 us --- End backup

Major time sinks in issue path

- Acquiring Dispatch::Lock in TransportManager::WorkerSession::clientSend for every write RPC

- Cost: 3 x ~300ns

- InfRcTransport<Infiniband>::getTransmitBuffer(): waiting for free tx buffer for every write RPC

- Cost: 3 x ~200ns (first write RPC more expensive than 2nd and 3rd)

- Calling into Infiniband transport: postSendZeroCopy (unavoidable?)

- Cost: 3 x ~400ns (first write RPC more expensive than 2nd and 3rd)

More detailed timeline

Below is a more detailed version of the timeline above which presents information about each and every recorded event in the code path:

( Cumulative ) | Delta | [ Std. Dev. ] Event Description ( Min Mean Max ) | Min Mean Max | [ ] ( ) | | [ ] ( 0.0 ns 0.0 ns 0.0 ns ) | 0.0 ns 0.0 ns 0.0 ns | [ 0.0 ns ] Invoking sync() to backups ( 90.0 ns 139.0 ns 531.5 ns ) | 90.0 ns 139.0 ns 531.5 ns | [ 35.0 ns ] Invoking Send 'write segment' RPC ( 202.5 ns 280.2 ns 783.4 ns ) | 112.5 ns 141.1 ns 251.9 ns | [ 12.7 ns ] Invoking client.send() ( 218.5 ns 296.7 ns 802.8 ns ) | 16.0 ns 16.5 ns 19.4 ns | [ 0.8 ns ] Invoking session->clientSend() ( 441.4 ns 605.4 ns 5.4 us ) | 222.9 ns 308.8 ns 4.6 us | [ 119.6 ns ] Acquired Dispatch::Lock in TransportManager::WorkerSession::clientSend ( 497.0 ns 708.3 ns 5.6 us ) | 55.6 ns 102.8 ns 183.4 ns | [ 16.1 ns ] Invoking Instantiate new ClientRpc ( 566.9 ns 841.6 ns 16.9 us ) | 69.9 ns 133.4 ns 11.3 us | [ 256.0 ns ] Completed Instantiate new ClientRpc ( 583.9 ns 862.9 ns 16.9 us ) | 17.0 ns 21.3 ns 45.0 ns | [ 2.3 ns ] Invoking rpc->sendOrQueue ( 891.4 ns 1.3 us 21.4 us ) | 307.5 ns 387.9 ns 4.5 us | [ 97.0 ns ] Invoking postSendZeroCopy ( 1.3 us 1.8 us 22.6 us ) | 455.4 ns 587.0 ns 1.2 us | [ 47.2 ns ] Completed postSendZeroCopy ( 1.5 us 2.0 us 31.6 us ) | 127.5 ns 196.4 ns 9.0 us | [ 202.4 ns ] Completed rpc->sendOrQueue ( 1.5 us 2.1 us 31.7 us ) | 54.5 ns 73.2 ns 94.4 ns | [ 2.8 ns ] Completed session->clientSend() ( 1.5 us 2.1 us 31.7 us ) | 16.0 ns 16.9 ns 20.5 ns | [ 0.8 ns ] Completed client.send() ( 1.6 us 2.1 us 31.8 us ) | 16.0 ns 21.8 ns 35.5 ns | [ 2.3 ns ] Completed Send 'write segment' RPC ( 1.6 us 2.2 us 32.0 us ) | 37.5 ns 50.6 ns 224.0 ns | [ 15.5 ns ] Invoking Send 'write segment' RPC ( 1.7 us 2.3 us 32.2 us ) | 75.0 ns 98.3 ns 148.0 ns | [ 9.2 ns ] Invoking client.send() ( 1.7 us 2.3 us 32.2 us ) | 16.0 ns 16.6 ns 19.4 ns | [ 0.8 ns ] Invoking session->clientSend() ( 1.9 us 2.6 us 35.7 us ) | 175.9 ns 307.6 ns 3.5 us | [ 143.2 ns ] Acquired Dispatch::Lock in TransportManager::WorkerSession::clientSend ( 1.9 us 2.7 us 35.7 us ) | 33.4 ns 45.3 ns 70.9 ns | [ 1.3 ns ] Invoking Instantiate new ClientRpc ( 1.9 us 2.7 us 35.8 us ) | 45.0 ns 49.1 ns 78.4 ns | [ 4.3 ns ] Completed Instantiate new ClientRpc ( 2.0 us 2.7 us 35.8 us ) | 16.0 ns 16.6 ns 20.5 ns | [ 0.8 ns ] Invoking rpc->sendOrQueue ( 2.1 us 2.8 us 36.1 us ) | 95.1 ns 114.6 ns 270.0 ns | [ 9.4 ns ] Invoking postSendZeroCopy ( 2.3 us 3.2 us 40.9 us ) | 288.1 ns 334.2 ns 4.8 us | [ 104.3 ns ] Completed postSendZeroCopy ( 2.4 us 3.2 us 41.0 us ) | 53.5 ns 58.3 ns 107.4 ns | [ 4.6 ns ] Completed rpc->sendOrQueue ( 2.4 us 3.3 us 41.1 us ) | 52.5 ns 71.7 ns 90.0 ns | [ 3.0 ns ] Completed session->clientSend() ( 2.5 us 3.3 us 41.1 us ) | 16.0 ns 16.3 ns 30.0 ns | [ 0.6 ns ] Completed client.send() ( 2.5 us 3.3 us 41.2 us ) | 16.0 ns 22.5 ns 64.1 ns | [ 3.7 ns ] Completed Send 'write segment' RPC ( 2.5 us 3.4 us 41.3 us ) | 29.0 ns 44.6 ns 69.5 ns | [ 6.5 ns ] Invoking Send 'write segment' RPC ( 2.6 us 3.5 us 41.4 us ) | 69.5 ns 88.6 ns 148.0 ns | [ 10.8 ns ] Invoking client.send() ( 2.6 us 3.5 us 41.5 us ) | 16.0 ns 19.2 ns 35.5 ns | [ 3.3 ns ] Invoking session->clientSend() ( 2.8 us 3.8 us 45.5 us ) | 167.0 ns 302.3 ns 4.0 us | [ 177.7 ns ] Acquired Dispatch::Lock in TransportManager::WorkerSession::clientSend ( 2.8 us 3.8 us 45.5 us ) | 21.5 ns 30.0 ns 68.5 ns | [ 5.0 ns ] Invoking Instantiate new ClientRpc ( 2.8 us 3.9 us 45.6 us ) | 42.6 ns 52.9 ns 109.4 ns | [ 6.1 ns ] Completed Instantiate new ClientRpc ( 2.8 us 3.9 us 45.7 us ) | 16.0 ns 16.5 ns 62.0 ns | [ 1.3 ns ] Invoking rpc->sendOrQueue ( 3.0 us 4.0 us 46.0 us ) | 119.0 ns 146.3 ns 259.4 ns | [ 9.2 ns ] Invoking postSendZeroCopy ( 3.3 us 4.4 us 50.9 us ) | 311.9 ns 368.3 ns 4.9 us | [ 147.6 ns ] Completed postSendZeroCopy ( 3.3 us 4.5 us 59.8 us ) | 53.5 ns 60.1 ns 8.9 us | [ 202.2 ns ] Completed rpc->sendOrQueue ( 3.4 us 4.5 us 59.9 us ) | 52.5 ns 56.5 ns 101.9 ns | [ 4.9 ns ] Completed session->clientSend() ( 3.4 us 4.5 us 59.9 us ) | 16.0 ns 16.7 ns 39.5 ns | [ 1.0 ns ] Completed client.send() ( 3.4 us 4.6 us 59.9 us ) | 16.0 ns 34.4 ns 43.0 ns | [ 8.4 ns ] Completed Send 'write segment' RPC ( 4.0 us 8.2 us 68.4 us ) | 581.9 ns 3.6 us 8.5 us | [ 714.2 ns ] Reaped outstanding RPC ( 4.3 us 9.8 us 116.3 us ) | 287.0 ns 1.6 us 47.9 us | [ 1.4 us ] Reaped outstanding RPC ( 4.6 us 10.7 us 121.5 us ) | 276.5 ns 959.9 ns 5.2 us | [ 646.2 ns ] Reaped outstanding RPC ( 4.6 us 10.8 us 126.1 us ) | 48.1 ns 60.2 ns 4.6 us | [ 104.0 ns ] Completed sync() to backups

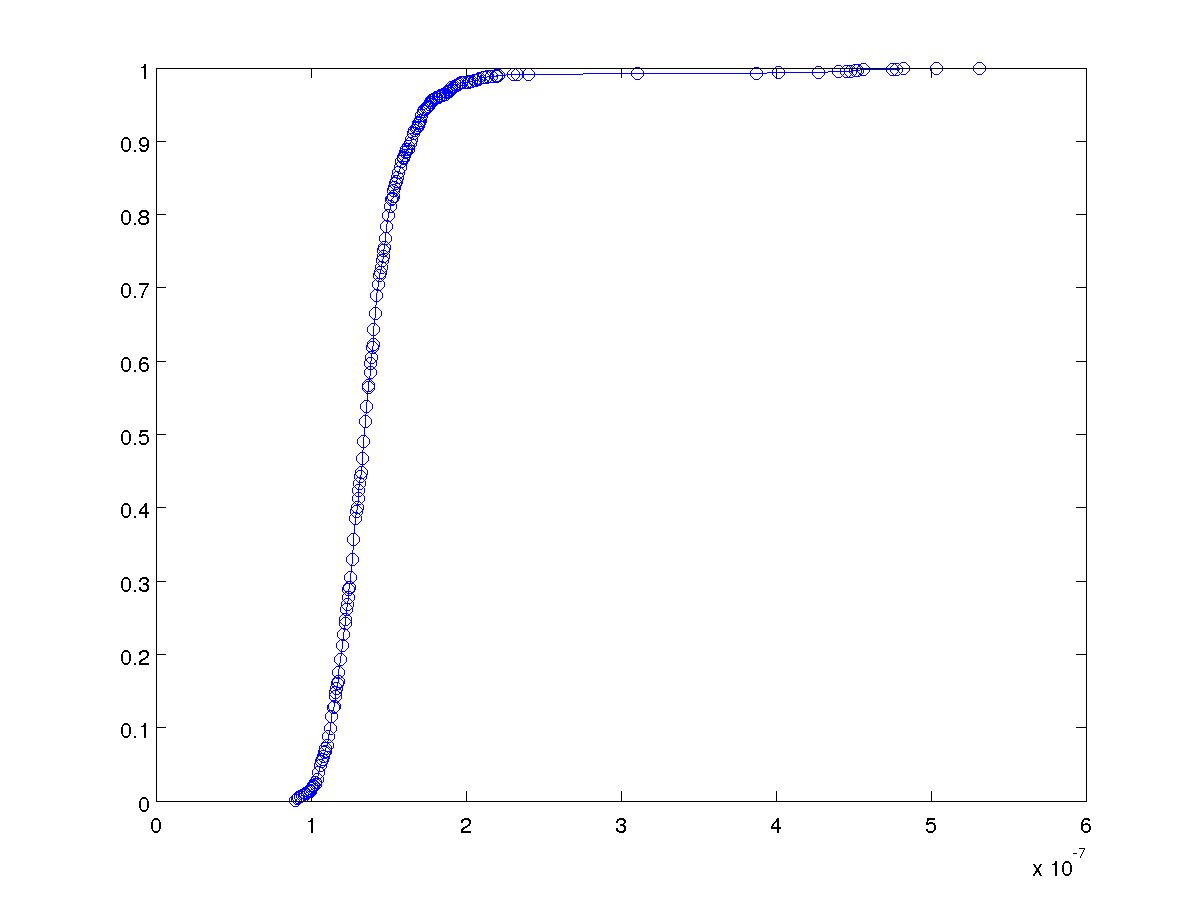

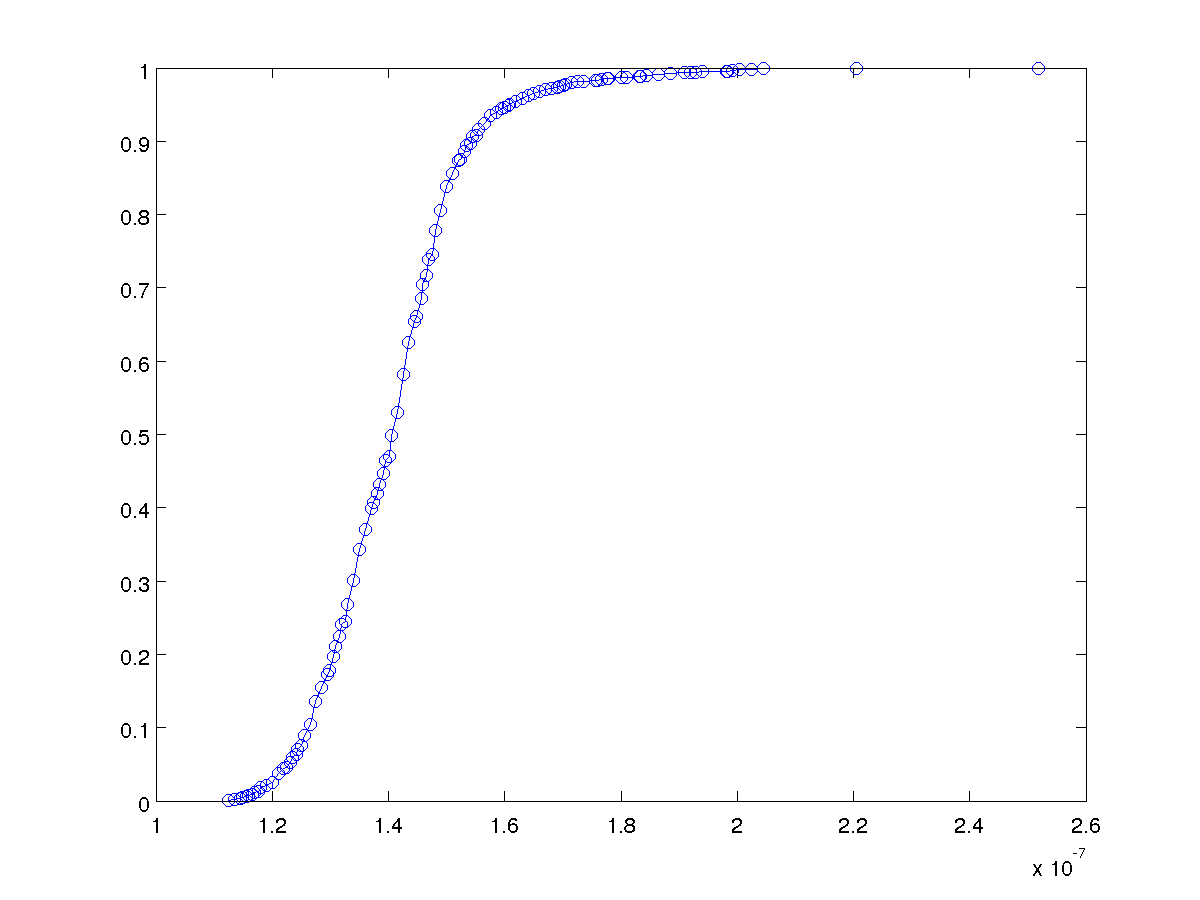

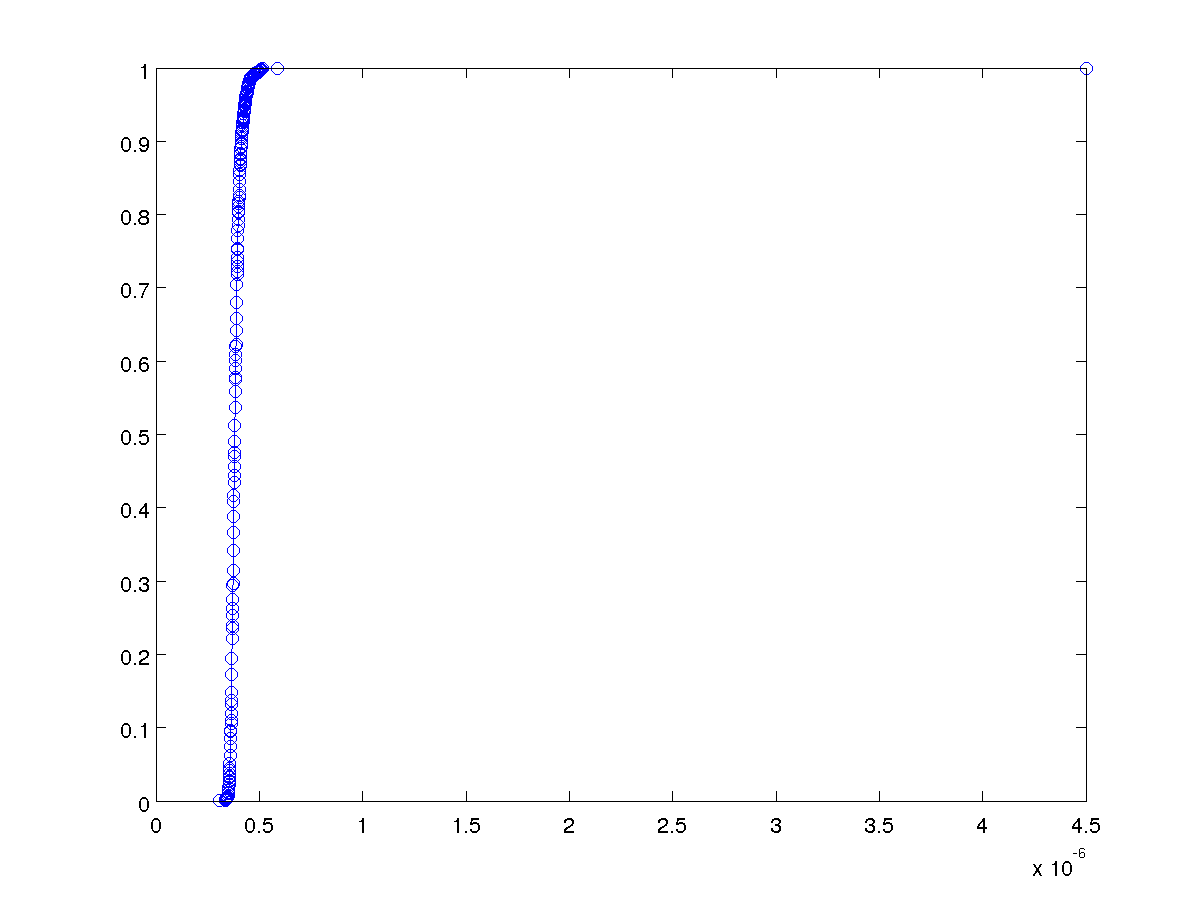

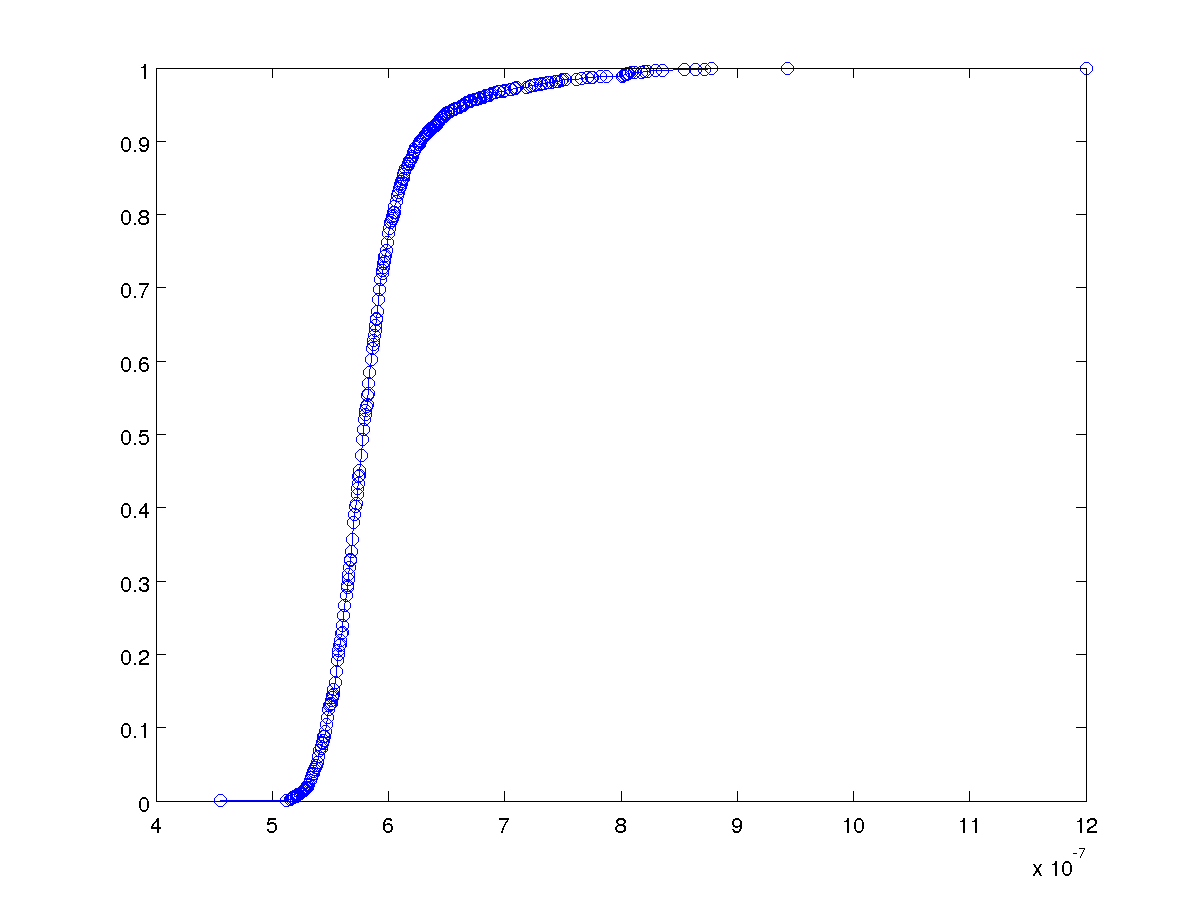

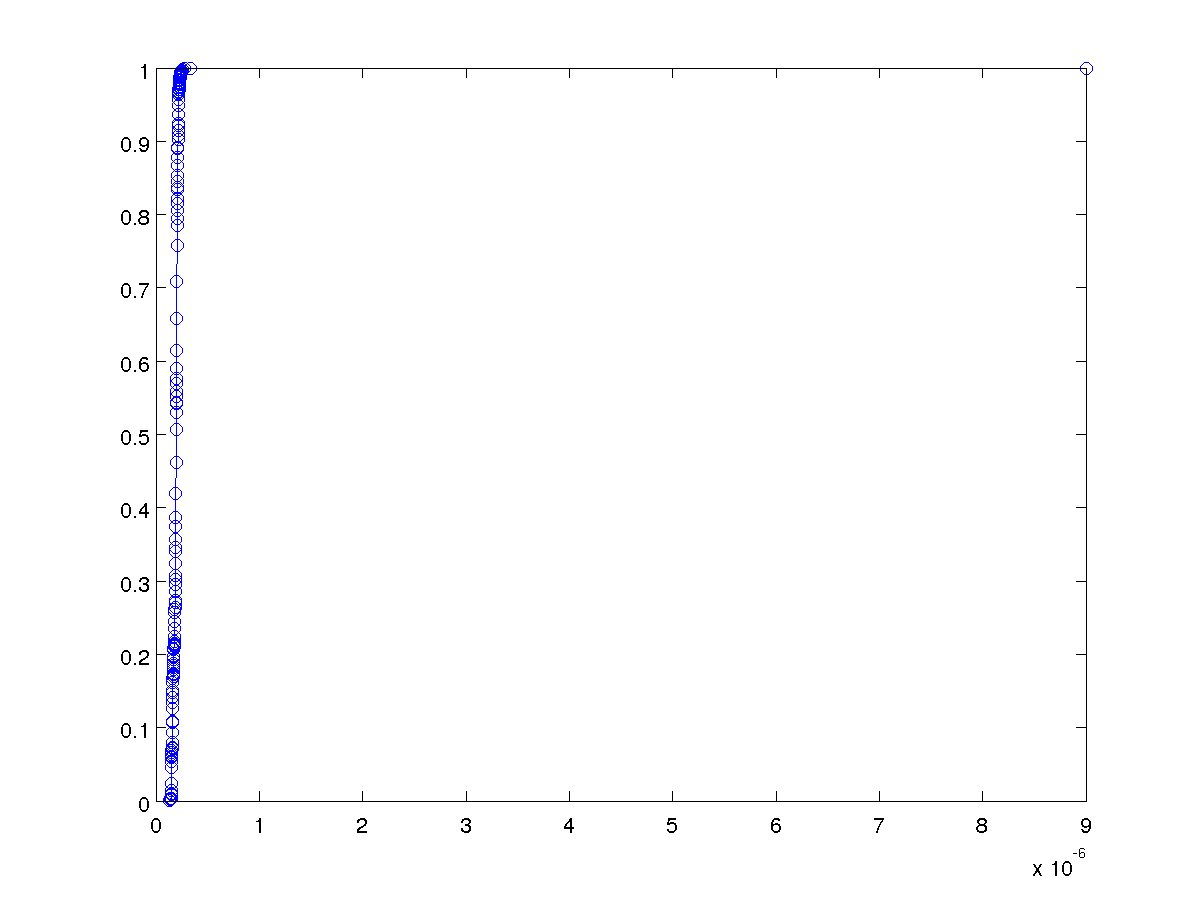

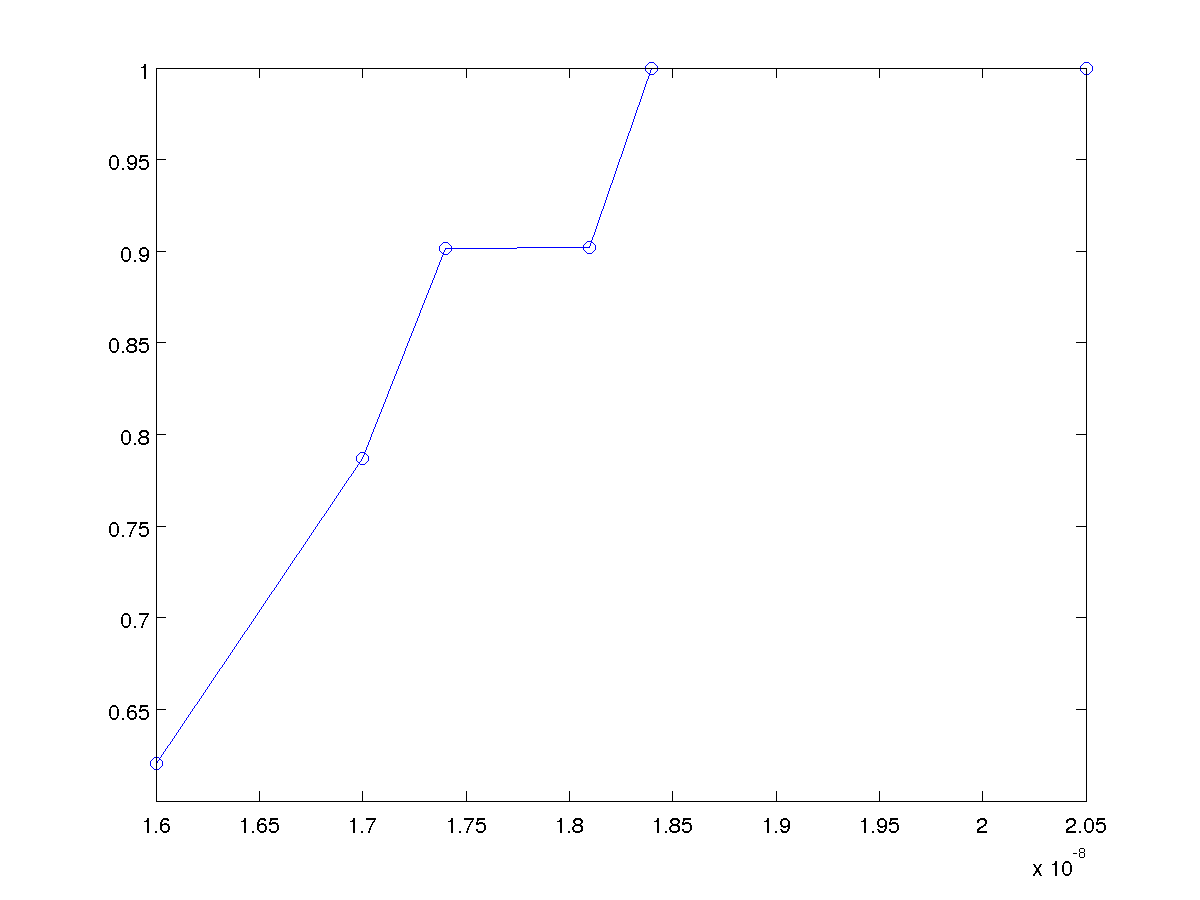

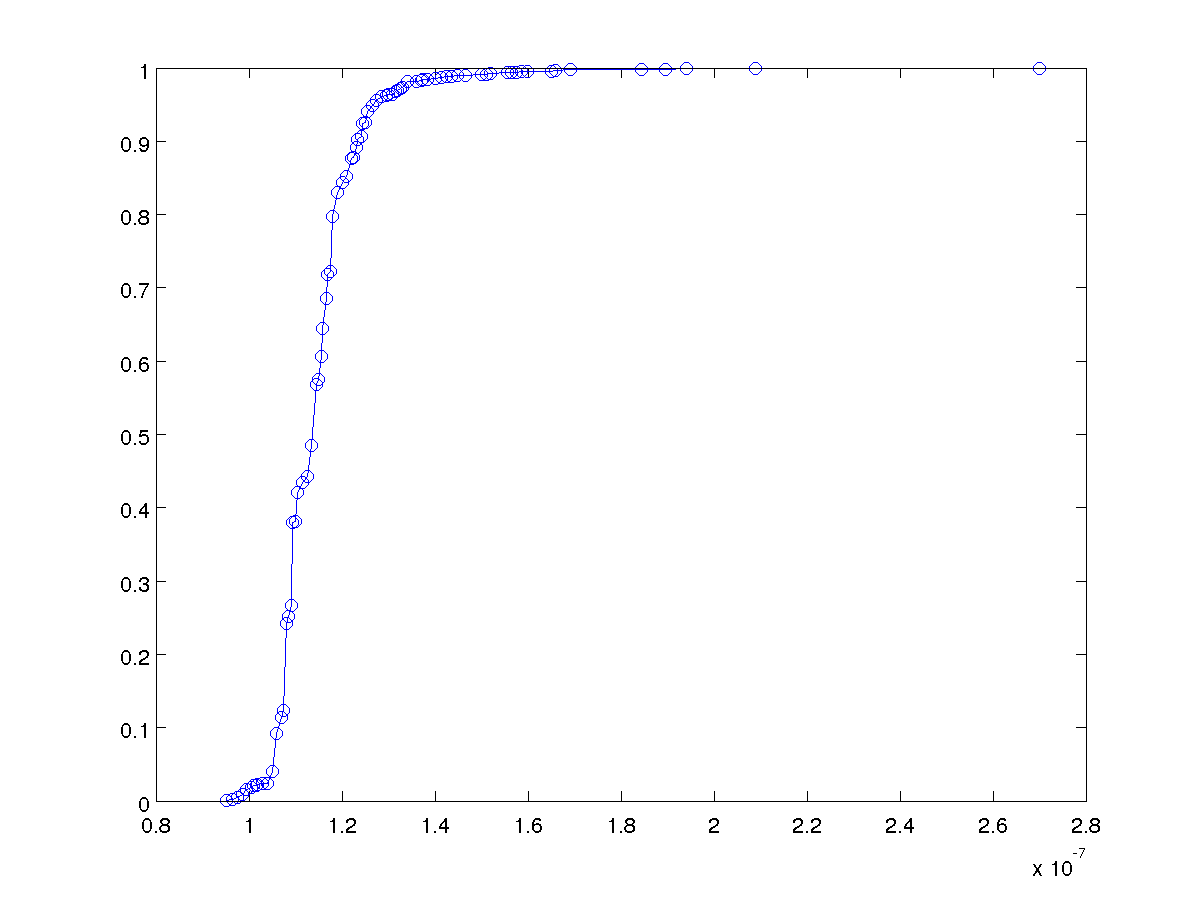

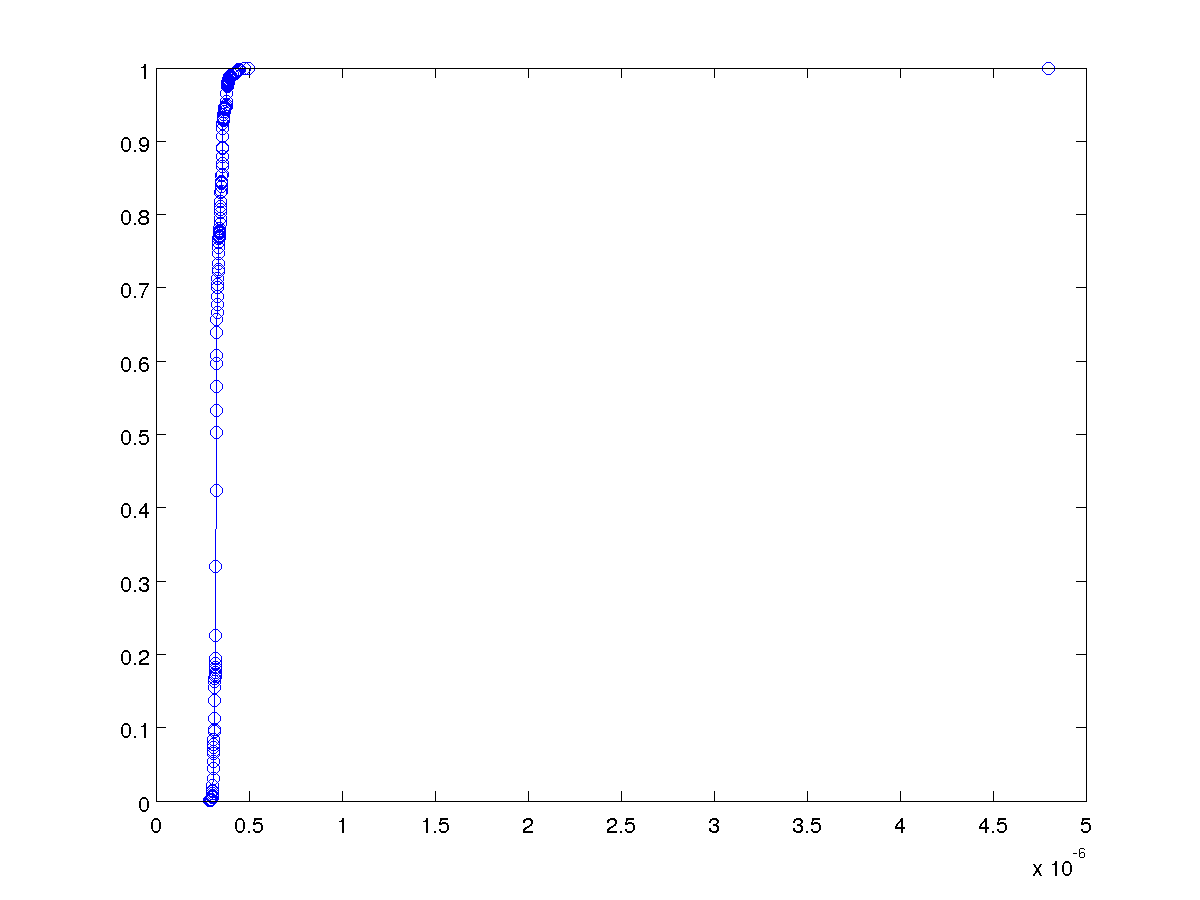

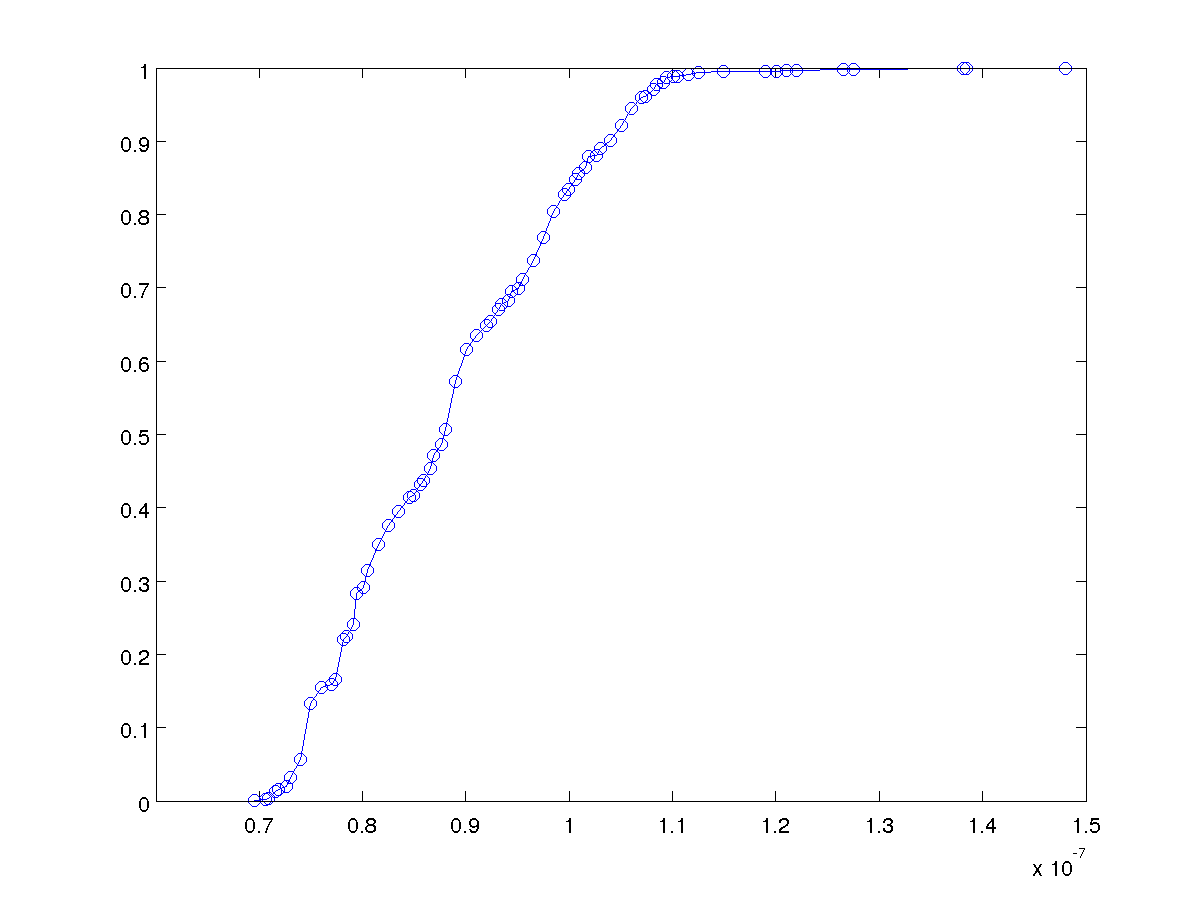

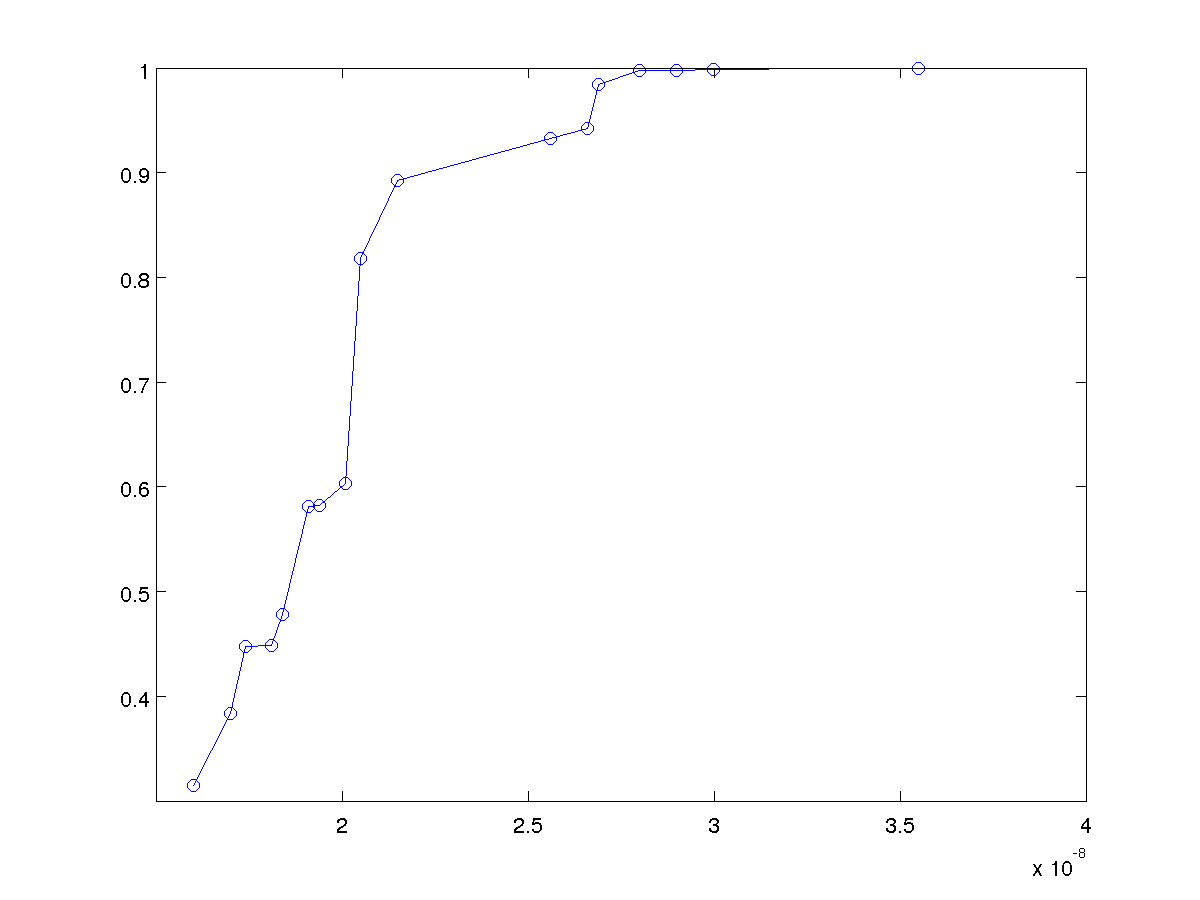

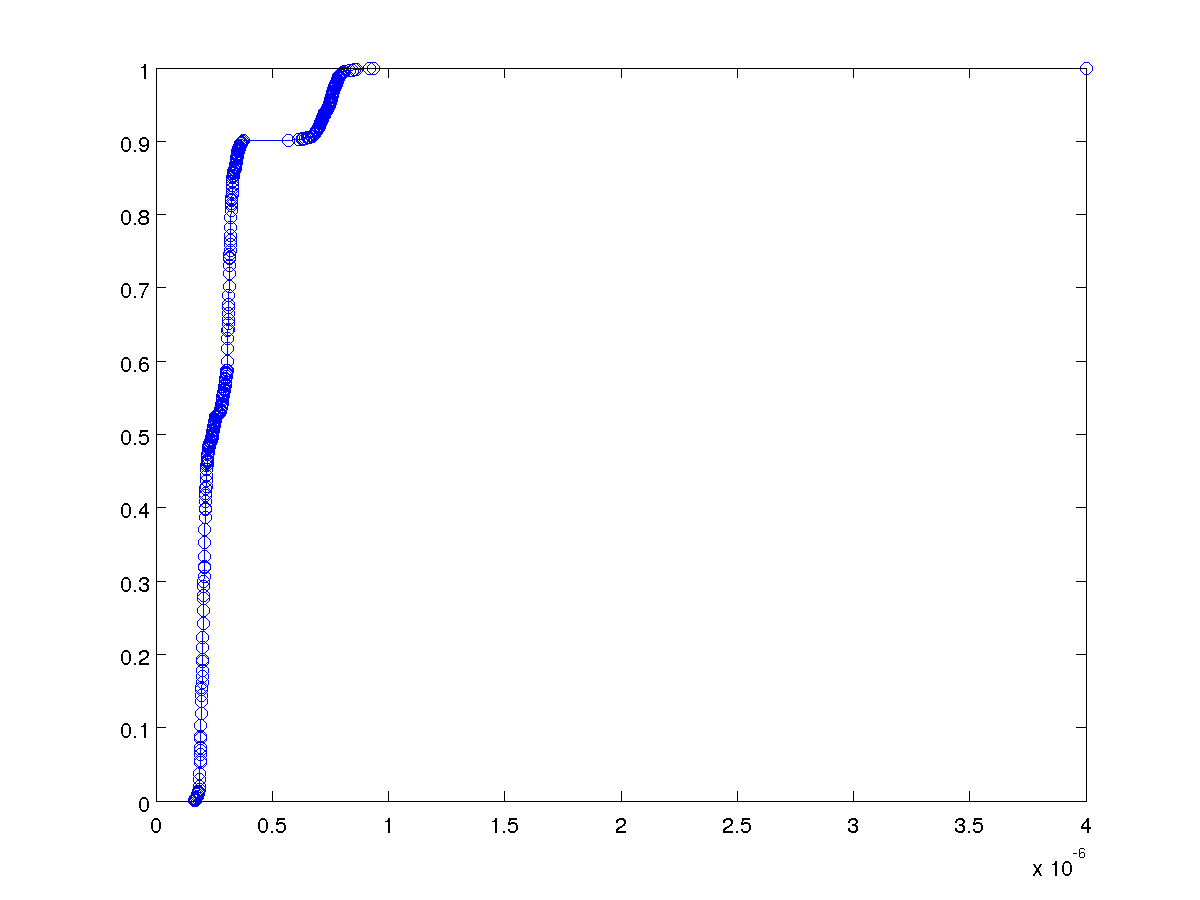

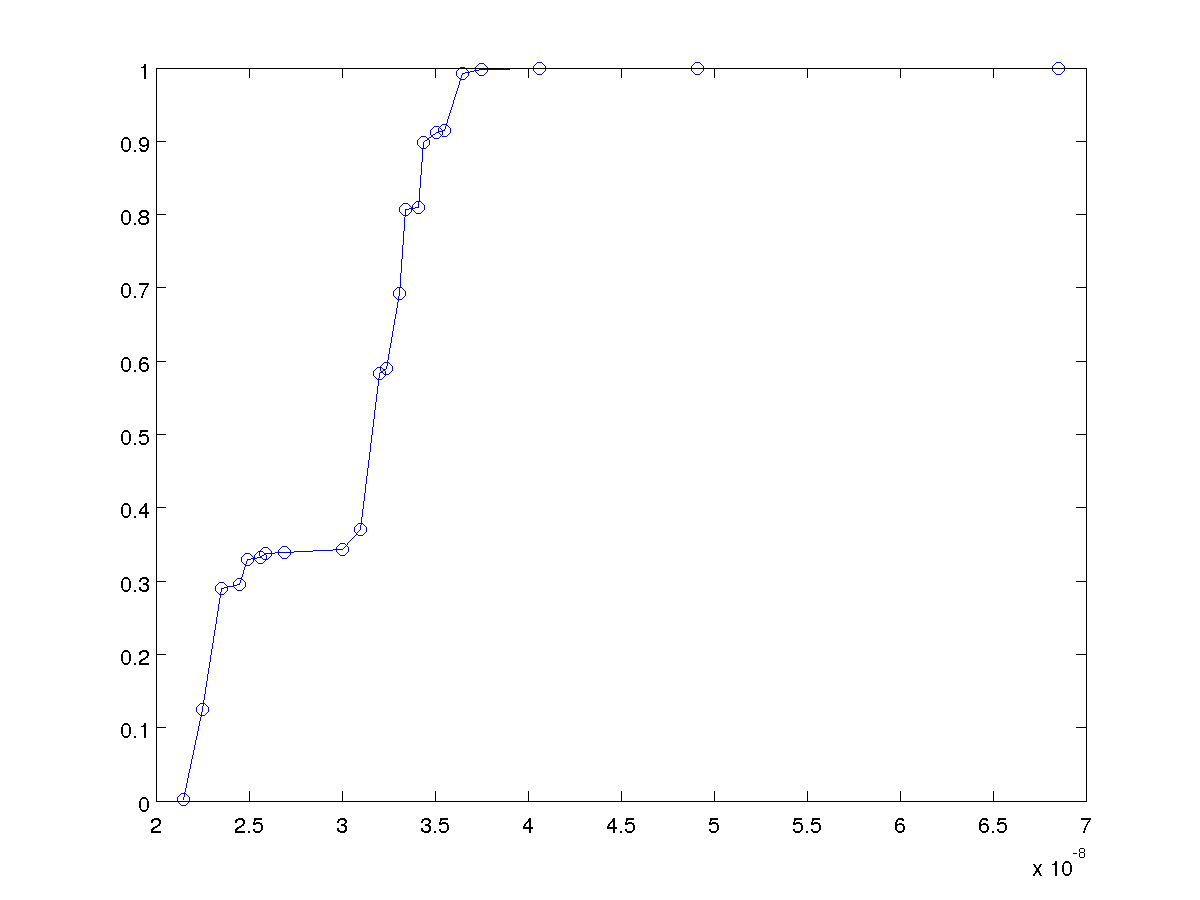

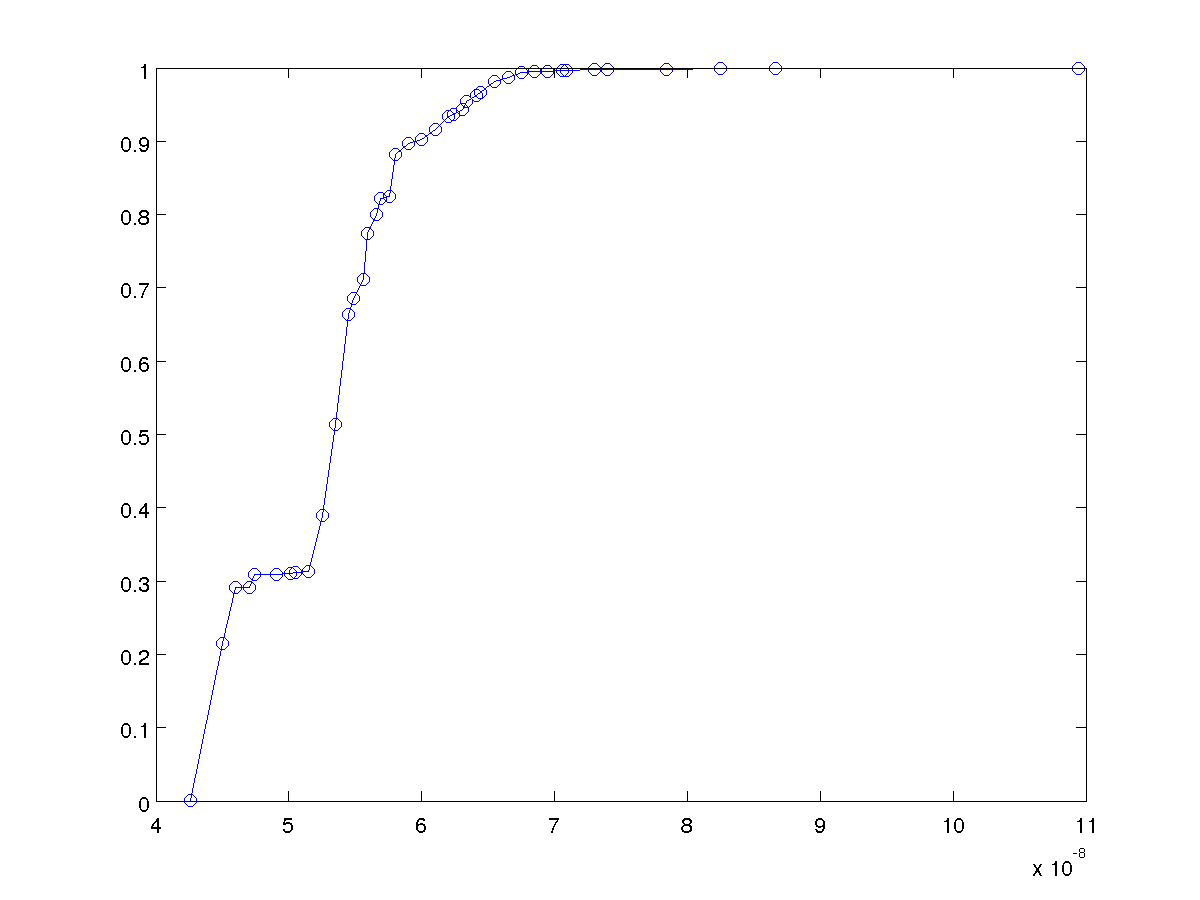

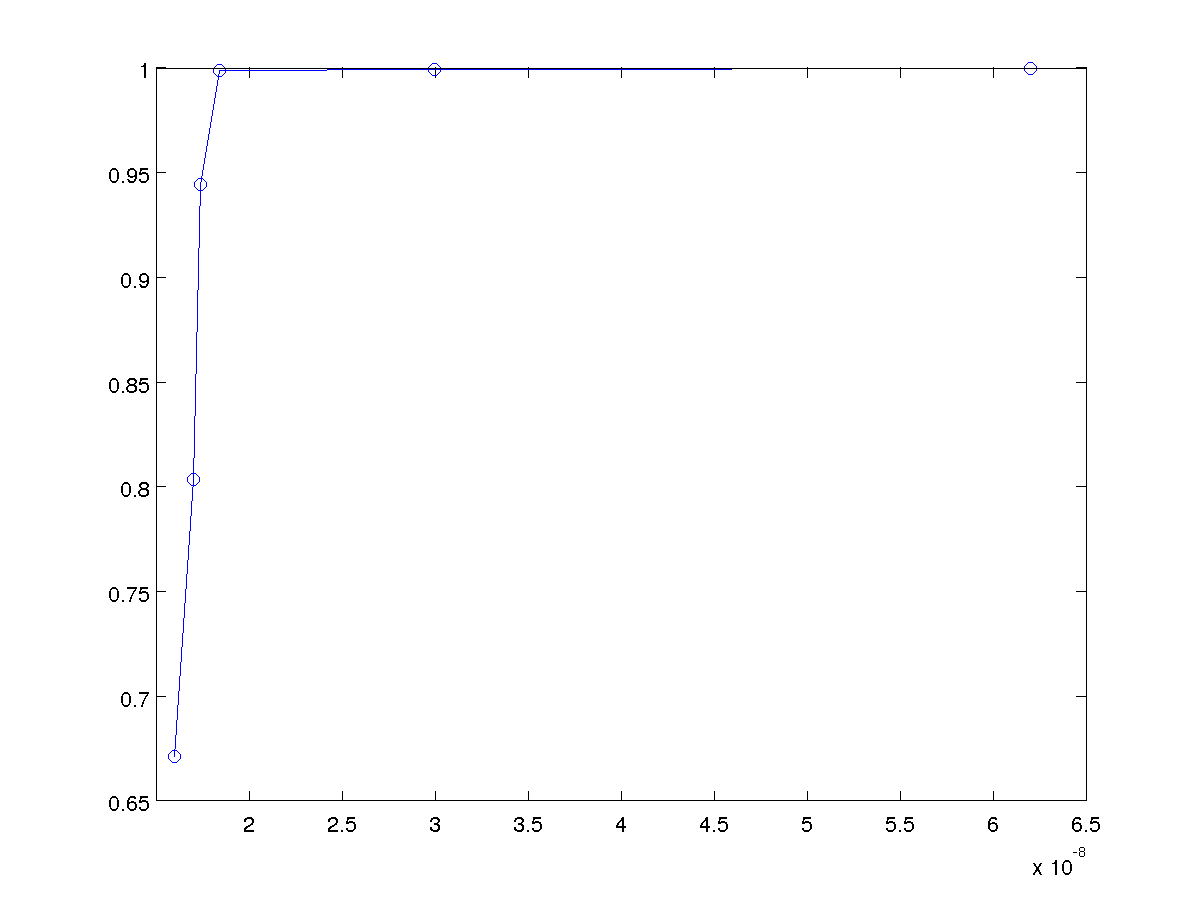

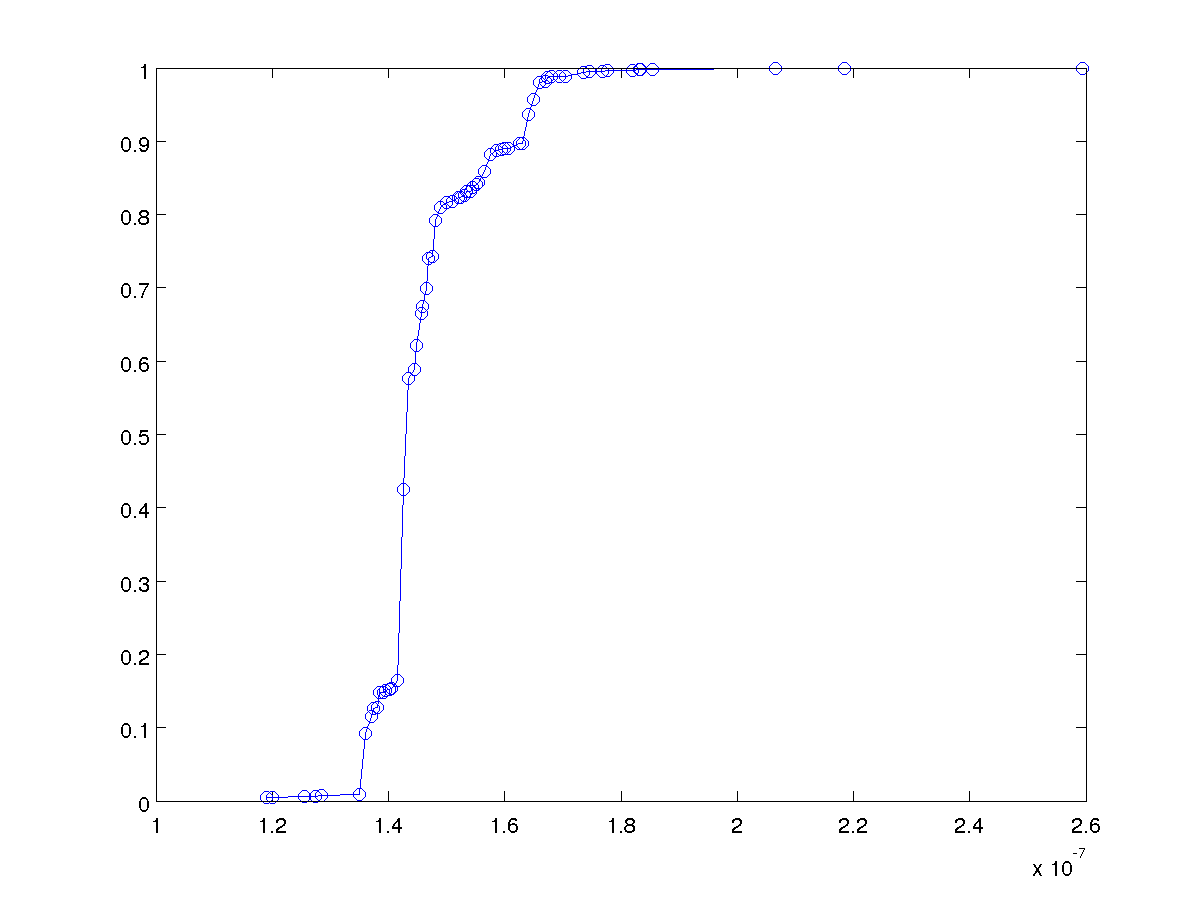

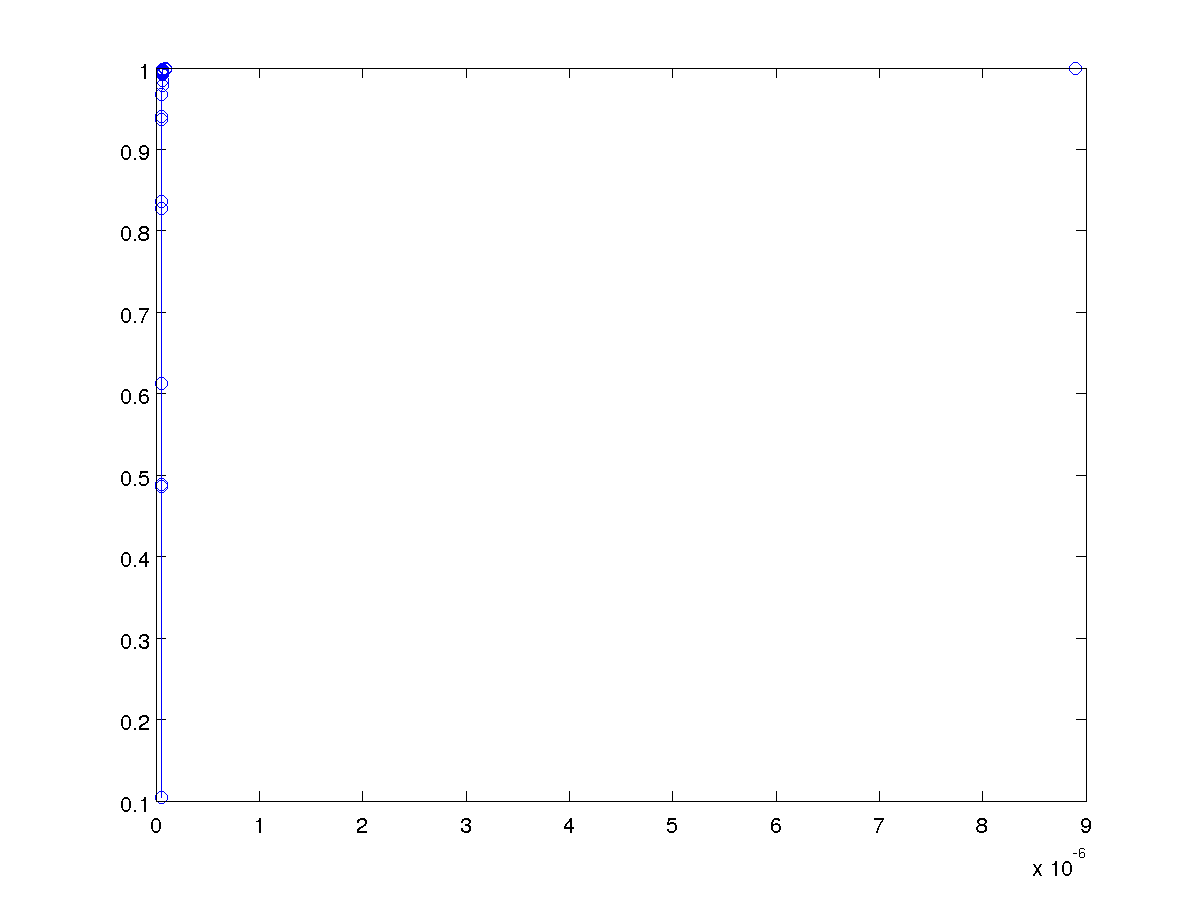

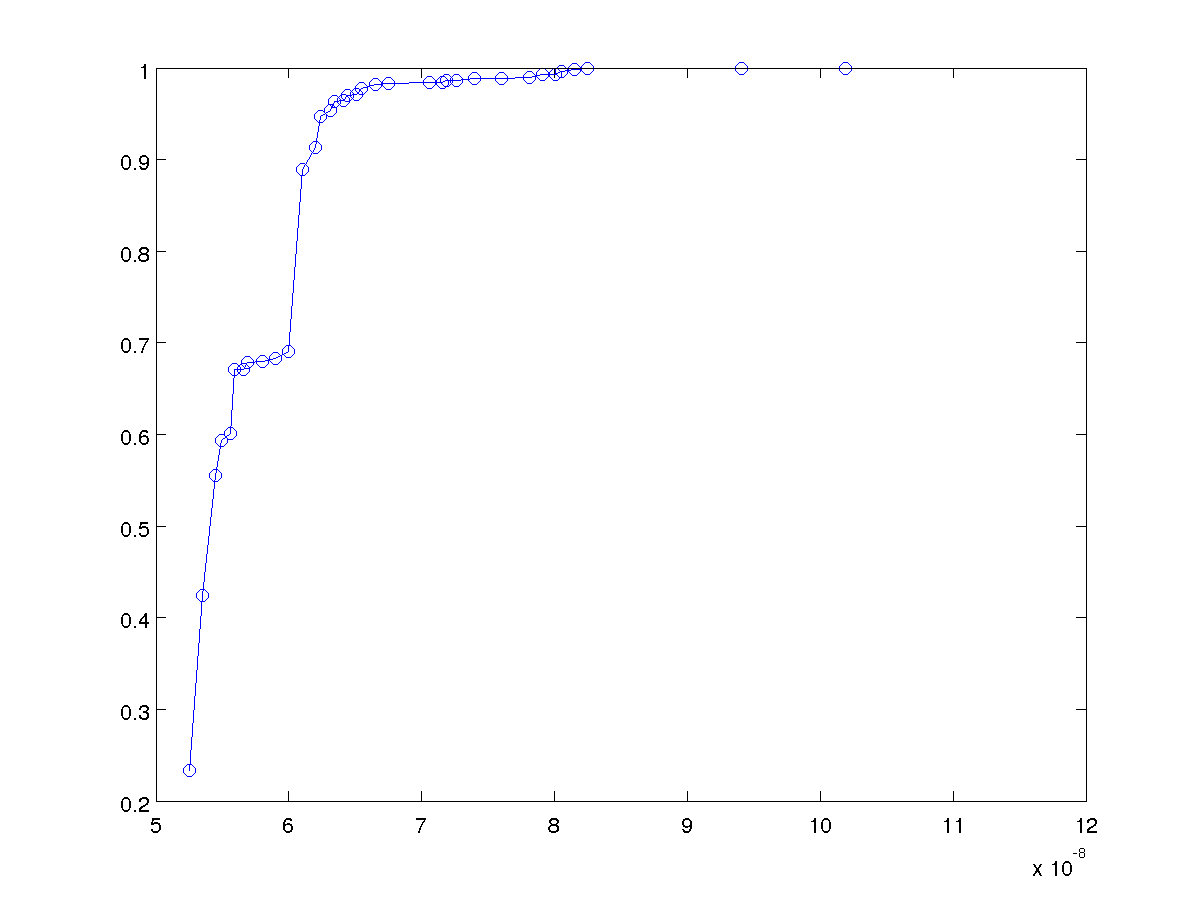

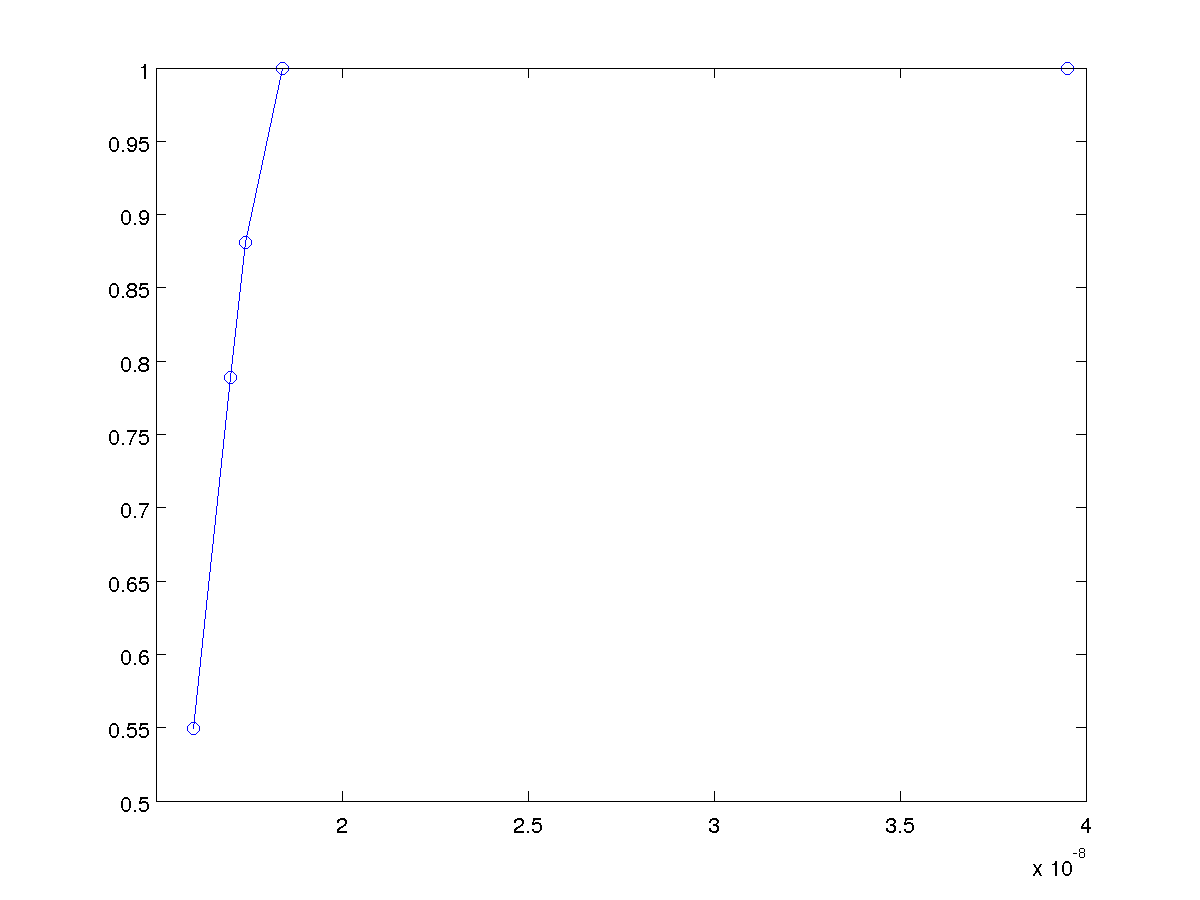

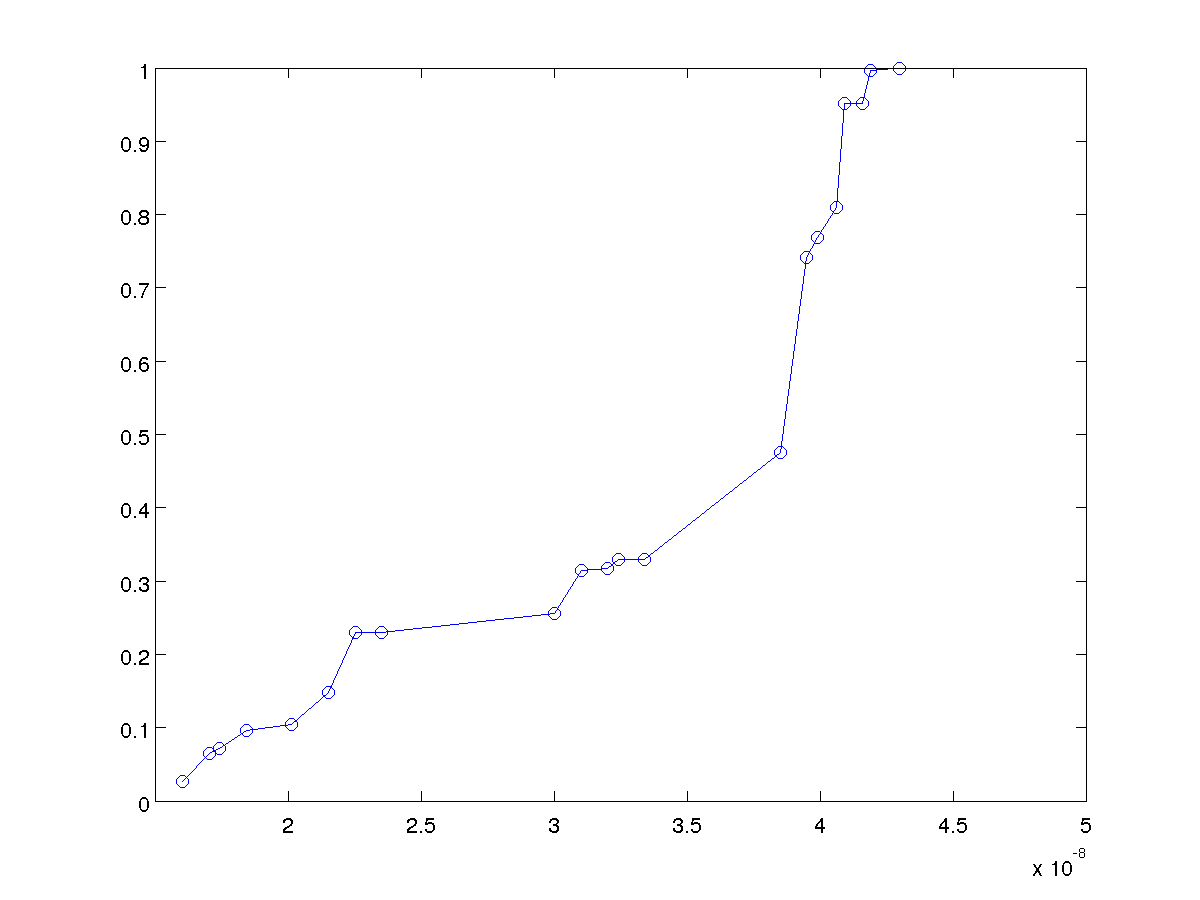

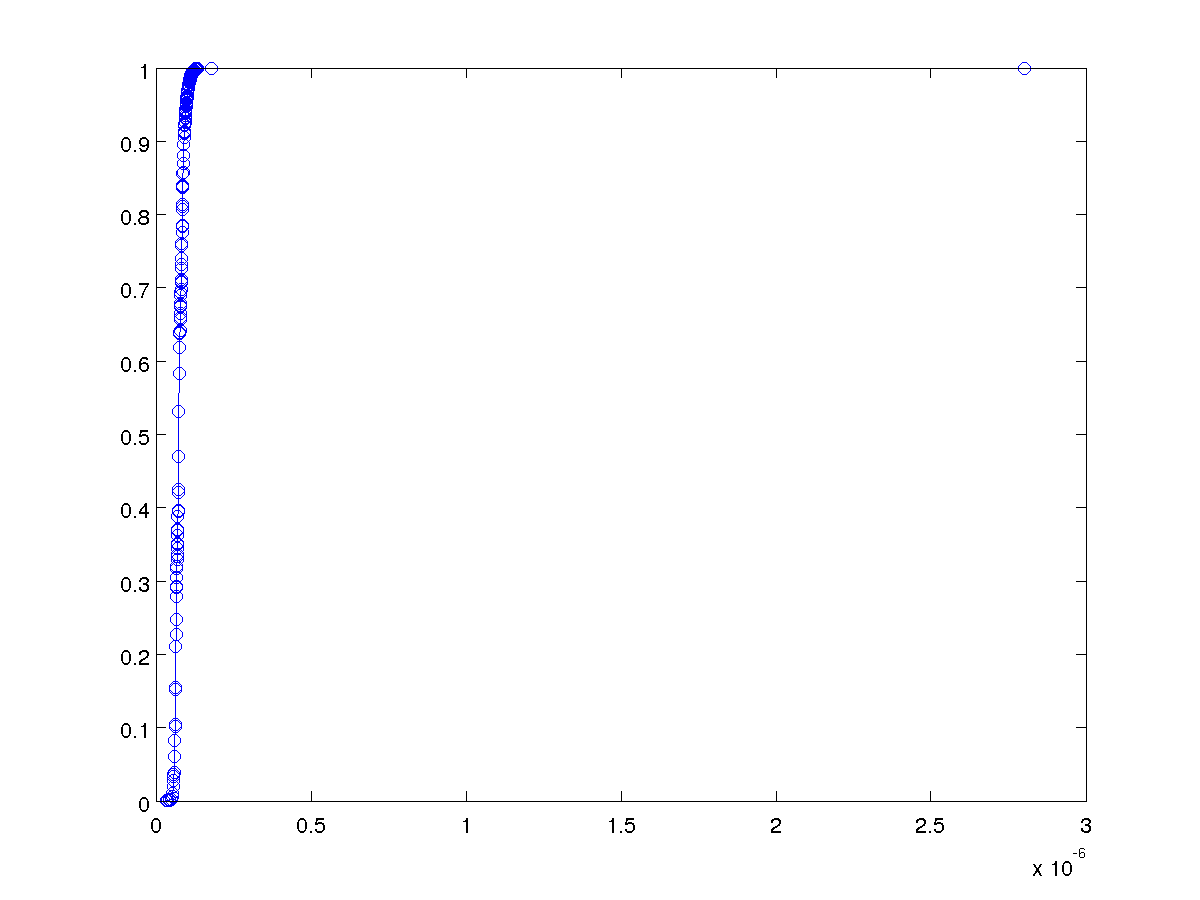

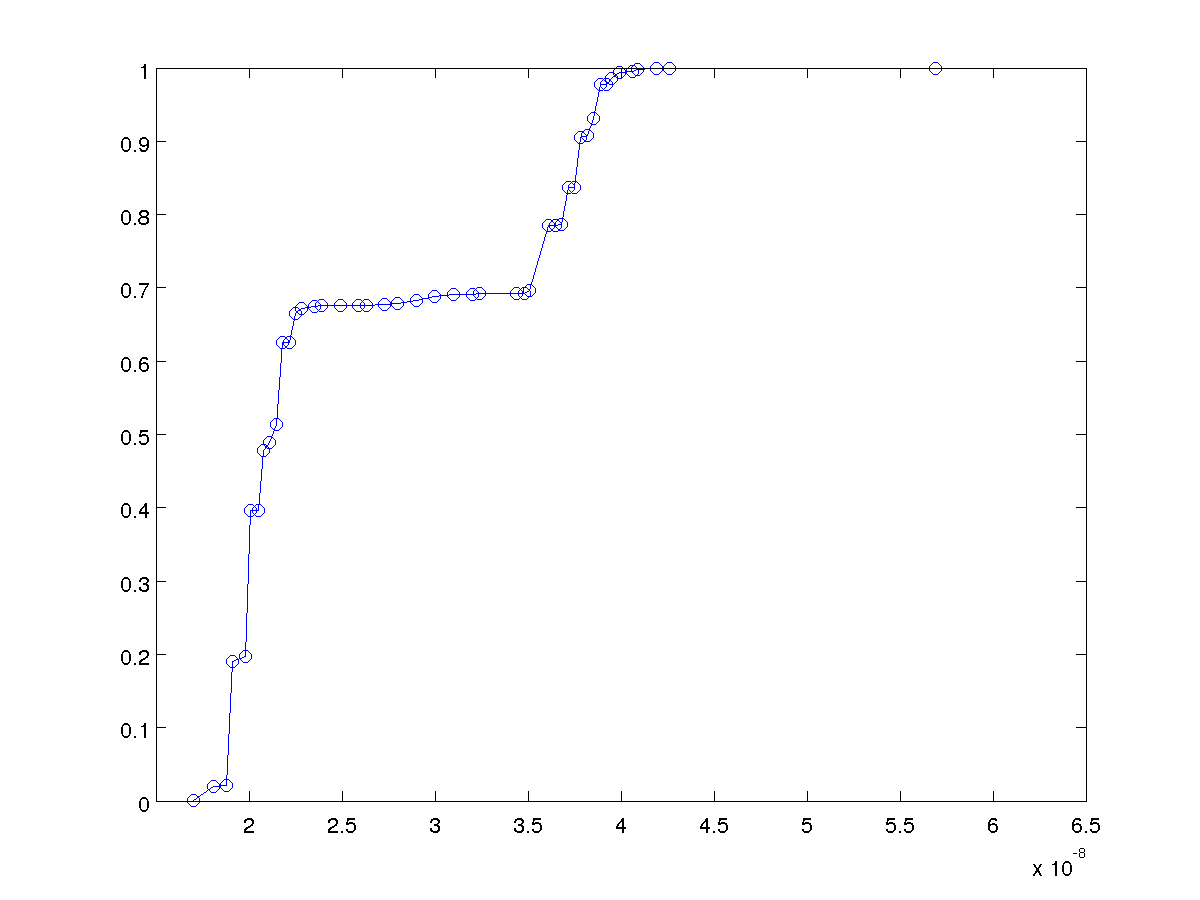

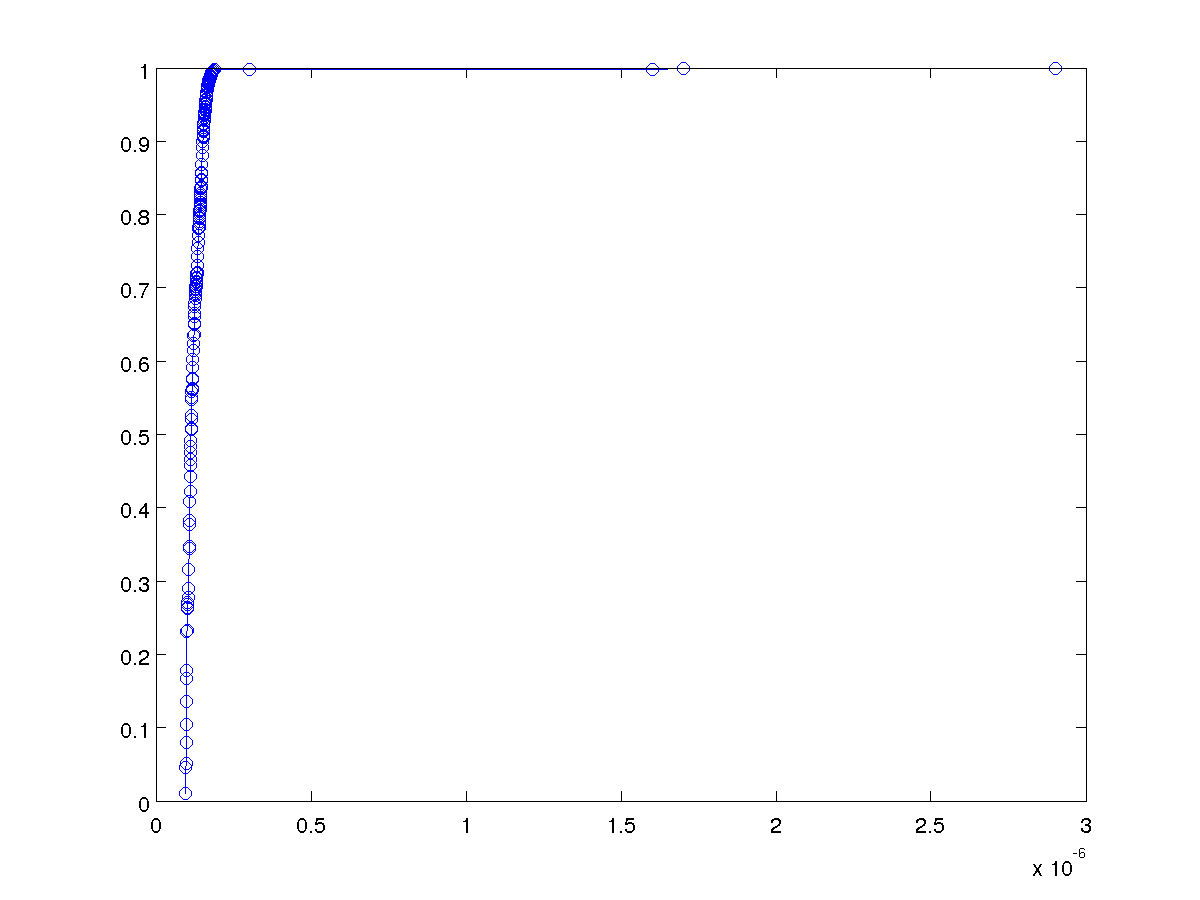

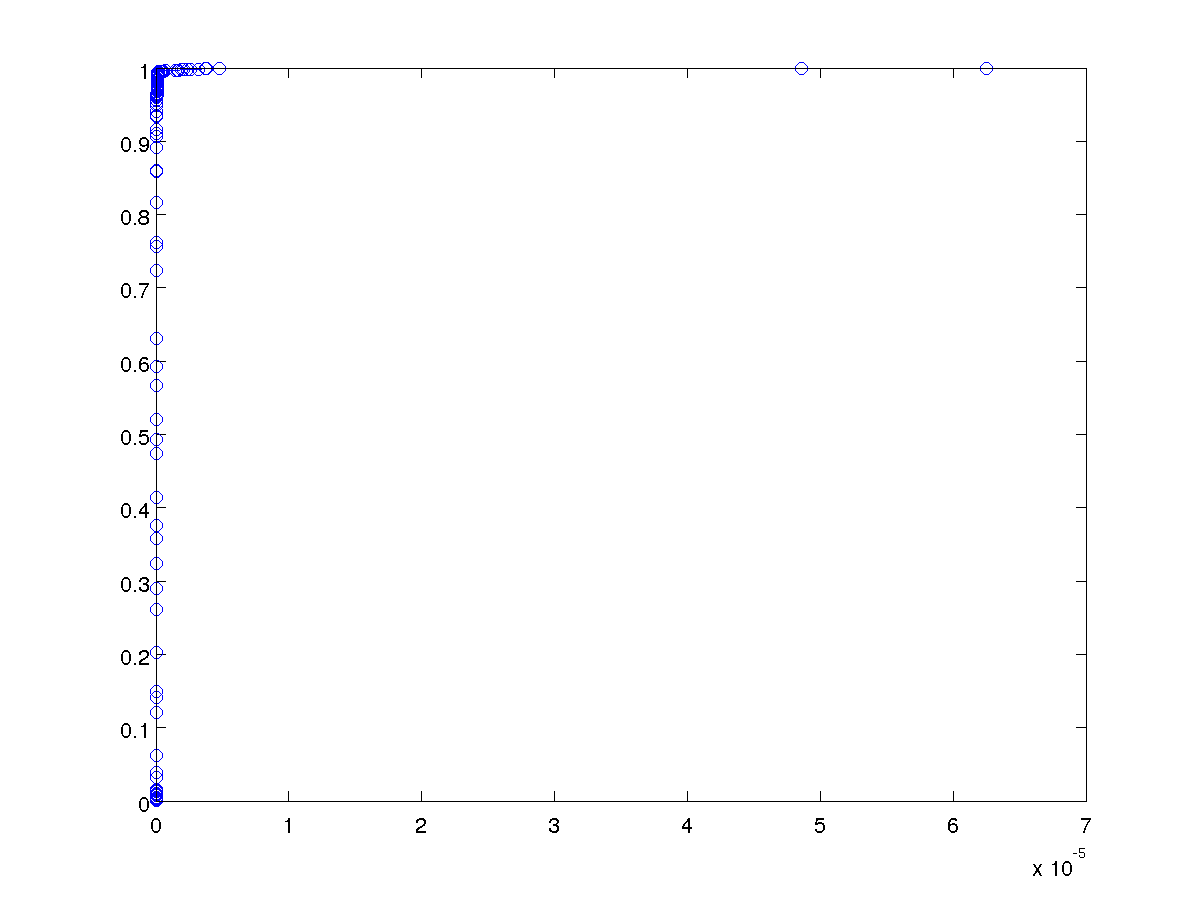

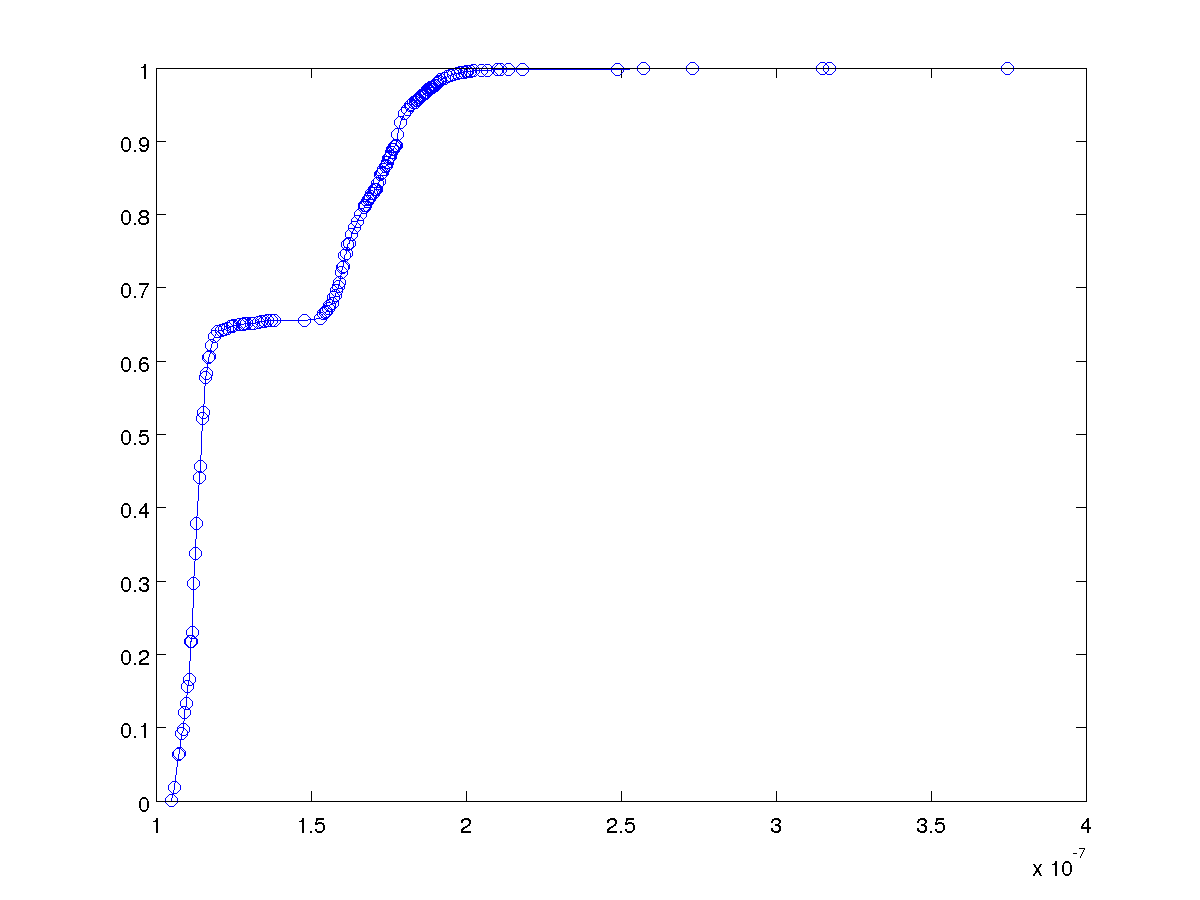

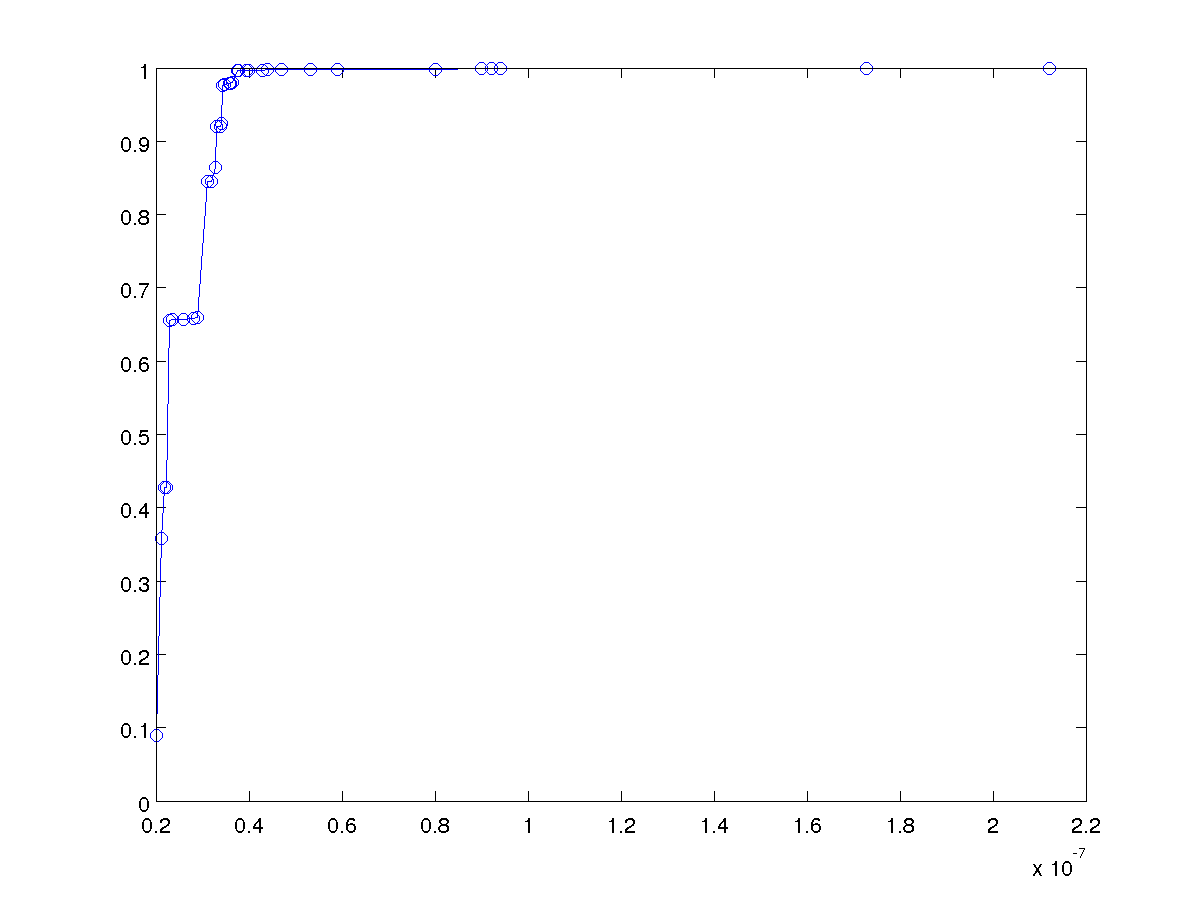

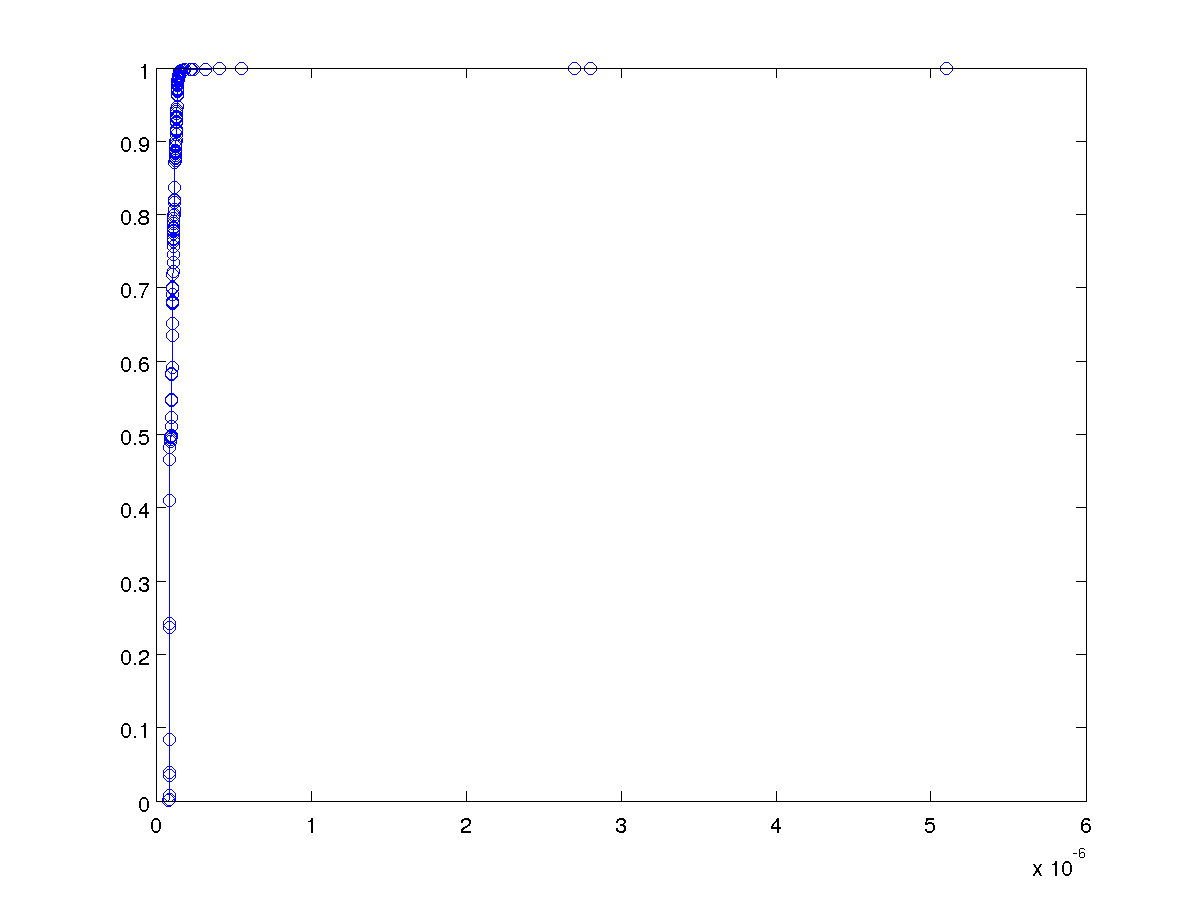

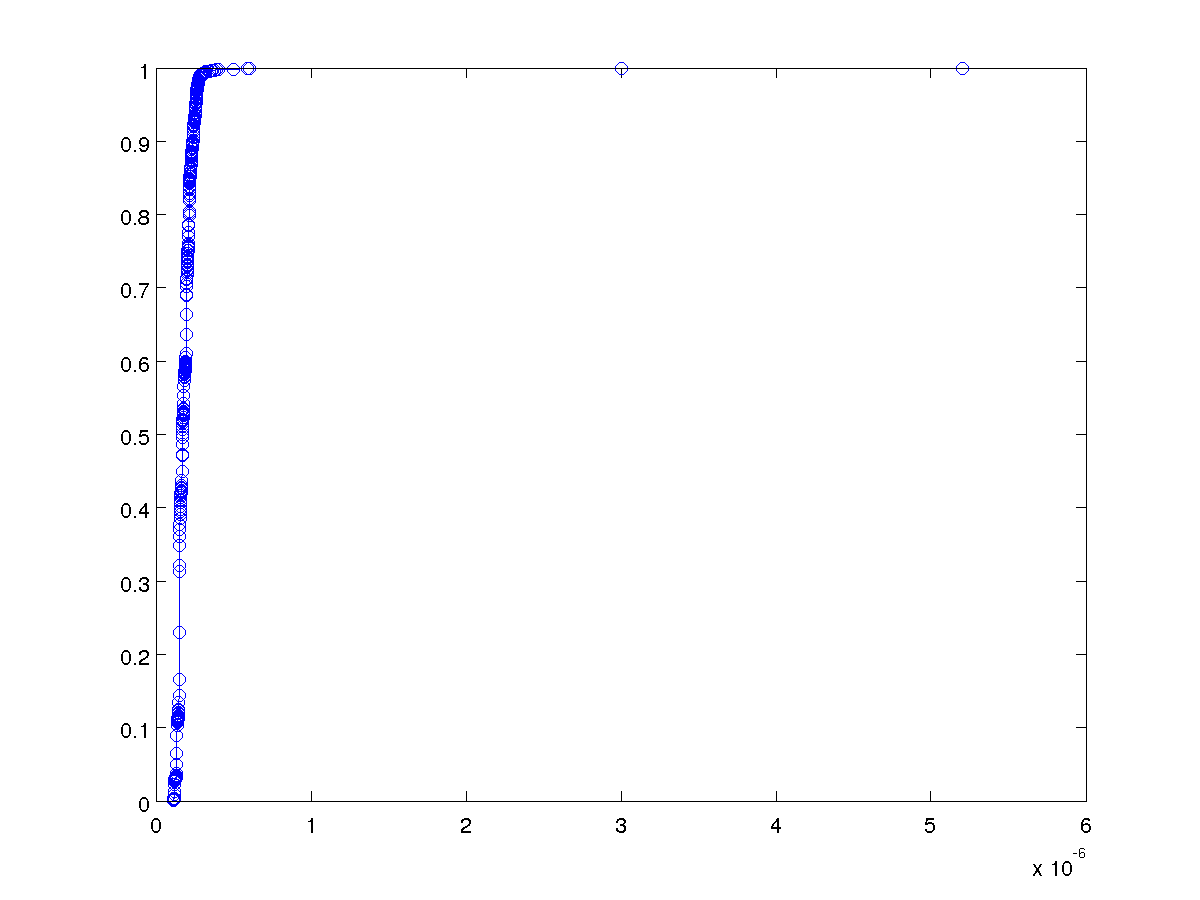

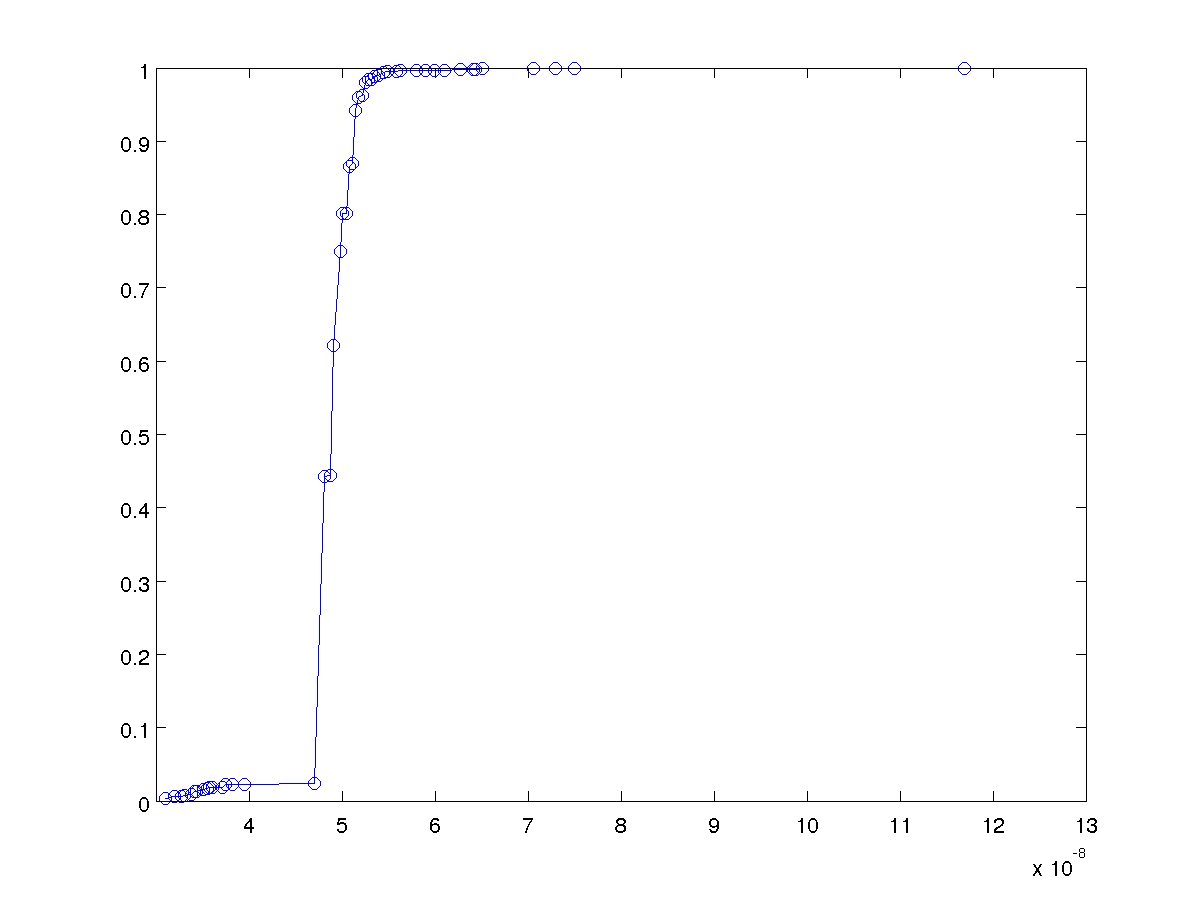

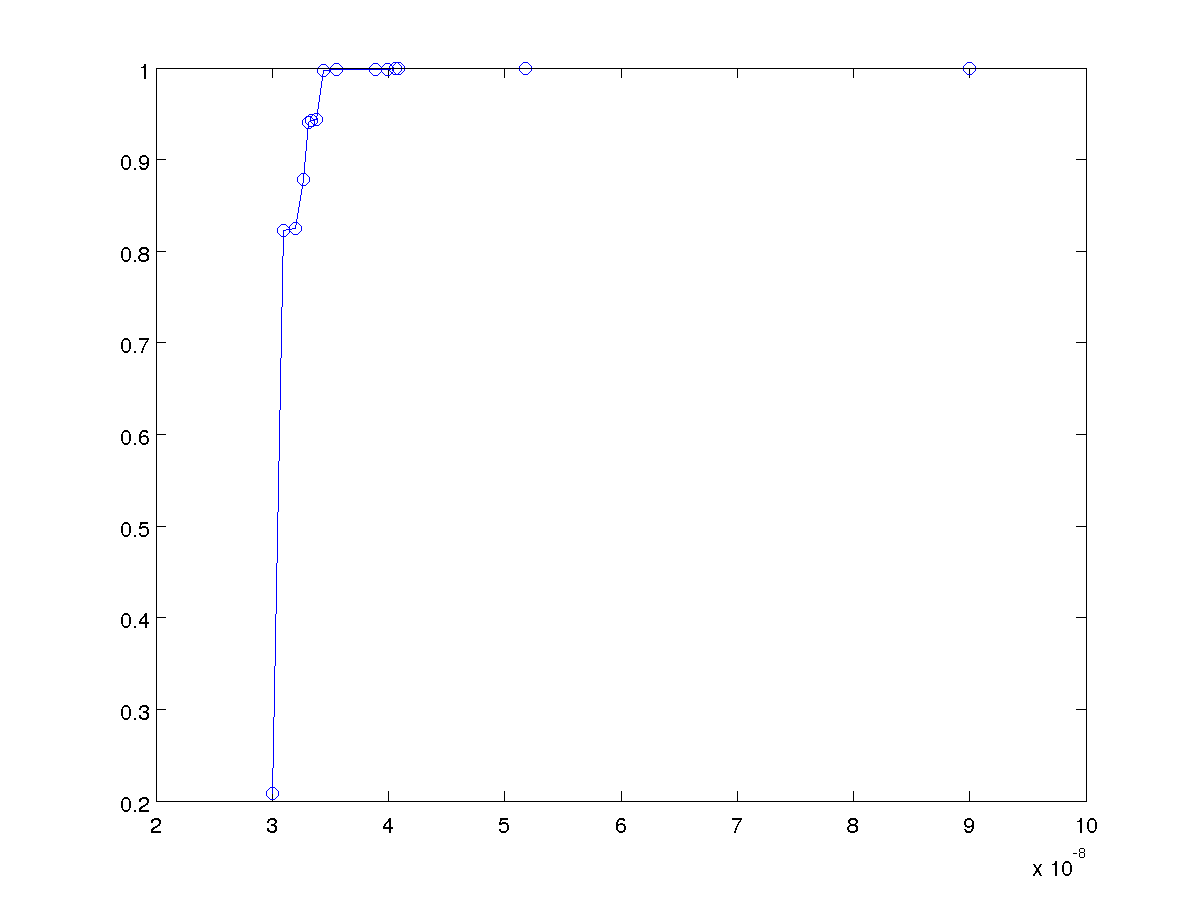

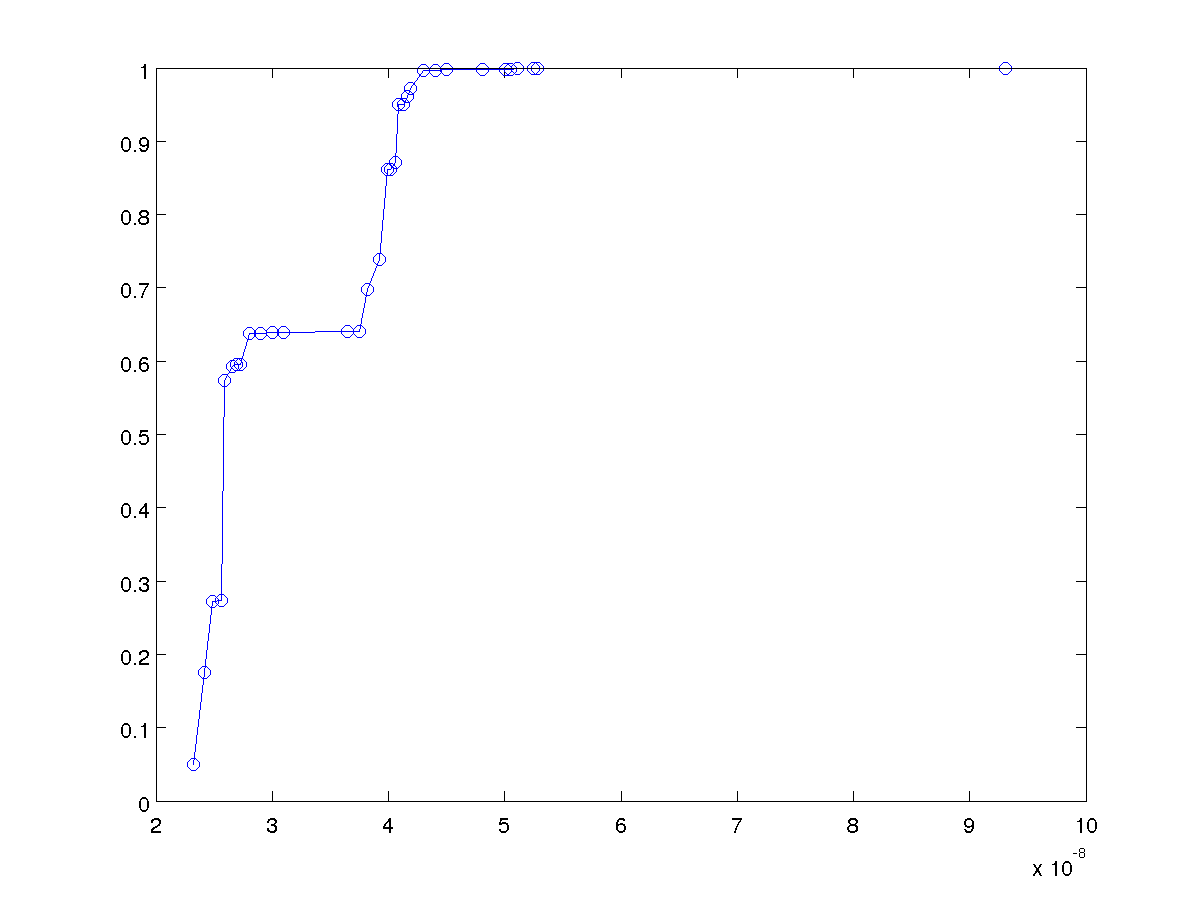

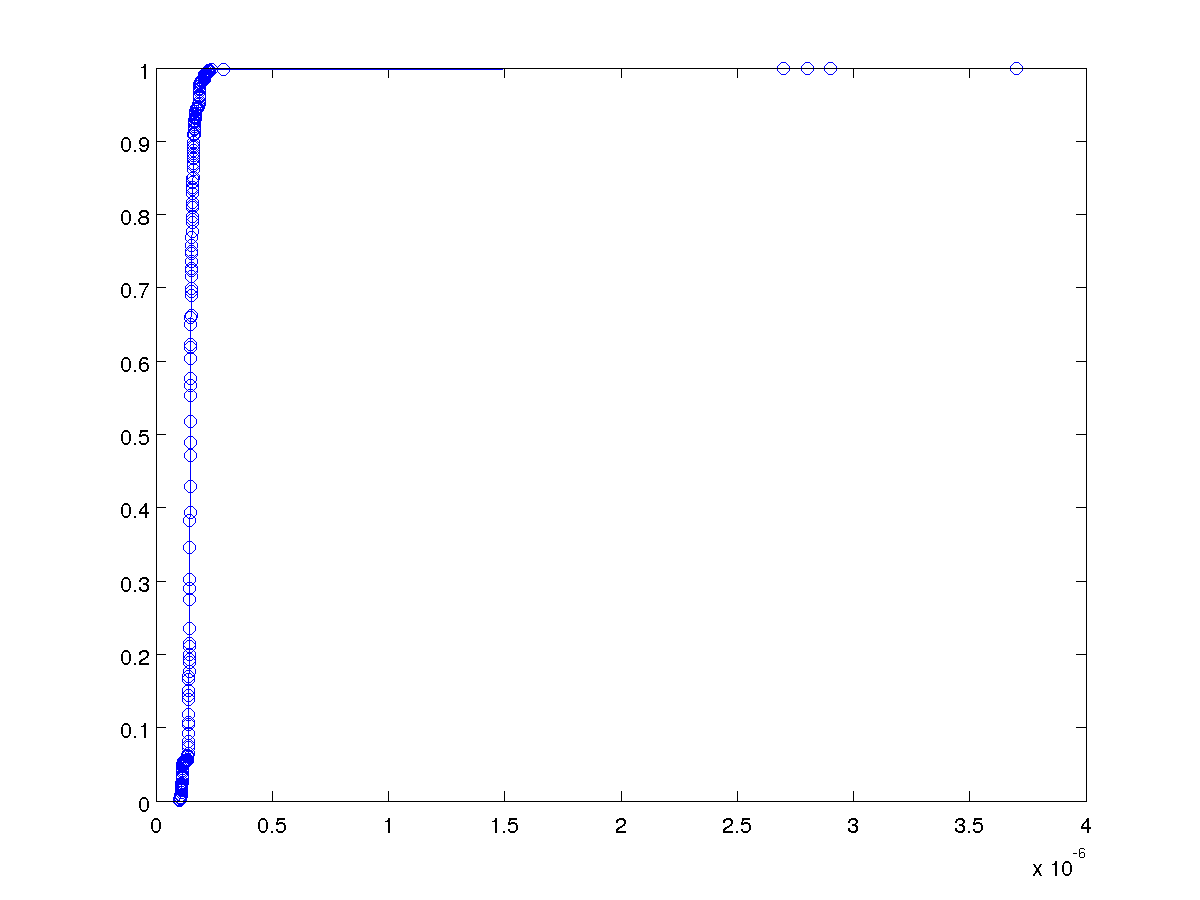

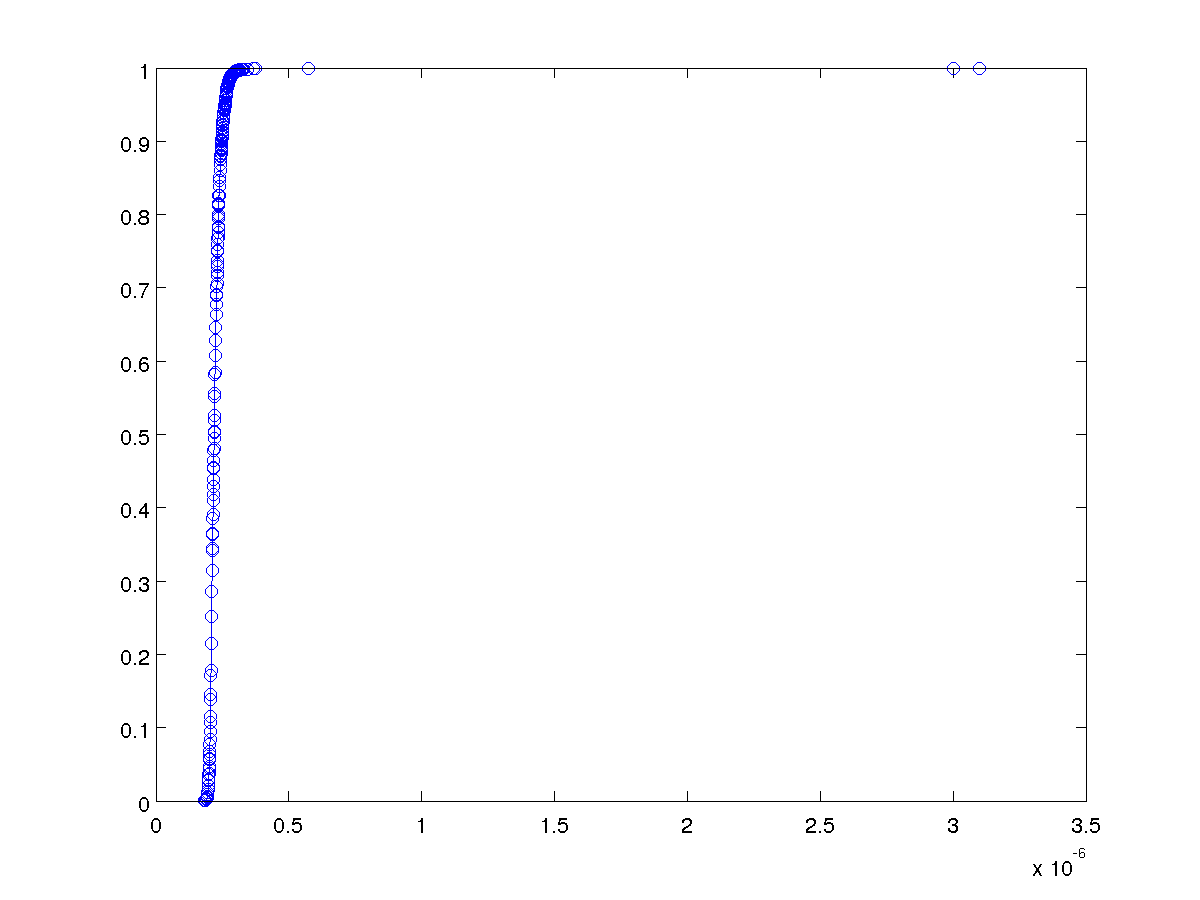

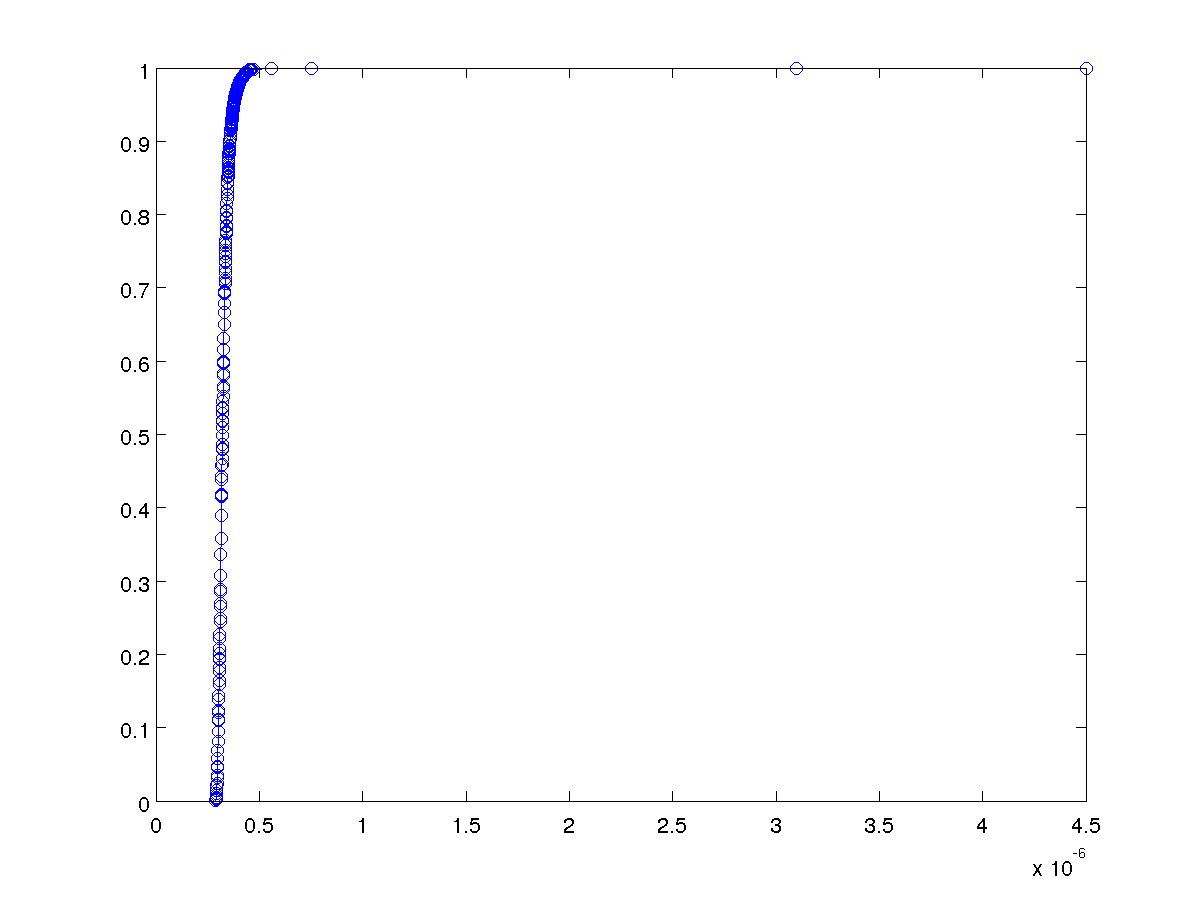

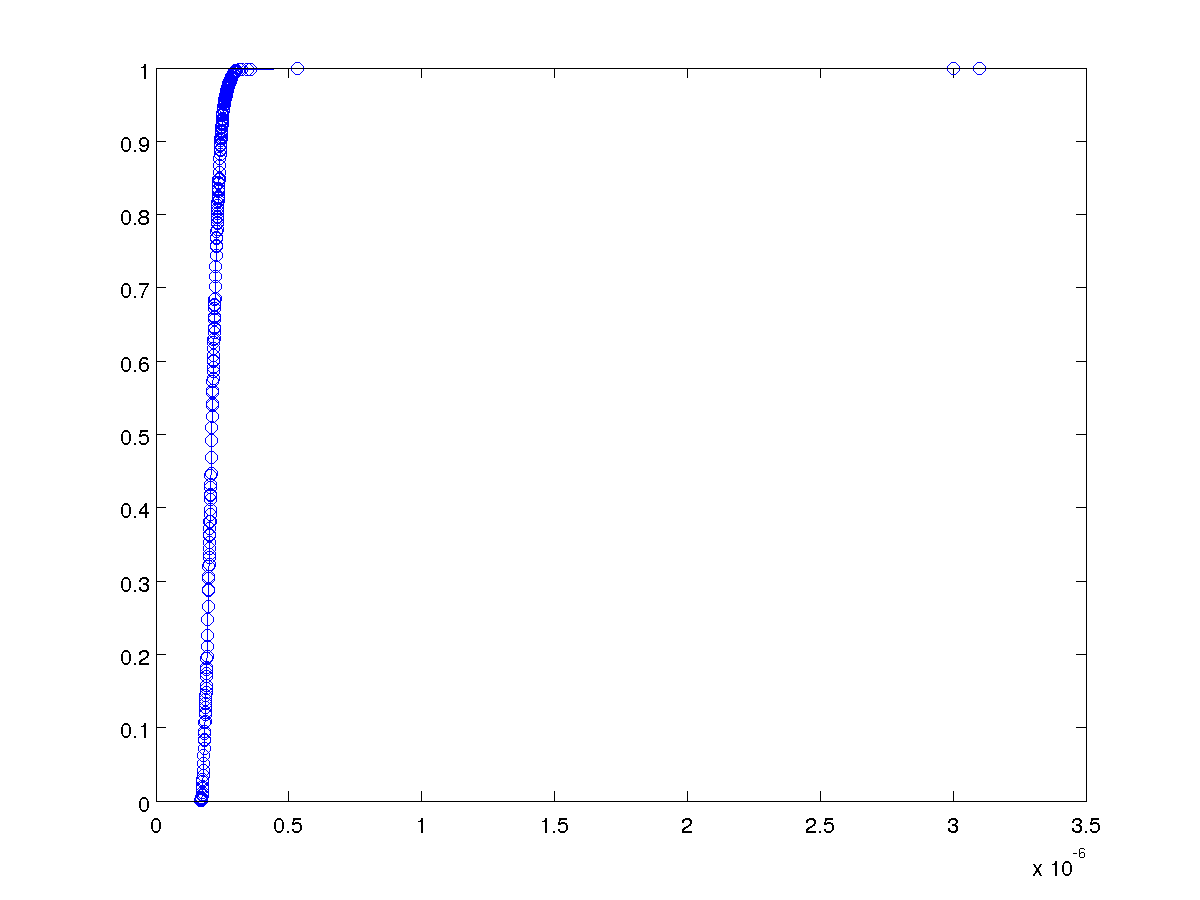

Cumulative distributions for each event

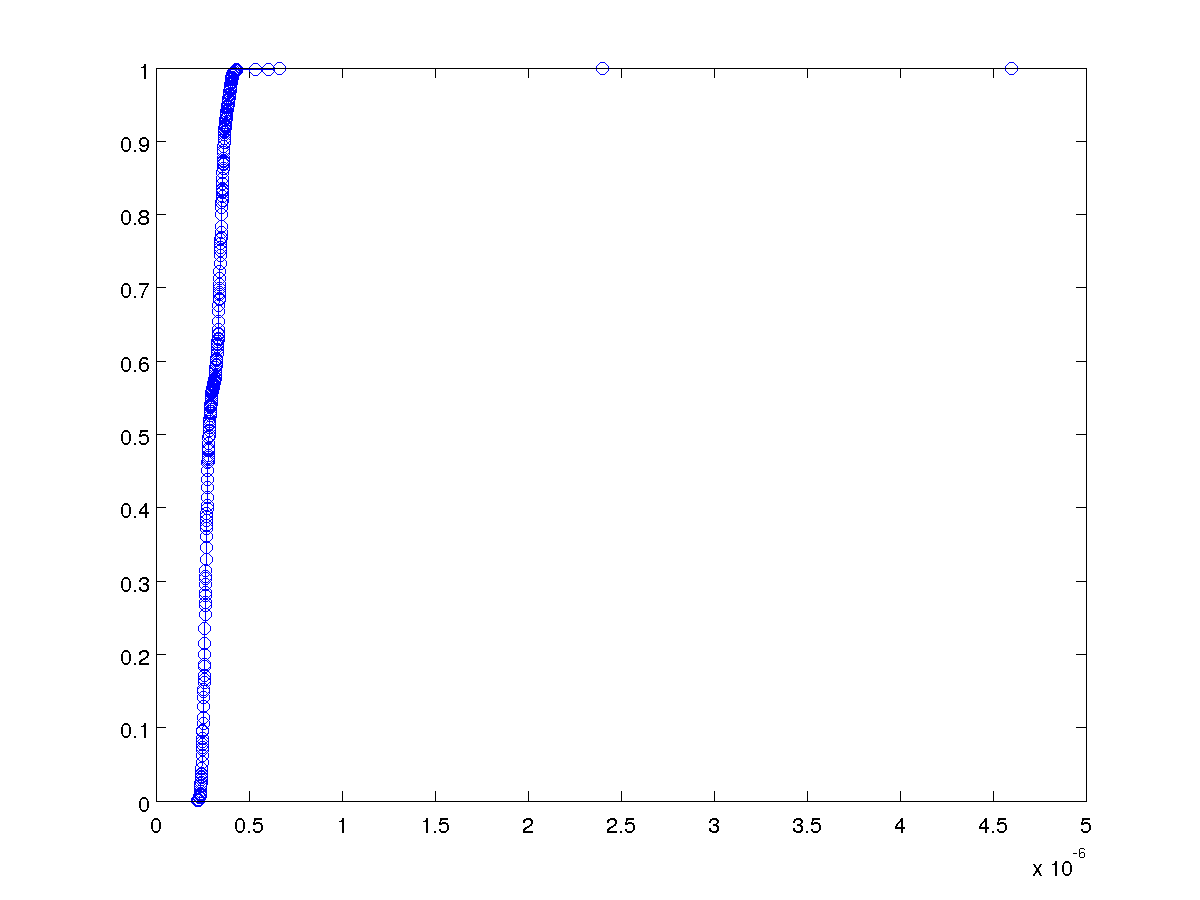

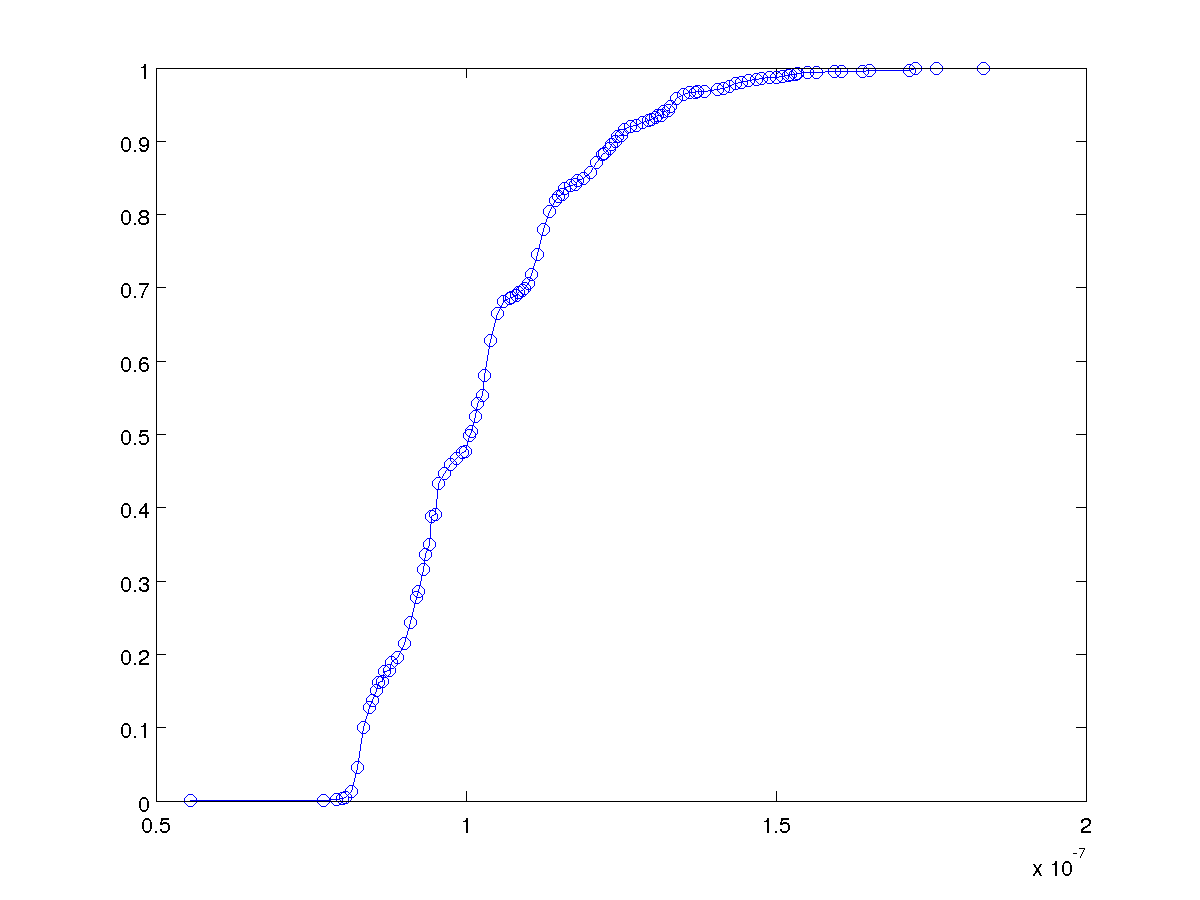

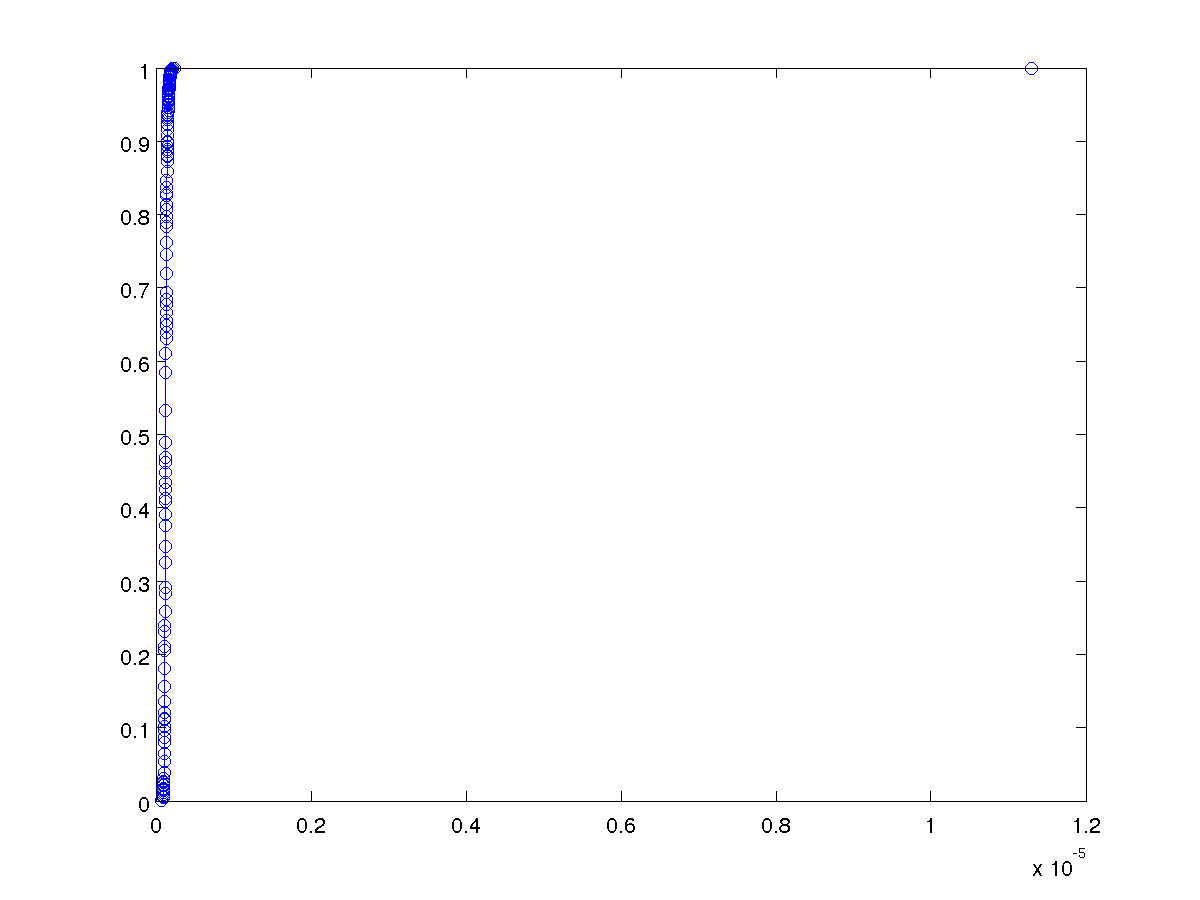

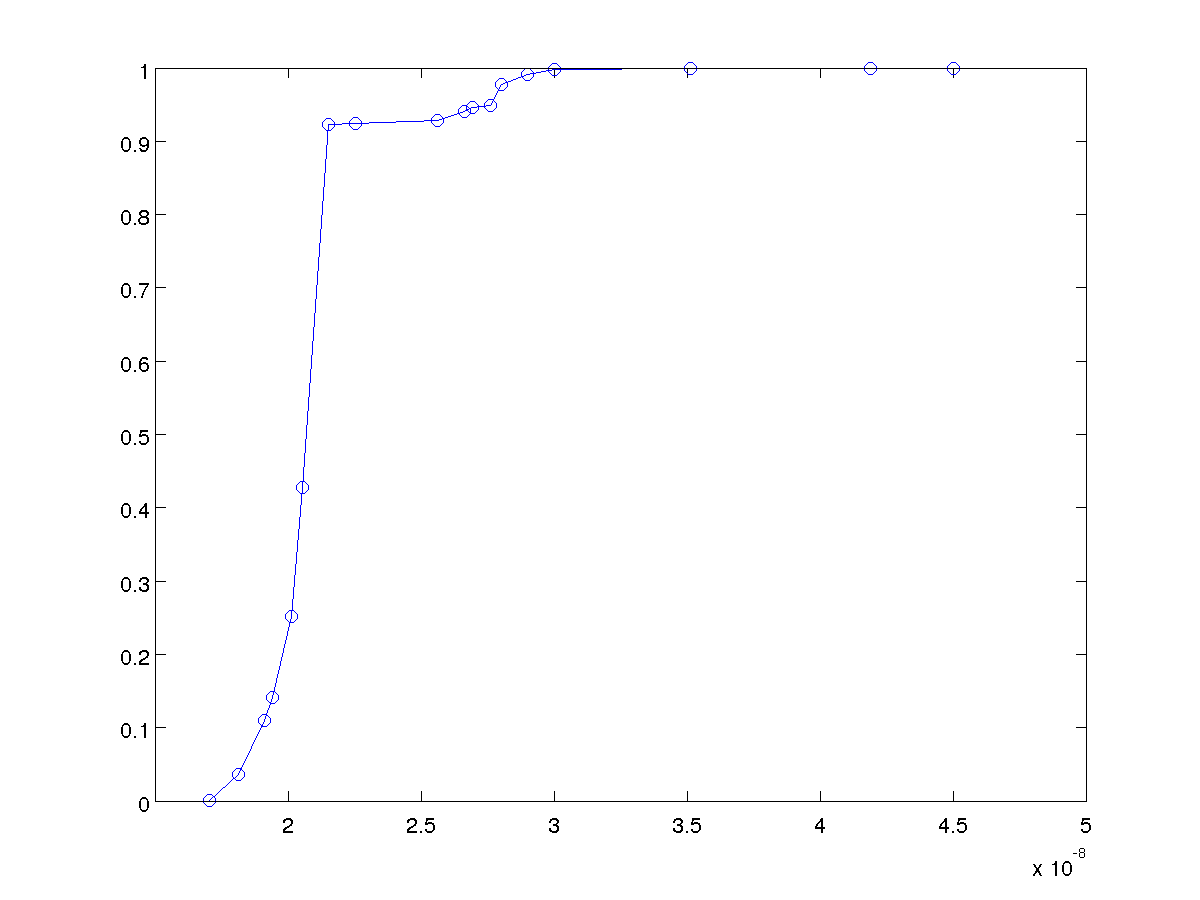

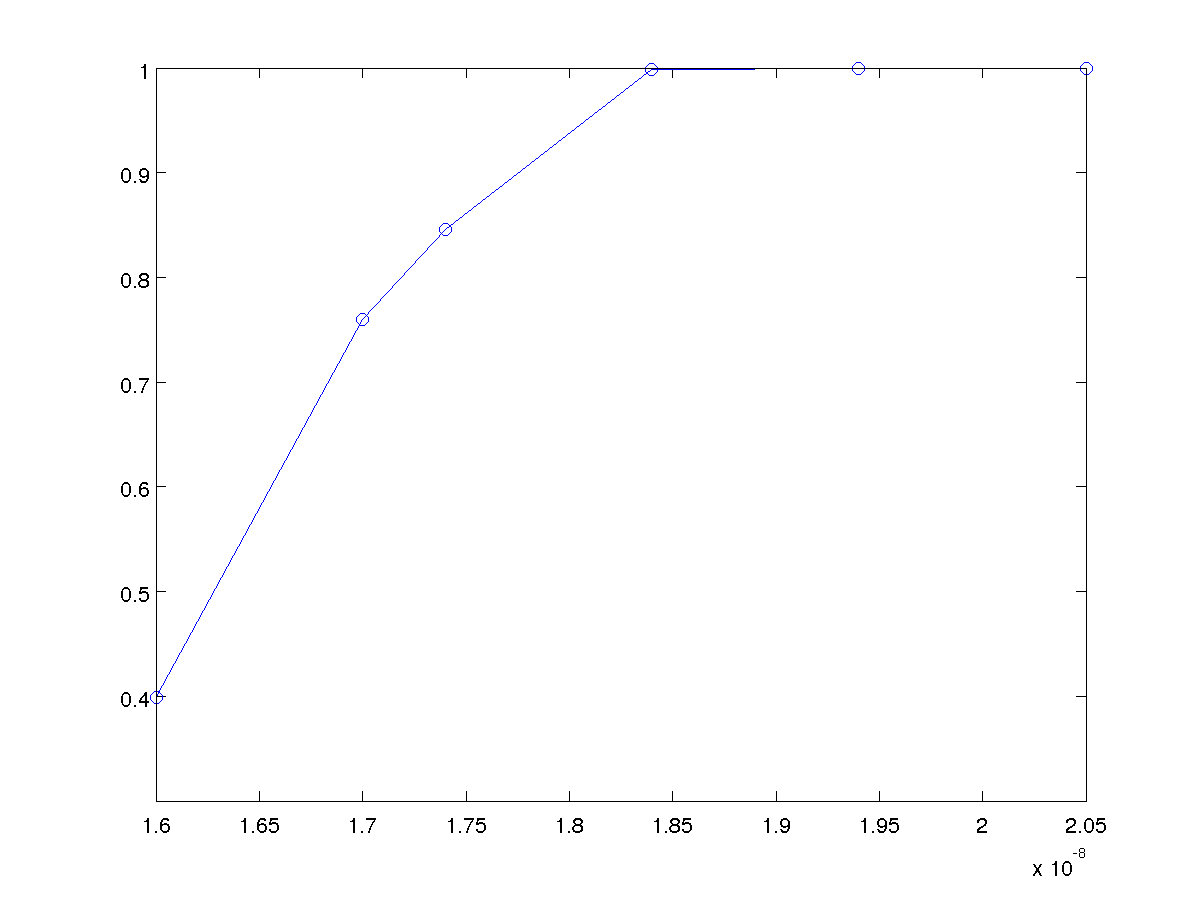

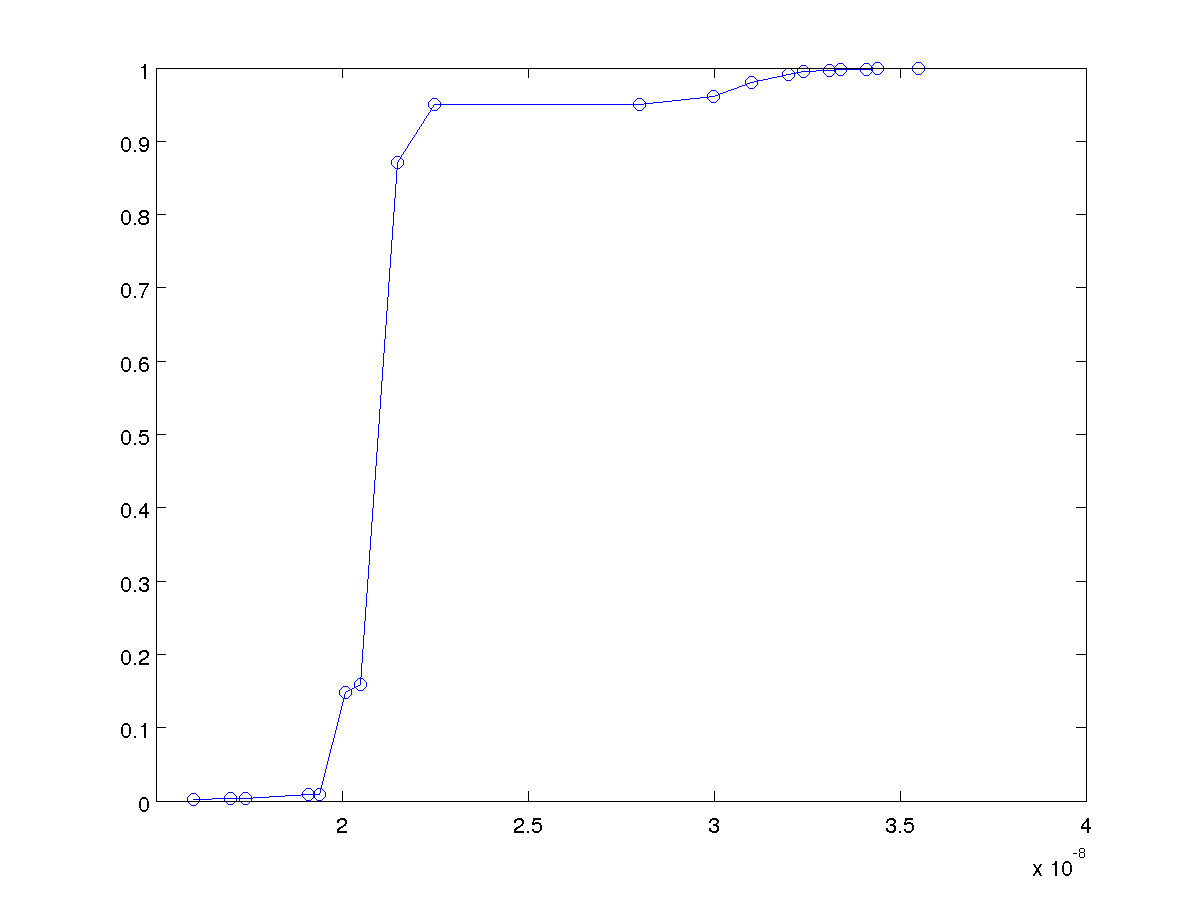

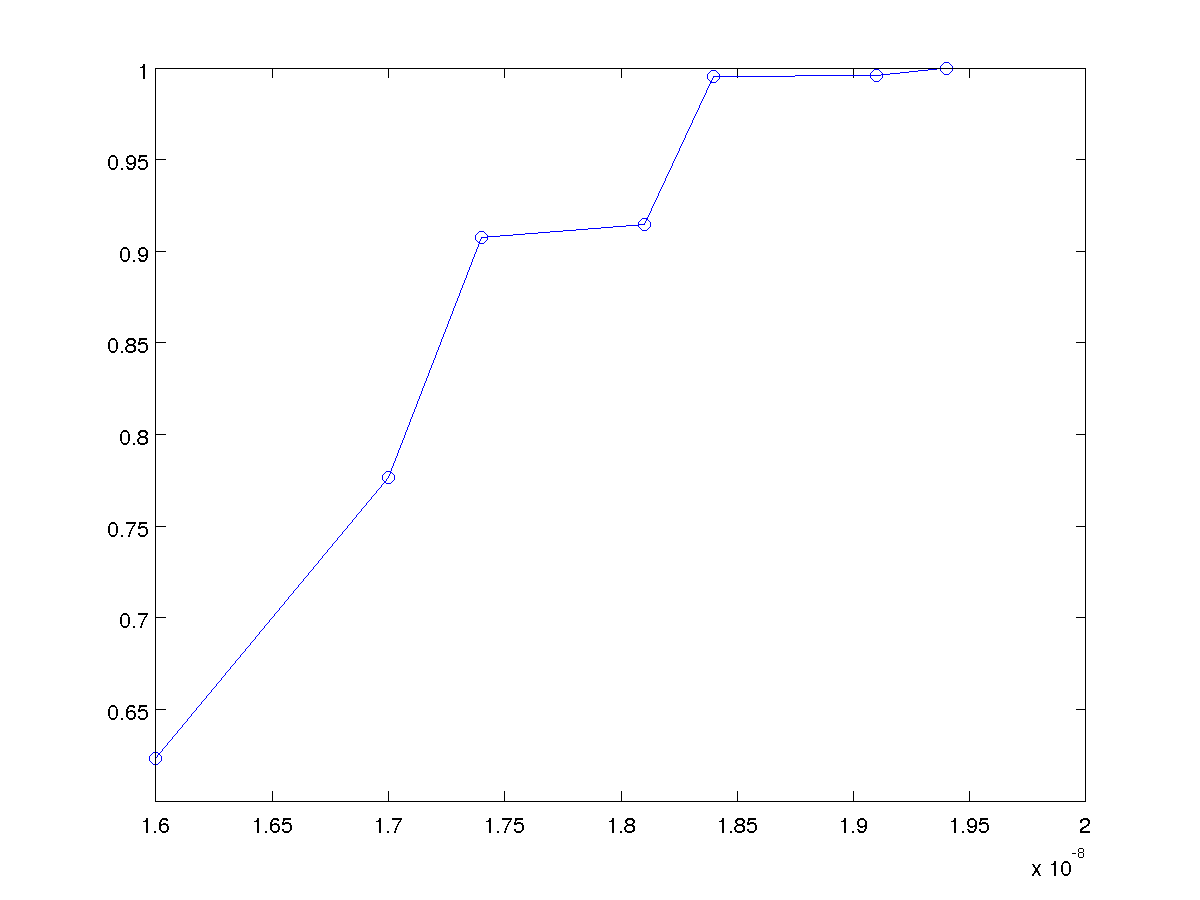

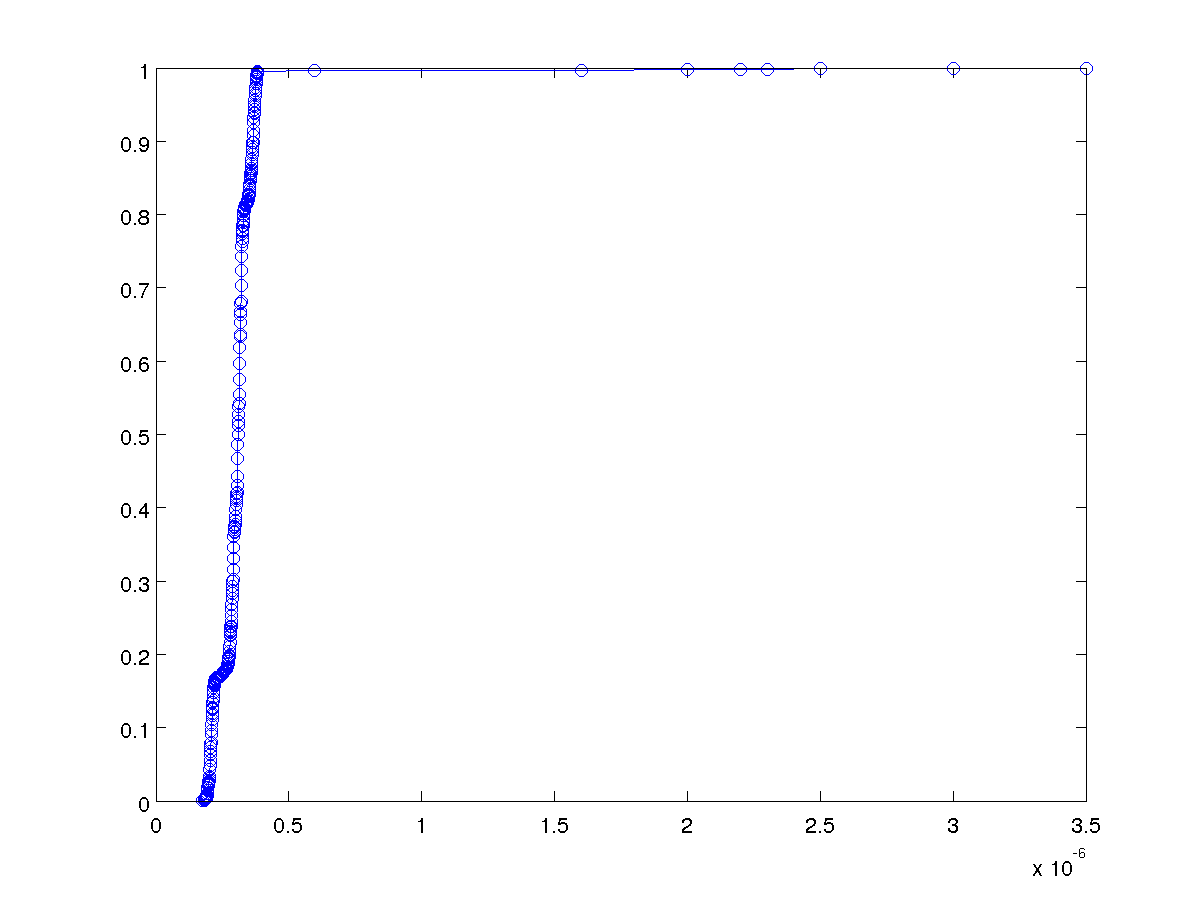

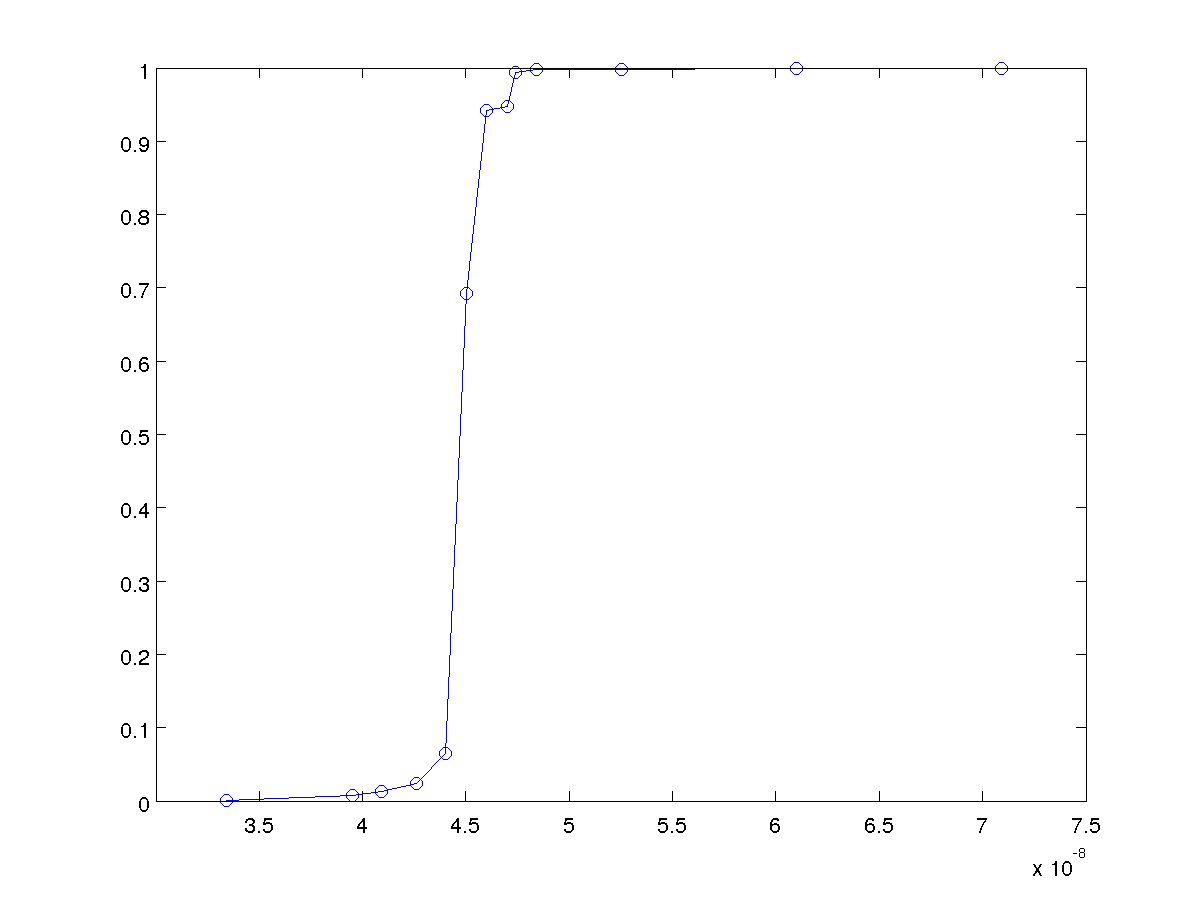

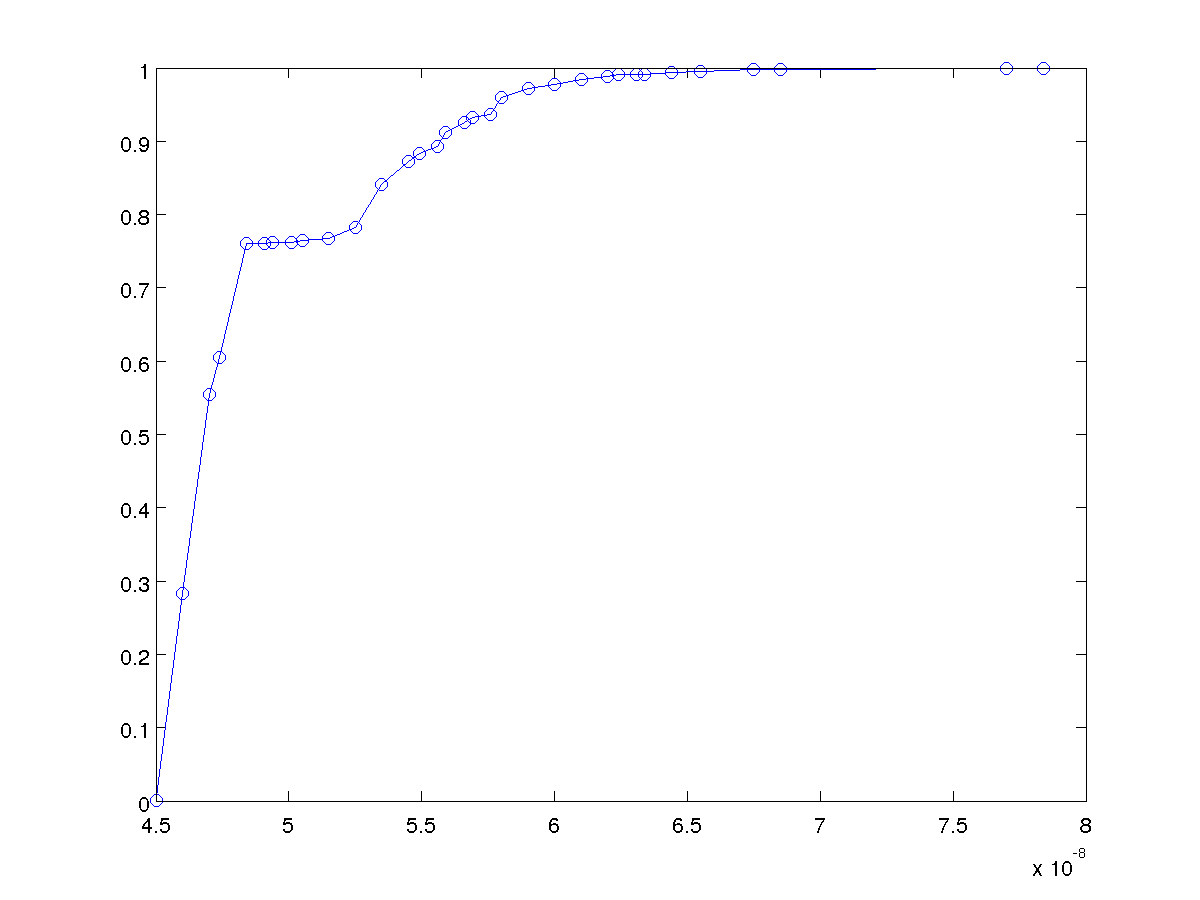

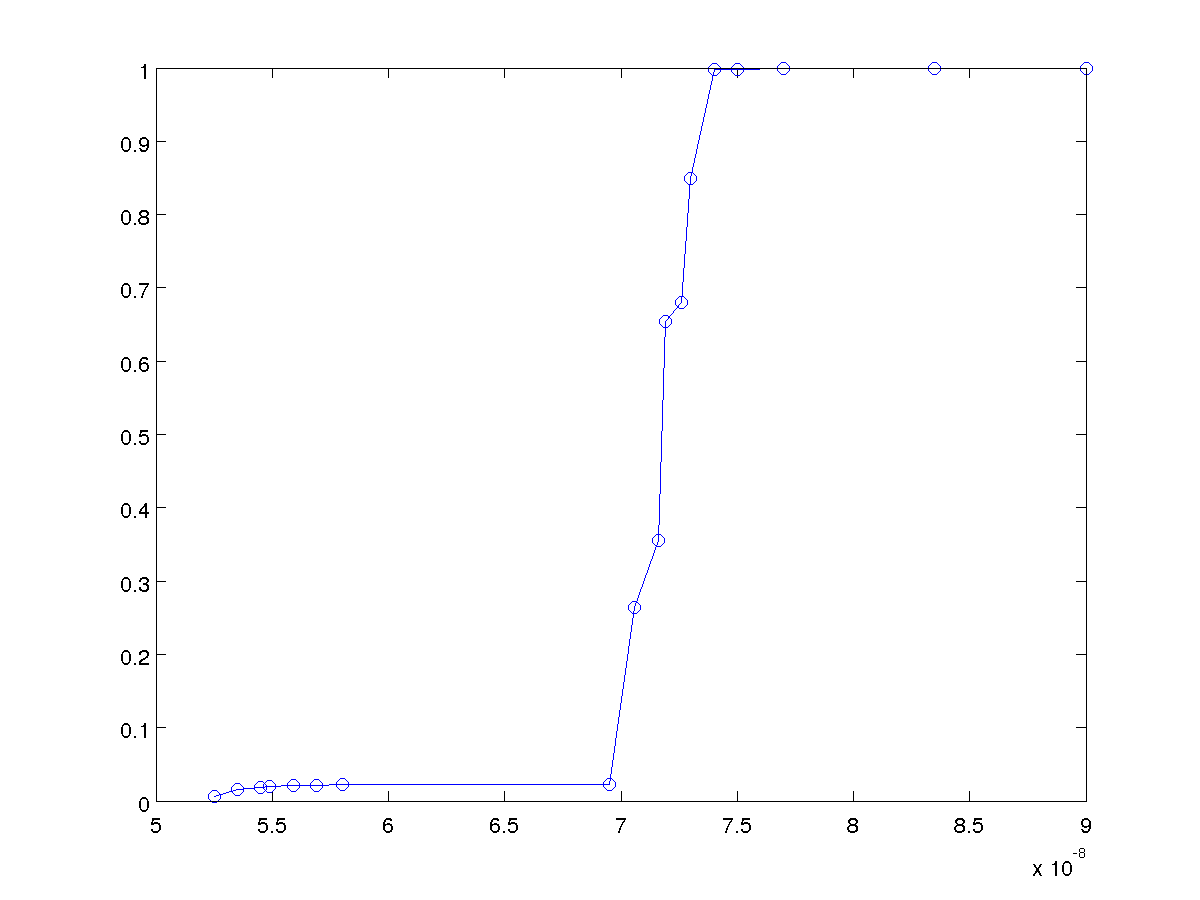

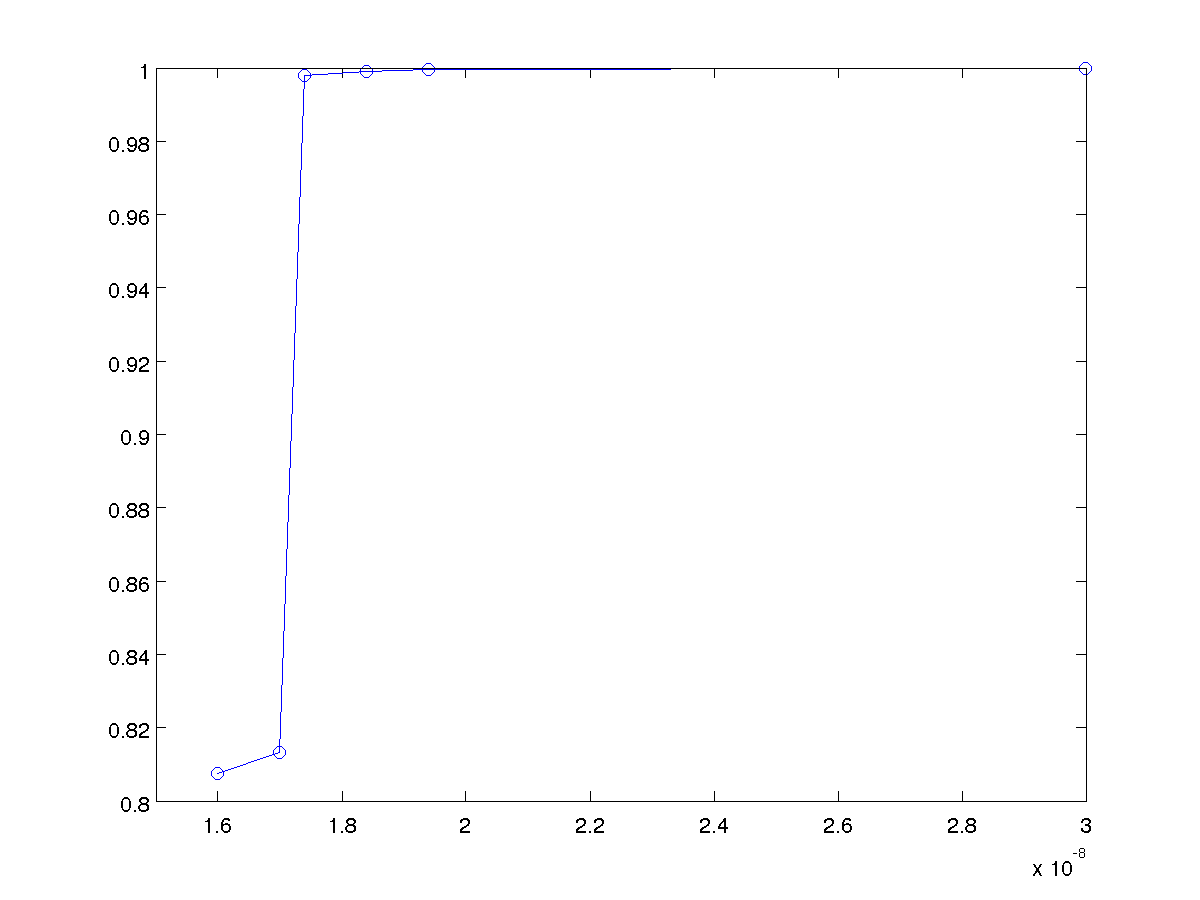

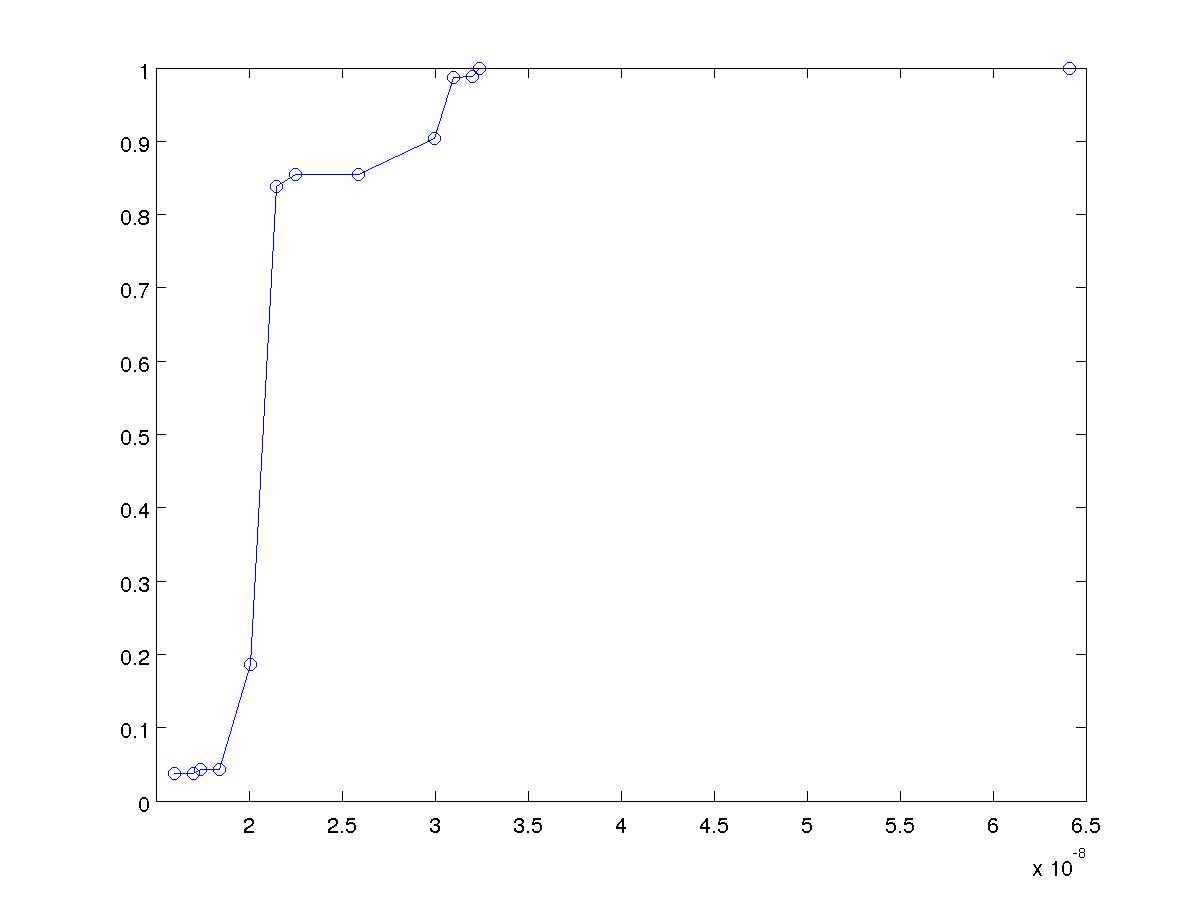

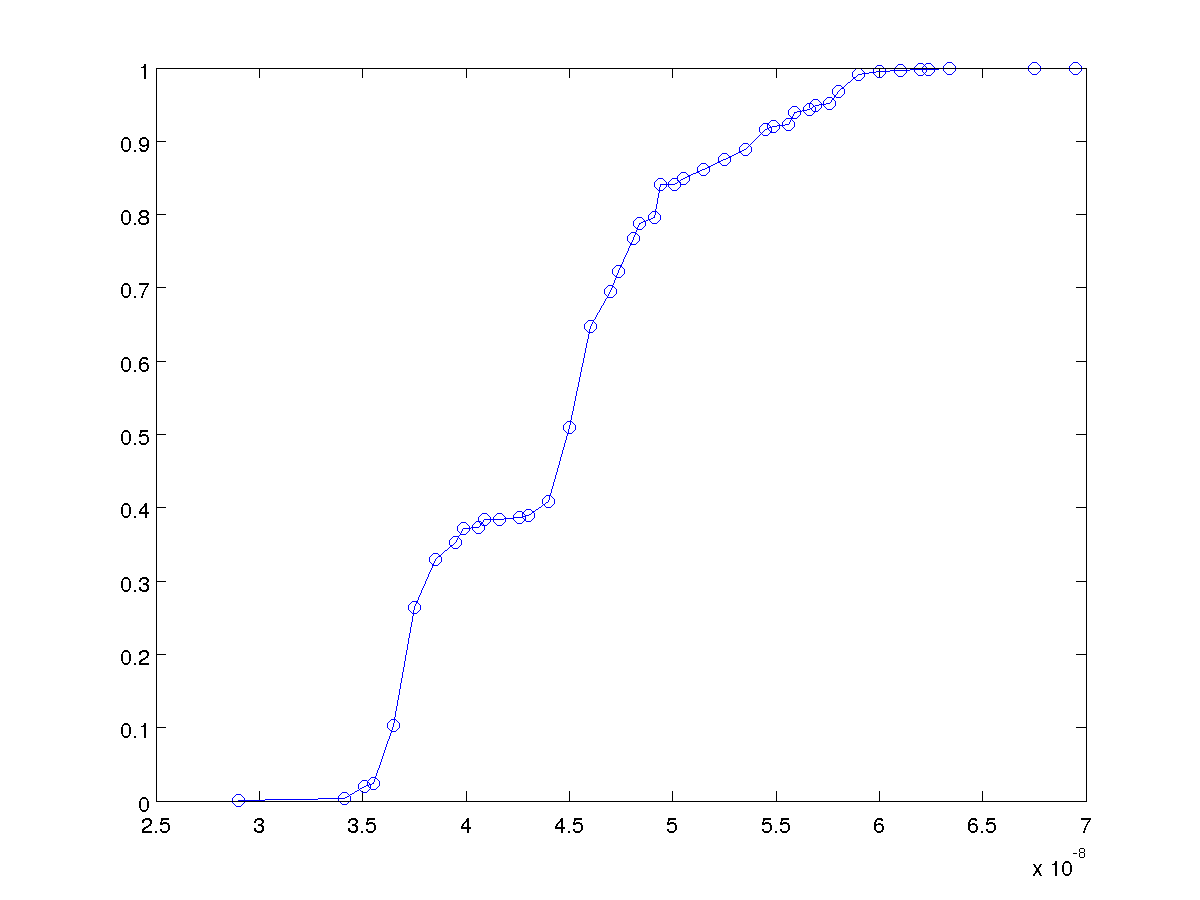

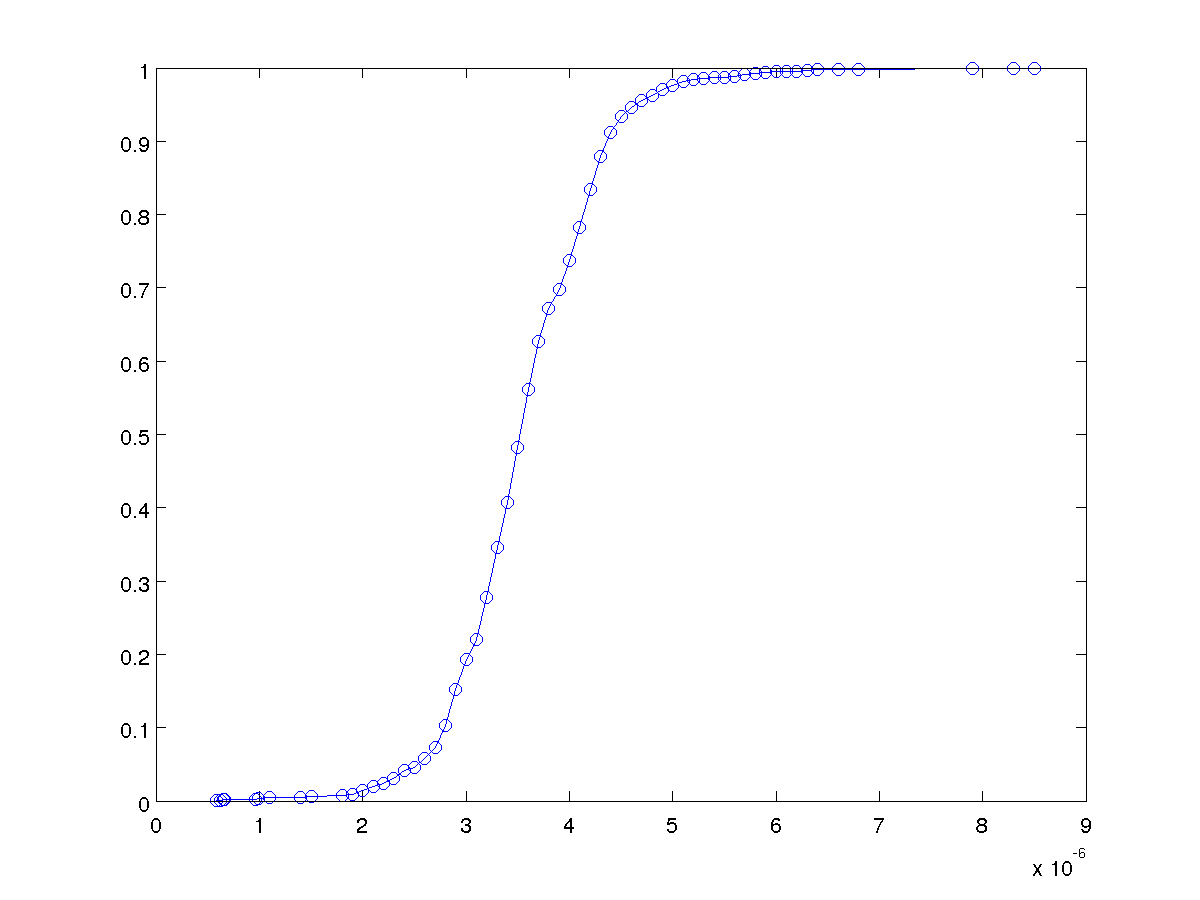

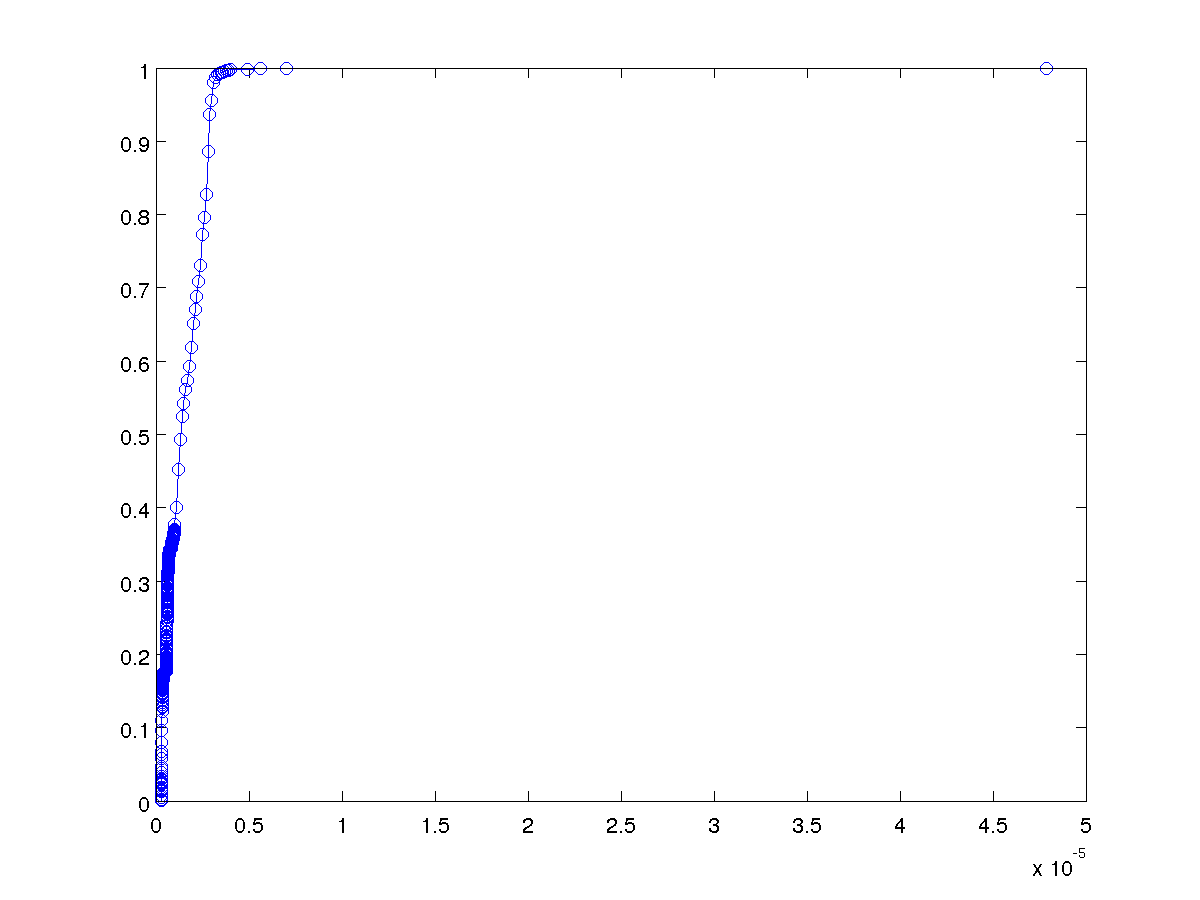

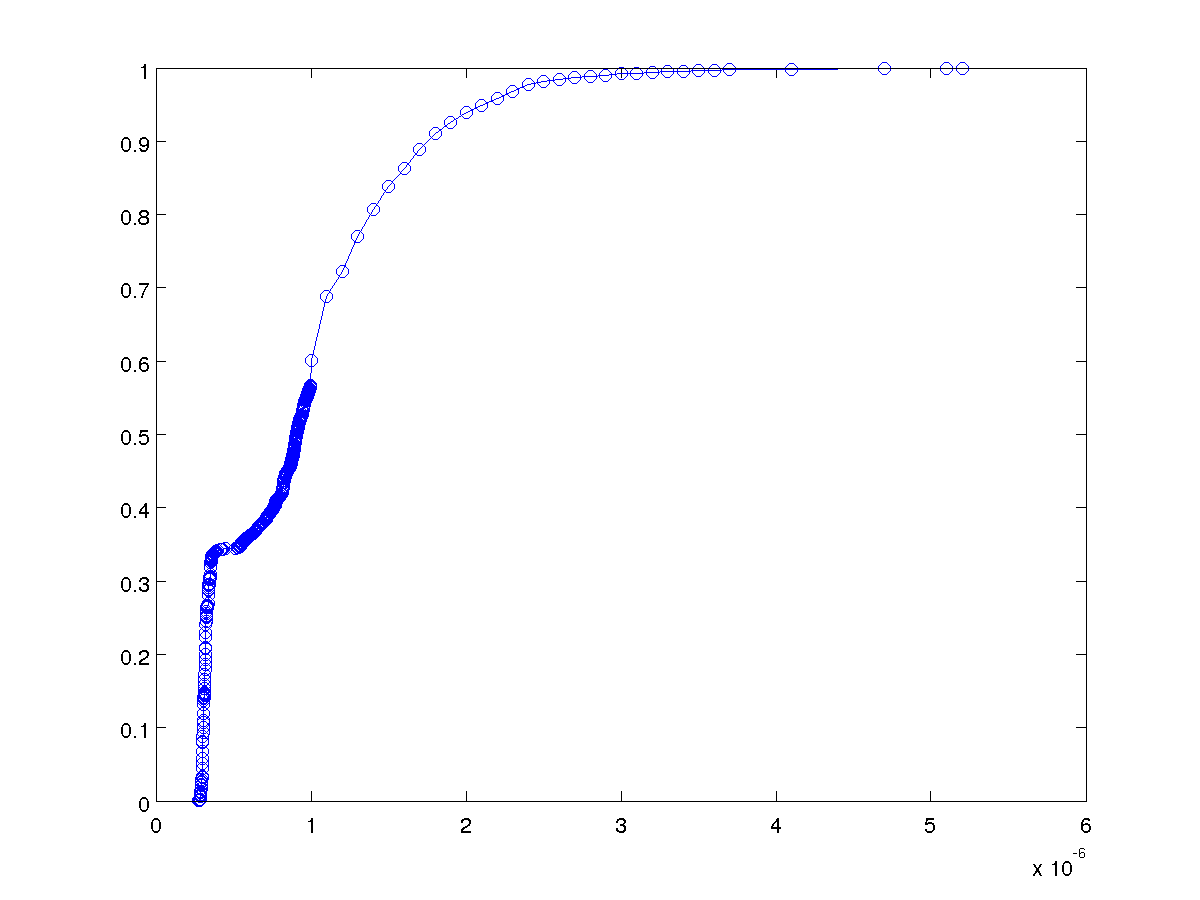

Each event in the timeline is associated with a (cumulative) distribution of "completion" times. The "completion" time for a given event is the time delta between that event and its preceding event; hence, the cdf for the first event is quite boring. The major time sinks described above are labeled by their corresponding number. (Note: the x-axis in the cdf's below is in seconds.)

Event Invoking sync() to backups

Event Invoking Send 'write segment' RPC

Event Invoking client.send()

Event Invoking session->clientSend()

Event Acquired Dispatch::Lock in TransportManager::WorkerSession::clientSend [1]

Event Invoking Instantiate new ClientRpc

Event Completed Instantiate new ClientRpc

Event Invoking rpc->sendOrQueue

Event Invoking postSendZeroCopy [2]

Event Completed postSendZeroCopy [3]

Event Completed rpc->sendOrQueue

Event Completed session->clientSend()

Event Completed client.send()

Event Completed Send 'write segment' RPC

Event Invoking Send 'write segment' RPC

Event Invoking client.send()

Event Invoking session->clientSend()

Event Acquired Dispatch::Lock in TransportManager::WorkerSession::clientSend [1]

Event Invoking Instantiate new ClientRpc

Event Completed Instantiate new ClientRpc

Event Invoking rpc->sendOrQueue

Event Invoking postSendZeroCopy [2]

Event Completed postSendZeroCopy [3]

Event Completed rpc->sendOrQueue

Event Completed session->clientSend()

Event Completed client.send()

Event Completed Send 'write segment' RPC

Event Invoking Send 'write segment' RPC

Event Invoking client.send()

Event Invoking session->clientSend()

Event Acquired Dispatch::Lock in TransportManager::WorkerSession::clientSend [1]

Event Invoking Instantiate new ClientRpc

Event Completed Instantiate new ClientRpc

Event Invoking rpc->sendOrQueue

Event Invoking postSendZeroCopy [2]

Event Completed postSendZeroCopy [3]

Event Completed rpc->sendOrQueue

Event Completed session->clientSend()

Event Completed client.send()

Event Completed Send 'write segment' RPC

Event Reaped outstanding RPC

Event Reaped outstanding RPC

Event Reaped outstanding RPC

Event Completed sync() to backups

On the the Backup

Averaged over 3515 same-shape timelines.

0 us --- InfRcTransport Poller picks ups incoming RPC [dispatch thread] | 325 ns --- Invoke service.handleRpc() [worker thread] | 885 ns --- Completed service.handleRpc() [worker thread] | 1 us --- Begin sending reply [dispatch thread] | | | 1.8 us --- Completed sending reply [dispatch thread]

More detailed timeline

Just as we did for the master, we have a more detailed timeline for the events on the backup server:

( Cumulative ) | Delta | [ Std. Dev. ] Event Description ( Min Mean Max ) | Min Mean Max | [ ] ( ) | | [ ] ( 0.0 ns 0.0 ns 0.0 ns ) | 0.0 ns 0.0 ns 0.0 ns | [ 0.0 ns ] Invoking append to buffer [dispatch] ( 34.4 ns 76.2 ns 2.8 us ) | 34.4 ns 76.2 ns 2.8 us | [ 47.6 ns ] Completed append to buffer [dispatch] ( 51.4 ns 102.1 ns 2.9 us ) | 17.0 ns 25.8 ns 56.9 ns | [ 8.0 ns ] Invoking serviceManager->handleRpc() [dispatch] ( 146.5 ns 226.0 ns 5.8 us ) | 95.1 ns 124.0 ns 2.9 us | [ 102.8 ns ] Completed serviceManager->handleRpc() [dispatch] ( 170.0 ns 325.7 ns 68.3 us ) | 23.5 ns 99.7 ns 62.5 us | [ 1.3 us ] Invoking service.handleRpc() [worker] ( 275.0 ns 458.8 ns 68.6 us ) | 105.0 ns 133.1 ns 374.7 ns | [ 29.3 ns ] Invoking service.dispatch() [worker] ( 295.1 ns 484.3 ns 68.8 us ) | 20.1 ns 25.5 ns 212.1 ns | [ 7.0 ns ] Invoking callHandler() [worker] ( 378.3 ns 589.2 ns 73.9 us ) | 83.2 ns 104.9 ns 5.1 us | [ 107.2 ns ] Invoking SegmentInfo->write() [worker] ( 492.5 ns 774.0 ns 79.1 us ) | 114.2 ns 184.8 ns 5.2 us | [ 115.6 ns ] Completed SegmentInfo->write() [worker] ( 523.5 ns 823.0 ns 79.3 us ) | 31.0 ns 49.0 ns 116.9 ns | [ 3.0 ns ] Completed callHandler() [worker] ( 553.5 ns 854.3 ns 79.4 us ) | 30.0 ns 31.2 ns 90.0 ns | [ 1.6 ns ] Completed service.dispatch() [worker] ( 576.7 ns 885.0 ns 79.4 us ) | 23.2 ns 30.7 ns 93.1 ns | [ 7.3 ns ] Completed service.handleRpc() [worker] ( 675.9 ns 1.0 us 83.1 us ) | 99.2 ns 153.7 ns 3.7 us | [ 109.4 ns ] Invoking worker->rpc->sendReply() [dispatch] ( 858.0 ns 1.3 us 86.2 us ) | 182.1 ns 224.8 ns 3.1 us | [ 70.2 ns ] Invoking postSend() [dispatch] ( 1.1 us 1.6 us 90.7 us ) | 290.1 ns 330.6 ns 4.5 us | [ 113.1 ns ] Completed postSend() [dispatch] ( 1.3 us 1.8 us 93.8 us ) | 169.1 ns 216.5 ns 3.1 us | [ 98.8 ns ] Completed worker->rpc->sendReply() [dispatch]

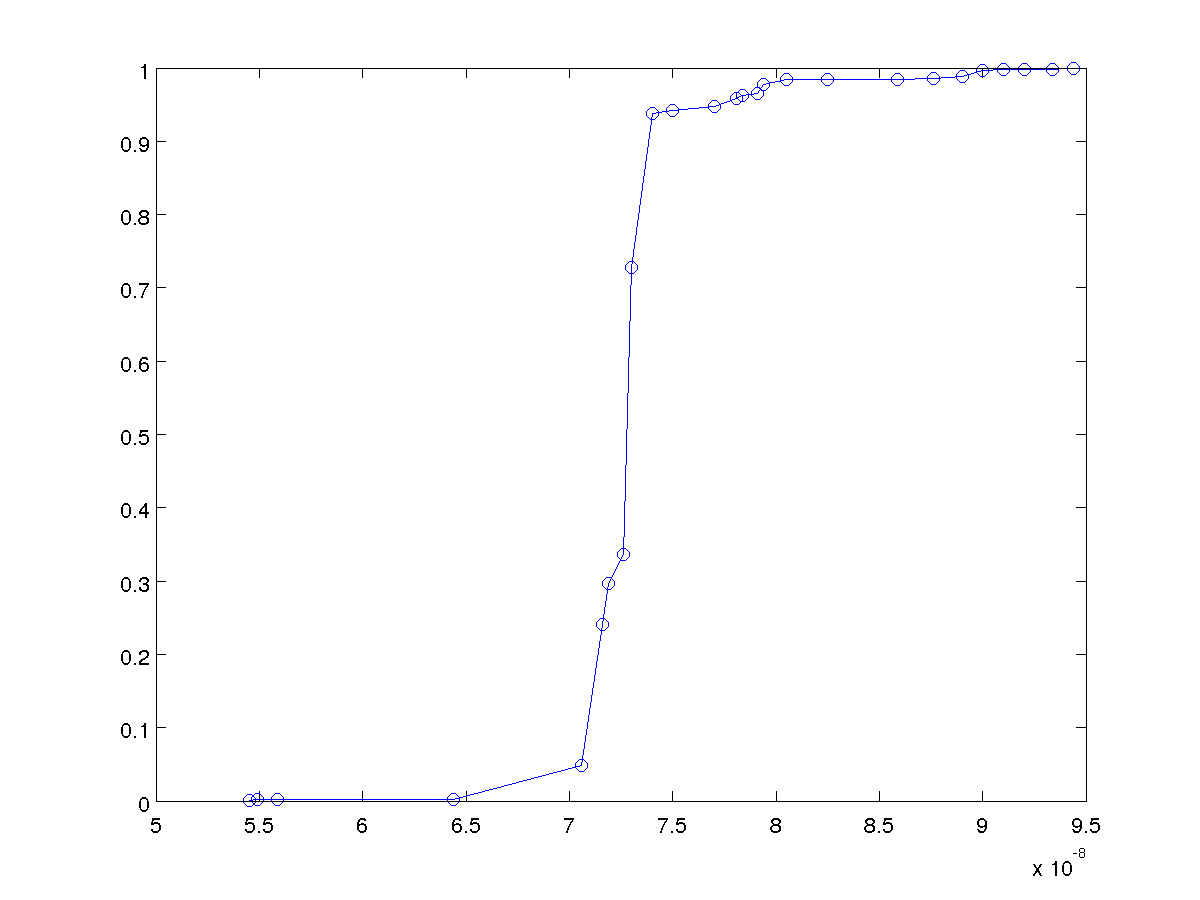

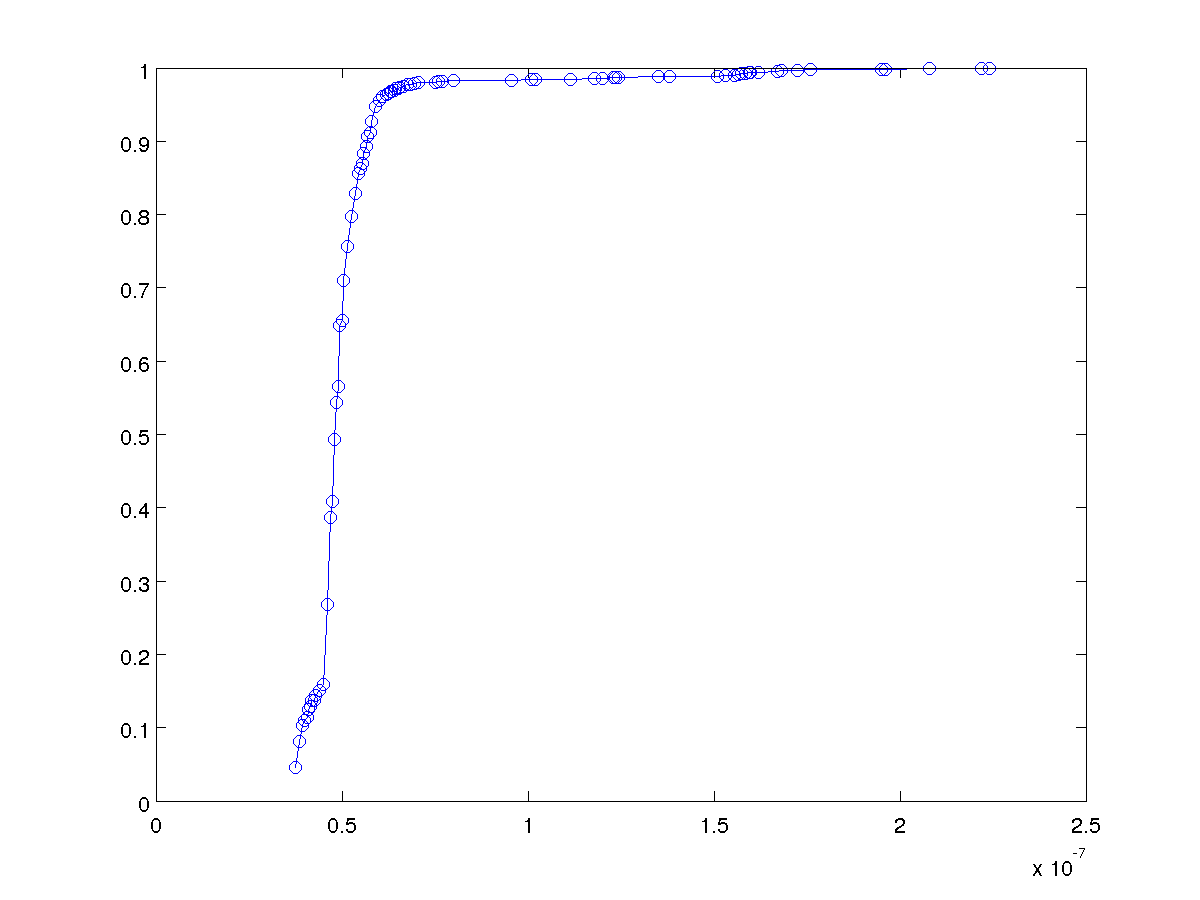

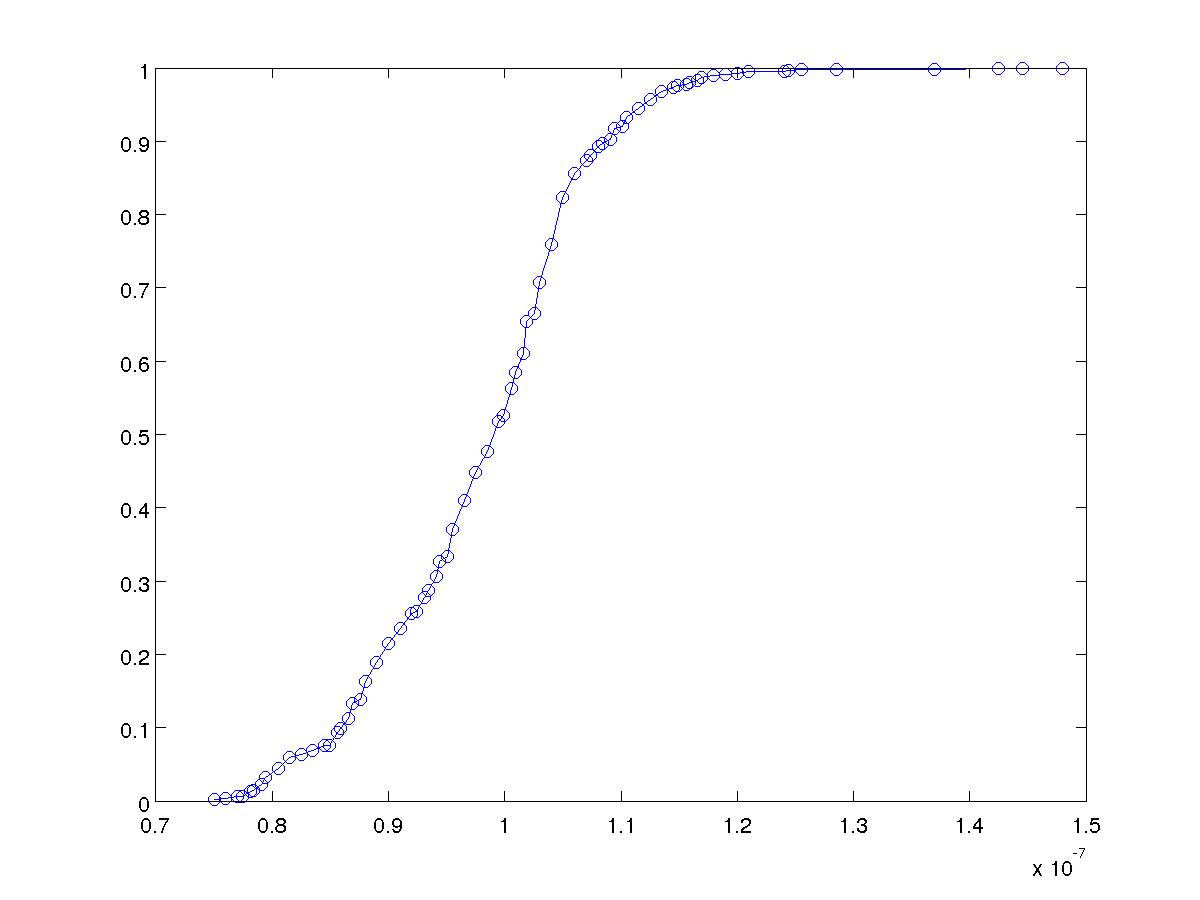

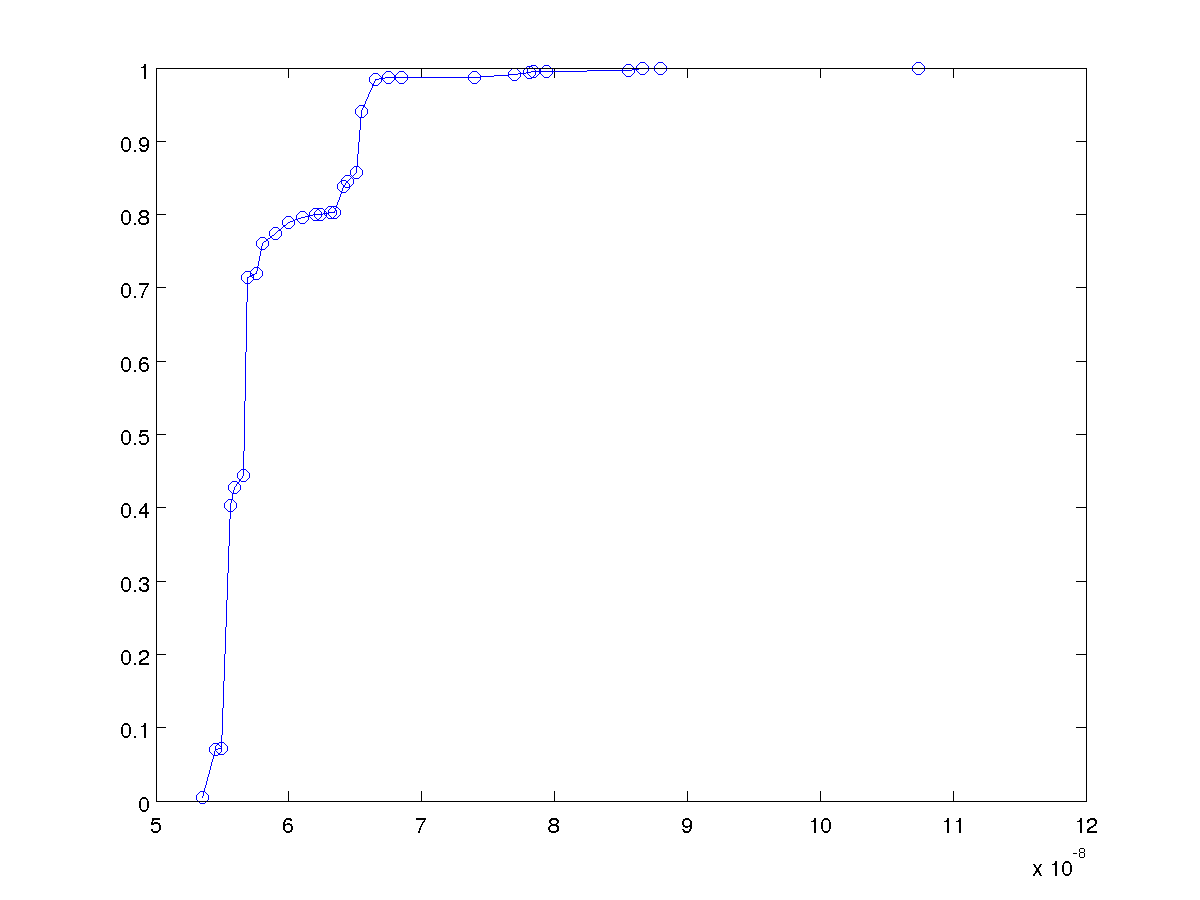

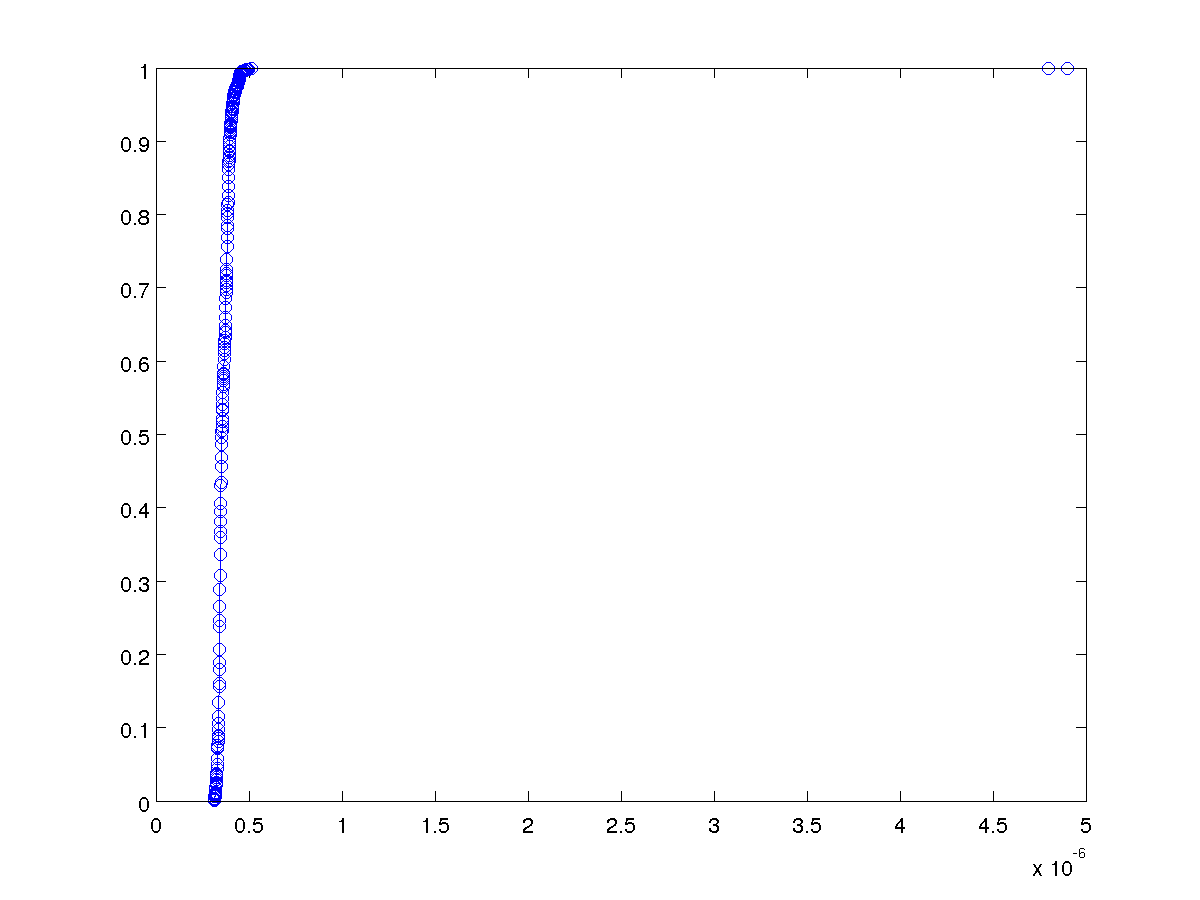

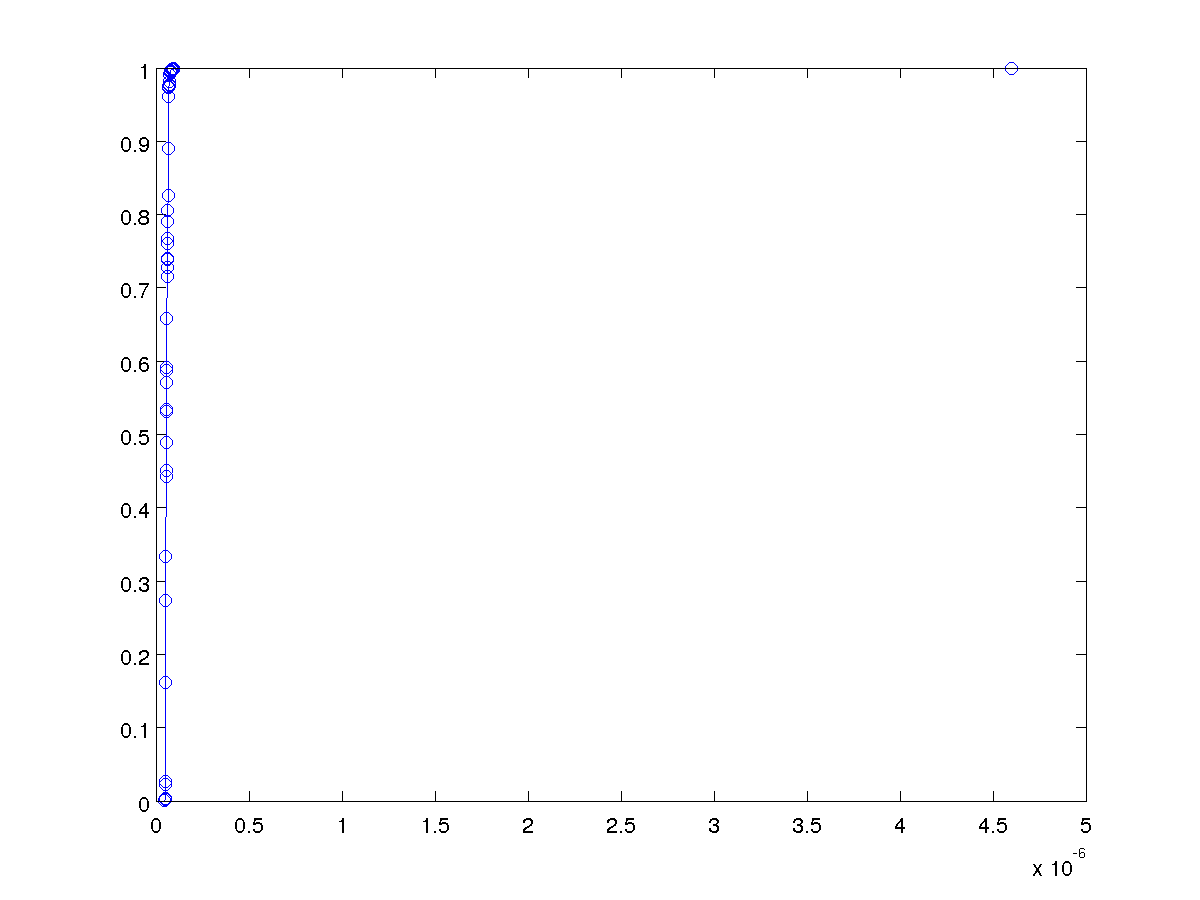

Cumulative distributions for each event

Just as we did for the master, we have the cdf's for the events on the backup server:

Event Invoking appendToBuffer() [dispatch]

Event Completed appendToBuffer() [dispatch]

Event Invoking serviceManager->handleRpc() [dispatch]

Event Completed serviceManager->handleRpc() [dispatch]

Event Invoking service.handleRpc() [worker]

Event Invoking service.dispatch() [worker]

Event Invoking callHandler() [worker]

Event Invoking SegmentInfo->write() [worker]

Event Completed SegmentInfo->write() [worker]

Event Completed callHandler() [worker]

Event Completed service.dispatch() [worker]

Event Completed service.handleRpc() [worker]

Event Invoking worker->rpc->sendReply() [dispatch]

Event Invoking postSend() [dispatch]

Event Completed postSend() [dispatch]

Event Completed worker->rpc->sendReply() [dispatch]

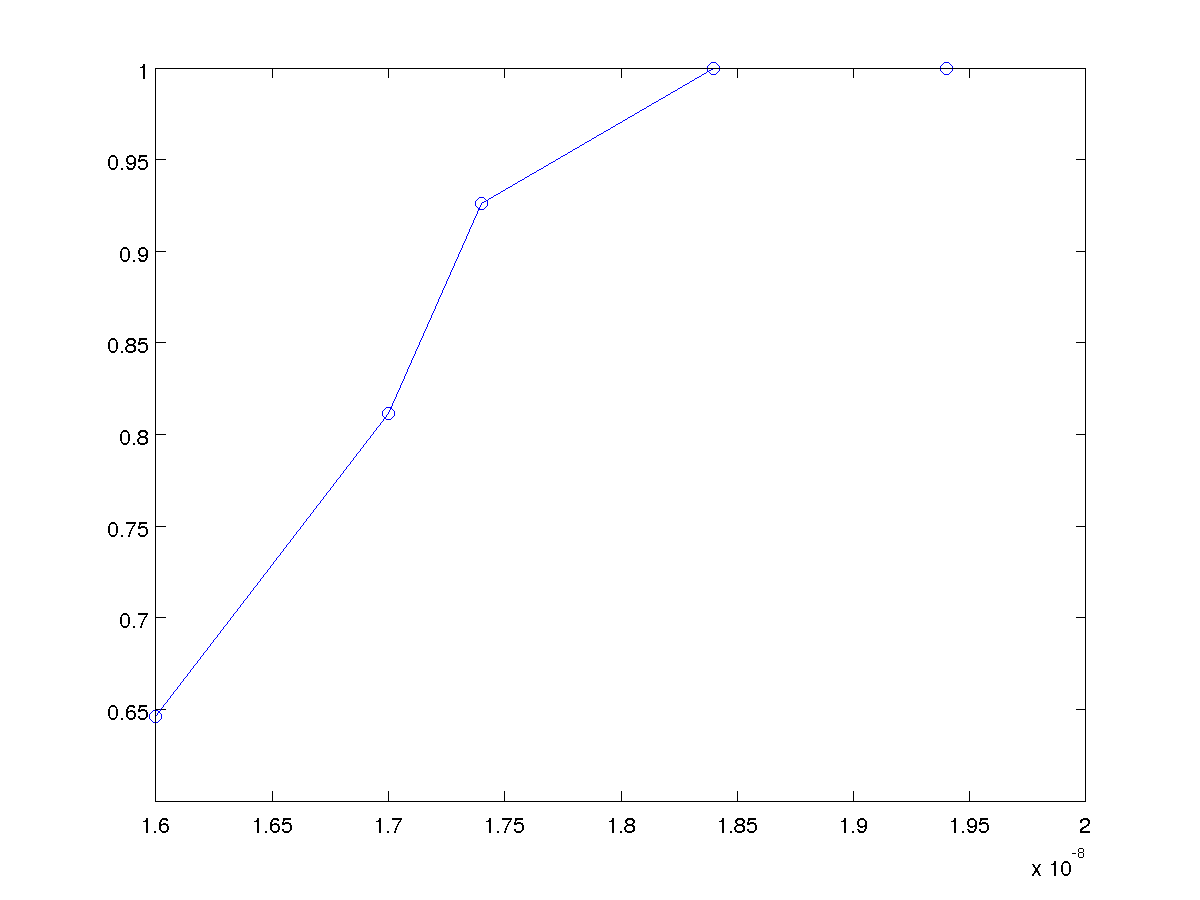

Benchmark IB Send vs. RDMA

Simple program to benchmark 56-byte write.

Averaged over 100 samples for each experiment. (Using IBV_SEND_INLINE in all four experiments).

| Using RDMA | Using Send | |

|---|---|---|

One way (incl. completion on sender) | 1.757 us | 1.801 us |

Round trip (RPC style) | 3.136 us | 3.219 us |

We see that a one-way RDMA easily beats the round-trip IB Send's currently used RAMCloud RPC.