Mellanox Performance Data

Information for 5/24 phone call.

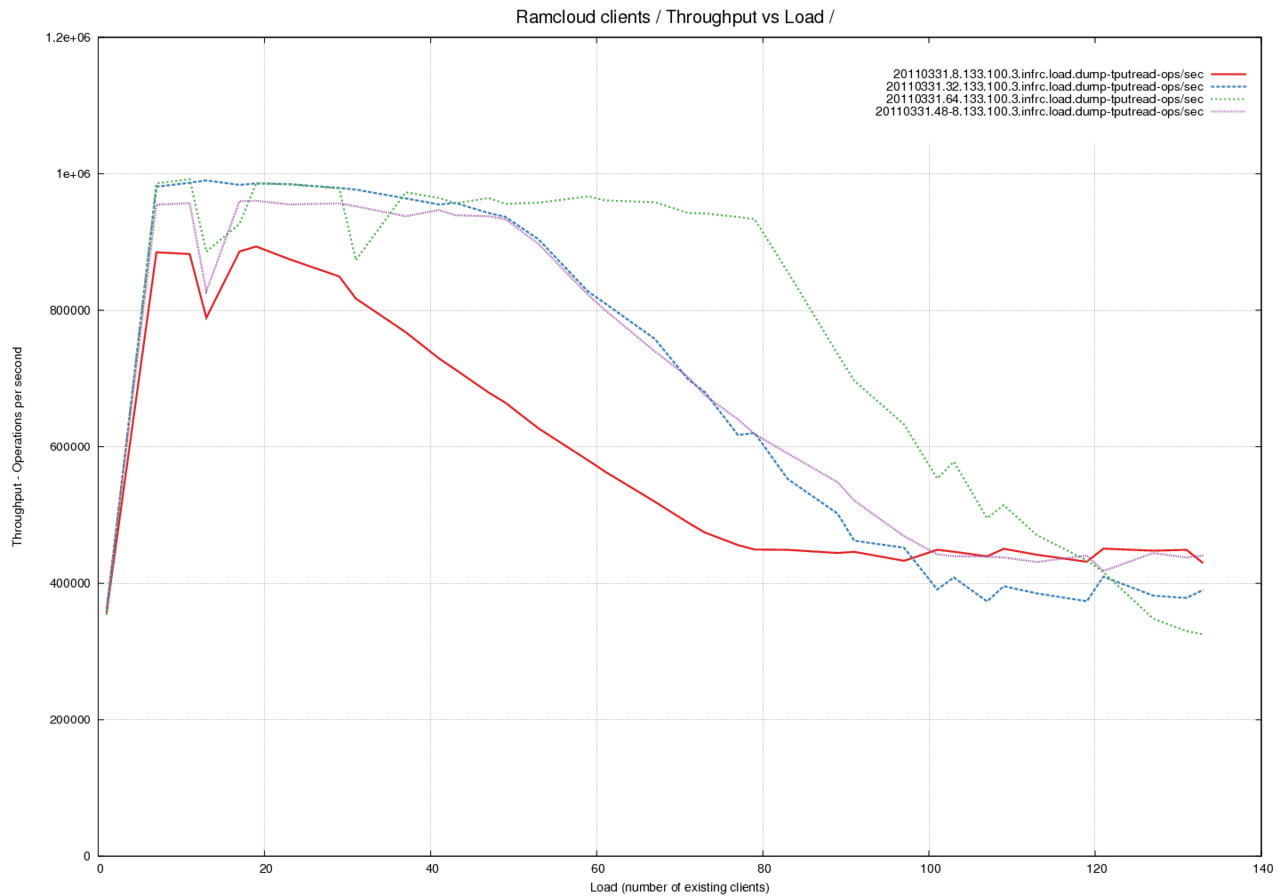

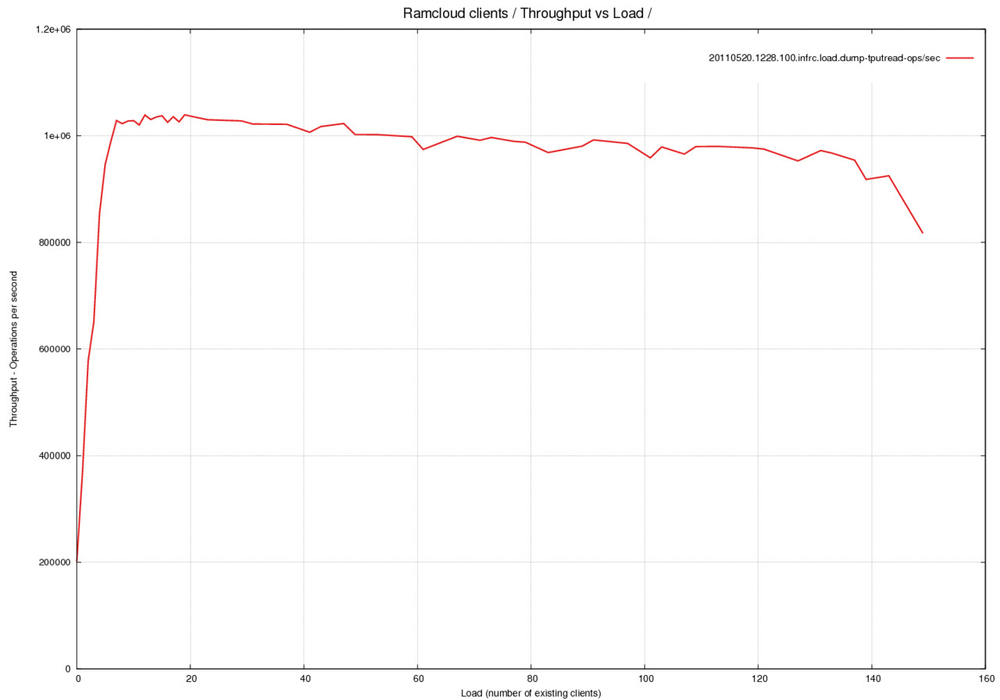

Basic Performance Graph

Throughput as a function of the number of clients, transmit buffers, and receive buffers.

Best case: 1.1us/request

Under load: 2.35us/request

Graph showing performance with 128 Rx / 128 Tx buffers

Code for getTransmitBuffer

getTransmitBuffer()

    /**
     * Return a free transmit buffer, wrapped by its corresponding
     * BufferDescriptor. If there are none, block until one is available.
     */
    template<typename Infiniband>
    typename Infiniband::BufferDescriptor*
    InfRcTransport<Infiniband>::getTransmitBuffer()
    {
        CycleCounter<uint64_t> timeThis2;

        // if we've drained our free tx buffer pool, we must wait.
        while (txBuffers.empty()) {
            ibv_wc retArray[MAX_TX_QUEUE_DEPTH];
            CycleCounter<uint64_t> timeThis;
            int n = infiniband->pollCompletionQueue(commonTxCq,
                                                    MAX_TX_QUEUE_DEPTH,
                                                    retArray);
            uint64_t gtbPollNanos = cyclesToNanoseconds(timeThis.stop());
            serverStats.gtbPollNanos += gtbPollNanos;
            serverStats.gtbPollCount++;
            if (0 >= n) {
                serverStats.gtbPollZeroNCount++;
                serverStats.gtbPollZeroNanos += gtbPollNanos;
            } else {
                serverStats.gtbPollNonZeroNAvg += n;
                serverStats.gtbPollNonZeroNanos += gtbPollNanos;
            }

            for (int i = 0; i < n; i++) {
                BufferDescriptor* bd =
                    reinterpret_cast<BufferDescriptor*>(retArray[i].wr_id);
                txBuffers.push_back(bd);

                if (retArray[i].status != IBV_WC_SUCCESS) {
                    LOG(ERROR, "Transmit failed: %s",
                        infiniband->wcStatusToString(retArray[i].status));
                }
            }
        }

        BufferDescriptor* bd = txBuffers.back();
        txBuffers.pop_back();
        serverStats.infrcGetTxBufferNanos += timeThis2.stop();
        return bd;
    }
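The refill pattern in the method above can be tried without InfiniBand hardware. The following is a minimal sketch, not the transport's actual code: `FakeCompletionQueue`, `Buffer`, `TxBufferPool`, and `toWrId` are hypothetical stand-ins invented for illustration, and the fake queue only mimics the "return up to N completions" behavior of `pollCompletionQueue`. It shows why a call that finds the pool non-empty is cheap, while a call that finds it empty must poll at least once.

```cpp
#include <cassert>
#include <cstdint>
#include <deque>
#include <vector>

// Hypothetical stand-in for one completion entry; only wr_id is modeled.
struct FakeCompletion { uint64_t wr_id; };

// Hypothetical stand-in for the transmit completion queue.
class FakeCompletionQueue {
  public:
    // Simulate the NIC reporting that the send tagged wrId has finished.
    void complete(uint64_t wrId) { pending.push_back(FakeCompletion{wrId}); }

    // Copy up to maxEntries completions into out; return how many were copied.
    int poll(int maxEntries, FakeCompletion* out) {
        int n = 0;
        while (n < maxEntries && !pending.empty()) {
            out[n++] = pending.front();
            pending.pop_front();
        }
        return n;
    }

  private:
    std::deque<FakeCompletion> pending;
};

struct Buffer { int id; };

// Encode a buffer pointer as a work request id, as the original does in reverse.
inline uint64_t toWrId(Buffer* b) {
    return static_cast<uint64_t>(reinterpret_cast<uintptr_t>(b));
}

class TxBufferPool {
  public:
    explicit TxBufferPool(FakeCompletionQueue& queue) : cq(queue) {}

    void add(Buffer* b) { freeList.push_back(b); }

    // Return a free buffer. If the pool is empty, poll until a completion
    // hands one back. With this fake queue, that spins forever unless a
    // completion is already pending.
    Buffer* get() {
        while (freeList.empty()) {
            FakeCompletion done[MAX_POLL];
            int n = cq.poll(MAX_POLL, done);
            assert(n >= 0);
            pollCount++;
            for (int i = 0; i < n; i++) {
                freeList.push_back(reinterpret_cast<Buffer*>(
                    static_cast<uintptr_t>(done[i].wr_id)));
            }
        }
        Buffer* b = freeList.back();
        freeList.pop_back();
        return b;
    }

    uint64_t pollCount = 0;   // number of times get() had to poll

  private:
    static constexpr int MAX_POLL = 16;
    FakeCompletionQueue& cq;
    std::vector<Buffer*> freeList;
};
```

In this sketch, a `get()` on a non-empty pool leaves `pollCount` unchanged, and a `get()` on an empty pool increments it once per poll until a completion arrives.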

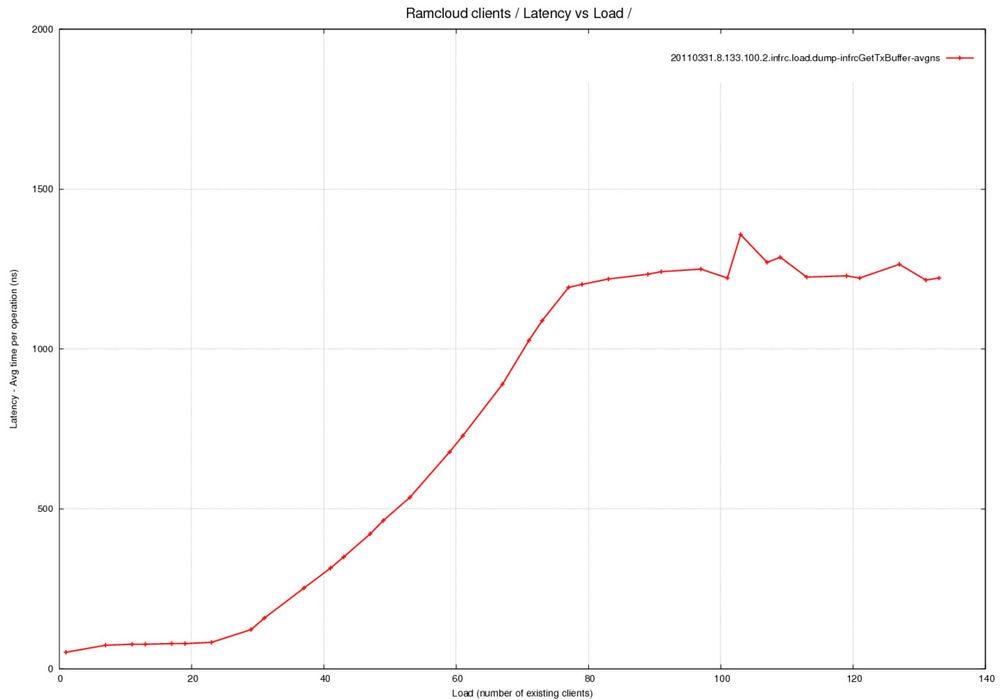

Graph of getTransmitBuffer Running Time

Best case: 80ns

Under load: 1.3us

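The increase in getTransmitBuffer time under load (about 1.22us, from 80ns to 1.3us) accounts for essentially all of the additional 1.25us per request (from 1.1us to 2.35us).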

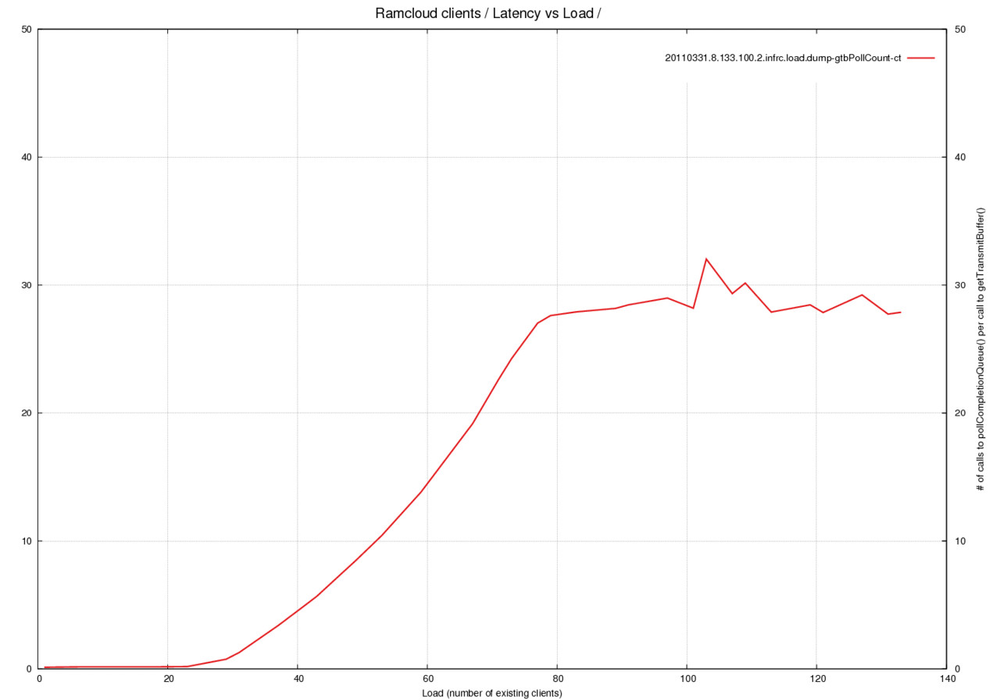

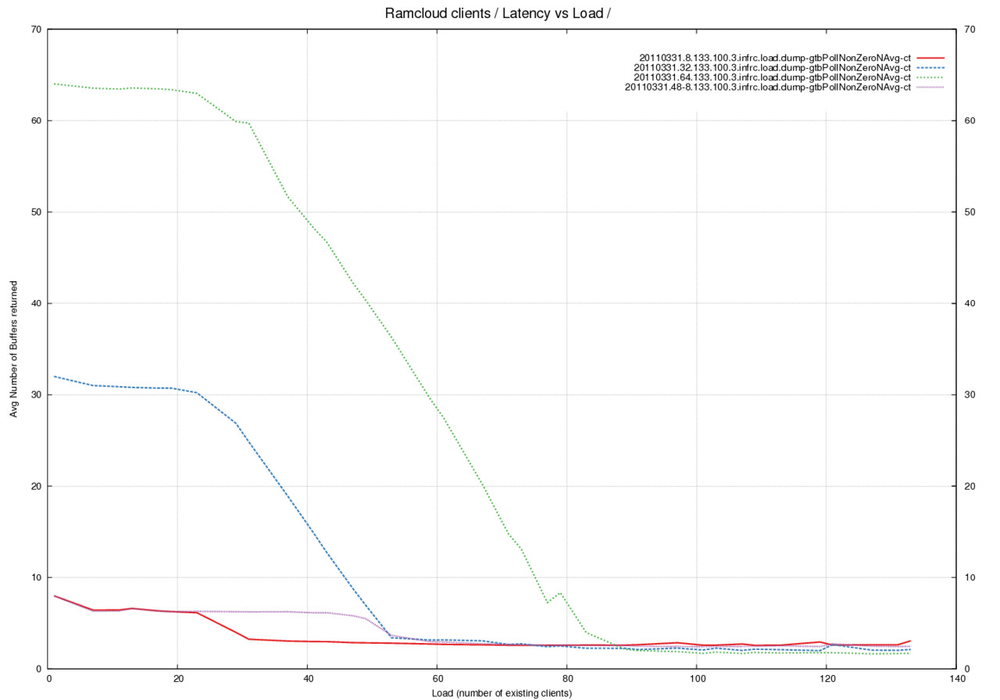

Graph of how many times pollCompletionQueue is called

Graph of the average number of buffers returned per pollCompletionQueue call
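The quantities in these two graphs can be derived from the counters that getTransmitBuffer updates. The sketch below is illustrative only: `GtbPollStats`, `recordPoll`, and the two averaging helpers are hypothetical names, the struct only mirrors the counter names seen in the code, and it assumes that `gtbPollNonZeroNAvg` holds a running sum of `n` over polls that returned at least one completion (which is how the code accumulates it).

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical mirror of the serverStats counters used in getTransmitBuffer().
struct GtbPollStats {
    uint64_t gtbPollCount = 0;        // total pollCompletionQueue calls
    uint64_t gtbPollZeroNCount = 0;   // calls that returned no completions
    uint64_t gtbPollNonZeroNAvg = 0;  // assumed: sum of n over non-empty polls
    uint64_t gtbPollNanos = 0;        // total time spent polling, in ns
};

// Record one poll result the same way the loop in getTransmitBuffer() does.
inline void recordPoll(GtbPollStats& s, int n, uint64_t nanos) {
    s.gtbPollNanos += nanos;
    s.gtbPollCount++;
    if (n <= 0) {
        s.gtbPollZeroNCount++;
    } else {
        s.gtbPollNonZeroNAvg += static_cast<uint64_t>(n);
    }
}

// Average number of buffers returned per poll that returned at least one.
inline double avgBuffersPerNonEmptyPoll(const GtbPollStats& s) {
    assert(s.gtbPollZeroNCount <= s.gtbPollCount);
    uint64_t nonEmpty = s.gtbPollCount - s.gtbPollZeroNCount;
    if (nonEmpty == 0) {
        return 0.0;
    }
    return static_cast<double>(s.gtbPollNonZeroNAvg) /
           static_cast<double>(nonEmpty);
}

// Average time per poll in nanoseconds, over all polls (empty or not).
inline double avgNanosPerPoll(const GtbPollStats& s) {
    if (s.gtbPollCount == 0) {
        return 0.0;
    }
    return static_cast<double>(s.gtbPollNanos) /
           static_cast<double>(s.gtbPollCount);
}
```

Dividing by the number of non-empty polls rather than by all polls keeps empty polls from pulling the buffer average toward zero; whether the plotted average was computed this way is an assumption.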