Machine Evaluations

Purpose

We are evaluating four platforms for our proposed 40-node RAMCloud cluster. All are similar in price (approx. $2000-2500/node for 24-32GB of RAM, a CPU, and a disk). Three machines are from SuperMicro. The fourth is a Dell box with a nearly identical configuration to the Xeon E5620-based SuperMicro.

Machines

All machines are server-class hardware (i.e. support ECC) in 1U units. In the Xeon cases, we have the option of buying twin servers (two independent boards in one case) from SuperMicro. We're looking at the following configurations:

- Xeon E5620 (4 core / 8 thread Westmere at 2.4GHz. Dual socket server. Max. 192GB RAM)

- Xeon X3470 (4 core / 8 thread Nehalem at 2.93GHz. Single socket server. Max. 32GB RAM)

- Opteron 6134 (8 core / 8 thread at 2.3GHz. Single socket server. Max. 128GB RAM)

Misc. Notes:

- The E5620 and AMD 6134 have on-die memory controllers, but they do not have on-die PCIe. Both support 1GB superpages. The AMD chips do not have the CRC32 instruction yet.

- The CRC32 instruction is extremely fast, especially for small inputs (tens to hundreds of bytes). If we count on this, it will rule out the AMD chip.

- The X3470 has both on-die memory and PCIe controllers. However, it is not a Westmere chip, so it doesn't support 1GB superpages. It should have the CRC32 instruction.

- The on-die PCIe controller doesn't appear to help latency very much. See results below.
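For reference, the CRC32 instruction discussed above computes CRC-32C (the Castagnoli polynomial). A chip without the instruction has to fall back to a software routine. The following is a minimal bitwise sketch of that checksum, not RAMCloud's actual implementation; the function name `crc32c` is chosen here for illustration, and a real fallback would use a table-driven variant for speed.

```python
def crc32c(data: bytes, crc: int = 0) -> int:
    """Bitwise CRC-32C (Castagnoli), using the reflected polynomial 0x82F63B78.

    Pass the result of a previous call as `crc` to checksum data in pieces.
    """
    crc ^= 0xFFFFFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            # Shift out one bit; fold in the polynomial if that bit was set.
            crc = (crc >> 1) ^ (0x82F63B78 if crc & 1 else 0)
    return crc ^ 0xFFFFFFFF


if __name__ == "__main__":
    # 0xe3069283 is the standard CRC-32C check value for this input.
    print(hex(crc32c(b"123456789")))
```

The inner loop runs eight iterations per input byte, which gives a sense of why a single hardware instruction is so much faster on short inputs.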

CPUs

| | Xeon E5620 | Opteron 6134 | Xeon X3470 |
|---|---|---|---|
| Clock | 2.4GHz | 2.3GHz | 2.93GHz |
| Max Turbo | 2.66GHz | N/A | 3.6GHz |
| # Cores | 4 | 8 | 4 |
| # Threads | 8 | 8 | 8 |
| L1 Cache | 64KB / core | 128KB / core | 64KB / core |
| L2 Cache | 256KB / core | 512KB / core | 256KB / core |
| L3 Cache | 12MB shared | 12MB shared | 8MB shared |
| On-die Memory Controller | Yes | Yes | Yes |
| On-die PCIe Controller | No | No | Yes |
| Max. CPU Sockets / Motherboard | 2 | ? (>= 4) | 1 |
| Max. Memory Channels | 3 | 4 | 2 |
| Max. Memory Clock | 1066MHz | 1333MHz | 1333MHz (NB: drops to 800MHz when >= 24GB RAM installed) |
| Max. Memory Supported | 288GB | ? (>=128GB) | 32GB |
| 1GB Superpages | Yes | Yes | No |
| CRC32 Instruction | Yes | No | Yes |

Systems

The following is a list of specs for the systems we're looking at. The SuperMicro ones have lots of sister configurations with slight differences that may be interesting. See the notes row.

| | Dell R410 | SuperMicro 6016T-NTF (Xeon E5620) | SuperMicro 1012G-MTF (Opteron 6134) | SuperMicro 5016I-M6F |
|---|---|---|---|---|
| Motherboard | ? | X8DTU-F | H8SGL-F | X8SI6-F |
| Chipset | Intel 5520 (Tylersburg) + ICH10R | Intel 5520 (Tylersburg) + ICH10R | AMD SR5650 + SP5100 | Intel 3420 (Ibex Peak) + 3420 PCH |
| # CPU Sockets | 2 | 2 | 1 | 1 |
| # DIMM Slots | 8 (4 / socket) | 12 (6 / socket) | 8 | 6 |
| Max. Memory | 128GB | 192GB | 128GB | 32GB (board supports 48, CPU limits to 32) |
| # SATA Ports | 6 (?) | 6 | 6 | 6 |
| # Hard Drives | 4 x 3.5" | 4 x 3.5" | 4 x 3.5" | 4 x 3.5" |
| # PCIe slots (electrical width) | 1 (x16) | 2 (x8) | 1 (x16) | 1 (x8) |
| Notes: | | | | |

Evaluation Criteria

RAMCloud is concerned mostly with scale and latency. Since we have a limited budget and limited space, we cannot scale to a huge number of machines or load them with expensive high-density DIMMs (the sweet spot looks like 4 or 8GB DIMMs). As such, most of our evaluation focuses on latency: InfiniBand network latency, RAMCloud micro-benchmarks, and simple end-to-end RAMCloud benchmarks. We will also consider miscellaneous features like the number of PCIe slots, DIMM slots, CPU cores, etc.

RAMCloud Numbers:

| Benchmark | Xeon E5620 | Opteron 6134 | Xeon X3470 | Notes |
|---|---|---|---|---|
| Rabinpoly (min, max) | 206MB/s, 381MB/s | 57MB/s, 438MB/s | | |
| VMAC (min, max) | 60MB/s, 2630MB/s | 45MB/s, 2294MB/s | | |
| crc32c (7 / 31 / 63 / 127 / 1k) MB/s | 351 / 1343 / 2310 / 4037 / 6643 | | 834 / 1137 / 5461 / 8651 / 10500 | X3470's higher turbo frequency is 3.6GHz vs. E5620's 2.66GHz (~35% higher). |
| HashTableBench (-h 128) | 84ns lookup, 98ns replace | 147ns lookup, 133ns replace | 75ns lookup, 88ns replace | |
| 1000-byte Bench (infiniband, localhost, -m 128 server) | 7.6usec +/- 0.1usec RTT (272.76ns on server) | 7.1usec +/- 0.3usec RTT (338.81ns on server) | 7.4usec +/- 0.1 RTT (~230ns on server) | |
| 100-byte Bench (infiniband, localhost, -m 128 server) | 6.6usec RTT (283.43ns server) | 6.2usec (348ns on server) | 6.4usec RTT (~235ns on server) | |
| 100-byte Bench (same as above, but inline send) | 4.7usec RTT (+/- 0.1) | | 4.5usec RTT (+/- 0.2) | |
| RecoverSegmentBenchmark (64-byte objects, 20 segments) | 392ms | 706ms | 322ms | AMD has no crc32 instruction :( |

Infiniband Numbers (all are one-way, i.e. not RTT values, in microseconds):

| Benchmark | Xeon E5620 | Opteron 6134 | Xeon X3470 | Notes |
|---|---|---|---|---|
| ib_write_lat -s 128 localhost | 1.40 | 1.16 | 1.31 | |
| ib_write_lat -s 1024 localhost | 3.33 | 2.92 | 3.29 | |
| ib_send_lat -s 128 localhost | 1.59 | 1.42 | 1.44 | Sends are inlined with the WQE up to 400 bytes |
| ib_send_lat -s 1024 localhost | 3.44 | 2.99 | 3.35 | |
| ib_send_lat -s 128 -I 0 | 2.38 | 2.04 | 2.27 | -I 0 disables inlining data in the WQE |
| ib_send_lat -s 128 between E5620 and AMD 6134 | 1.58 | 1.58 | N/A | With 2 AMD machines, est. is ~3.02us RTT |
| ib_send_lat -s 128 between E5620 and X3470 | 1.61 | N/A | 1.61 | |
| ib_send_lat -s 128 between two E5620 machines | 1.65 | N/A | N/A | ~3.3us RTT |
| ib_send_lat -s 128 -I 0 between E5620 and AMD 6134 | 2.30 | 2.30 | N/A | With 2 AMD machines, est. is ~4.24us RTT |
| ib_send_lat -s 128 -I 0 between E5620 and X3470 | 2.45 | N/A | 2.45 | |
| ib_send_lat -s 128 -I 0 between two E5620 machines | 2.48 | N/A | N/A | ~4.96us RTT |
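The notes do not say how the "2 AMD machines" RTT estimates were derived. One reading that reproduces both figures is to split each one-way latency evenly between the two endpoints, take the E5620's share from the E5620-to-E5620 measurement, and attribute the remainder of the mixed measurement to the AMD side. The sketch below works through that arithmetic; the function name and the even-split assumption are ours, not something stated in the measurements.

```python
def estimate_same_pair_rtt(mixed_one_way_us: float, e5620_pair_one_way_us: float) -> float:
    """Estimate RTT between two identical 'other' machines.

    mixed_one_way_us:      one-way latency, E5620 <-> other machine
    e5620_pair_one_way_us: one-way latency, E5620 <-> E5620
    Assumes each endpoint contributes a fixed, additive share of the latency.
    """
    e5620_share = e5620_pair_one_way_us / 2          # one E5620 endpoint
    other_share = mixed_one_way_us - e5620_share     # one 'other' endpoint
    one_way = 2 * other_share                        # two 'other' endpoints
    return 2 * one_way                               # round trip


if __name__ == "__main__":
    print(round(estimate_same_pair_rtt(1.58, 1.65), 2))  # inline sends
    print(round(estimate_same_pair_rtt(2.30, 2.48), 2))  # -I 0 (no inlining)
```

Under this assumption the inline case comes out at about 3.02us and the non-inline case at about 4.24us, matching the estimates in the Notes column.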

LM Bench Numbers

| Benchmark | Xeon E5620 | Opteron 6134 | Xeon X3470 | Notes |
|---|---|---|---|---|
| bw_mem -P 1 -W 2 268435456 (read / write / copy) | 7800 | 6628 | 8441 | These numbers are with only one dimm installed, hence only |
| bw_mem -P 4 -W 2 134217728 (read / write / copy) | | 9820 | 9630 | AMD: taskset -c 0-3 (do not use remote memory controller) |
| lat_mem_rd -N 1 -P 1 256M 512 (l1 / l2 / l3 / ram) | 1.7 | 1.3 | 1.2 | AMD memory affinity bit us here previously. Cores 0-3 are 56ns to RAM, whereas 4-7 are 108ns! |

More realistic LM Bench Memory Bandwidth Numbers

The numbers above were taken on sample machines that had only one DIMM installed. This seriously limits throughput, as multiple memory channels cannot be used. What follows is a fairer comparison of loaded systems.

| Benchmark | Xeon E5620 (rc02, 3 channels, 3x4GB) | Opteron 6134 (4 channels, 4x4GB @ 1333MHz) | Xeon X3470 (2 channels, 2x1GB @ 1333MHz) | Xeon X3470 (2 channels, 4x4GB @ 1333MHz) | Xeon X3470 (2 channels, 6x4GB @ 800MHz) | Notes |
|---|---|---|---|---|---|---|
| bw_mem -P 1 -W 2 268435456 (read / write / copy) | 11995 | 6660 | 11292 | 12628 | 11293 | The Lynnfield 800MHz case is important, as |
| bw_mem -P 4 -W 2 134217728 (read / write / copy) | 19000 | 22000 | 17900 | 17643 | 11080 | AMD case used taskset -c 0,1,6,7 to exercise |
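To put the measured figures in context, it helps to compare them against the theoretical peak of each memory configuration. The sketch below assumes standard 64-bit (8-byte) DDR3 channels and treats the "MHz" memory clocks quoted above as mega-transfers per second; the helper name and the choice of the `-P 4` rows as the measured values are ours, for illustration only.

```python
def peak_bandwidth_mb_s(channels: int, mega_transfers_per_s: int,
                        bytes_per_transfer: int = 8) -> int:
    """Theoretical peak bandwidth in MB/s for a DDR3 memory configuration."""
    return channels * mega_transfers_per_s * bytes_per_transfer


# (label, channels, memory rate, measured bw_mem -P 4 figure from the table above)
CONFIGS = [
    ("Xeon E5620, 3 ch @ 1066", 3, 1066, 19000),
    ("Opteron 6134, 4 ch @ 1333", 4, 1333, 22000),
    ("Xeon X3470, 2 ch @ 1333 (4x4GB)", 2, 1333, 17643),
    ("Xeon X3470, 2 ch @ 800 (6x4GB)", 2, 800, 11080),
]

if __name__ == "__main__":
    for label, channels, rate, measured in CONFIGS:
        peak = peak_bandwidth_mb_s(channels, rate)
        print(f"{label}: peak {peak} MB/s, measured {measured} "
              f"({measured / peak:.0%} of peak)")
```

On these assumptions the X3470 at 800MHz has a peak of 12800 MB/s, so its measured 11080 is already close to the ceiling for that configuration.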

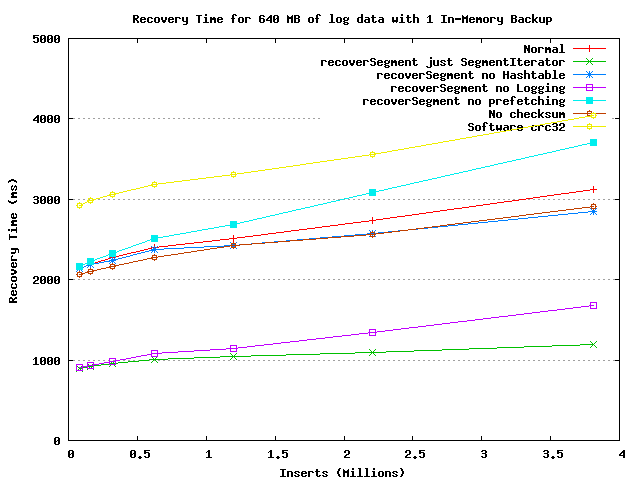

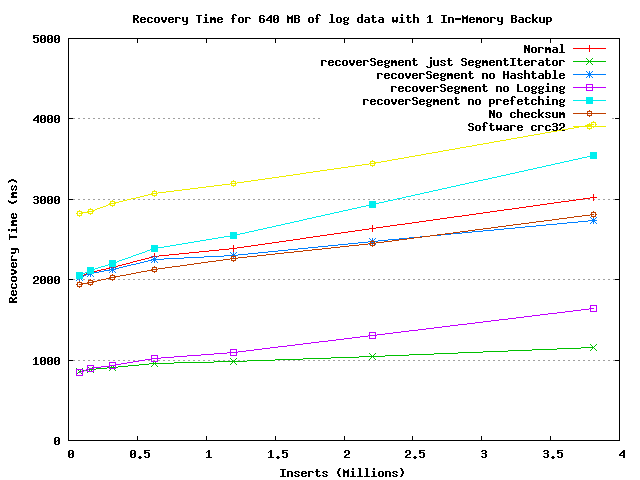

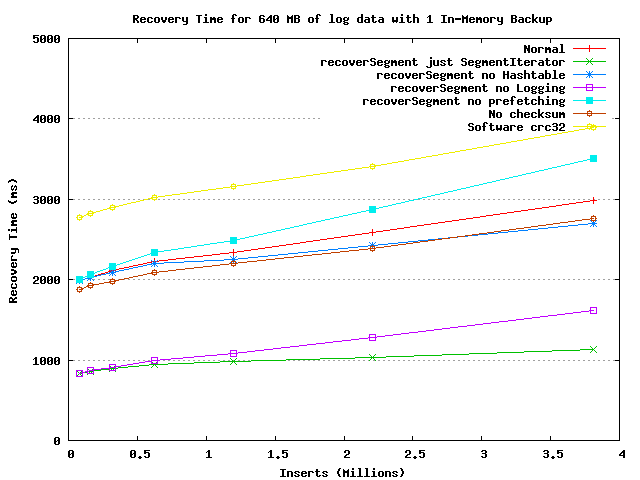

E5620 Recovery with 2 memory channels at 1066 and 800MHz (rc05, rc06)

Unfortunately, we cannot evaluate the X3470's limited memory bandwidth with large amounts of memory (we don't have enough of the right DIMMs, and the BIOS won't let us clock the frequency down manually). So, to get an idea of how important memory bandwidth will be to RAMCloud recovery, we'll run recoveries on our development E5620 machines at 1066MHz (default) and 800MHz memory clocks to see if it makes much of a difference. Note that we're using 2 of the 3 memory channels, as the X3470 only has two.

| | | |
|---|---|---|
| 2 memory channels, 800MHz clock | 2 memory channels, 1066MHz clock | 3 memory channels, 1066MHz clock |

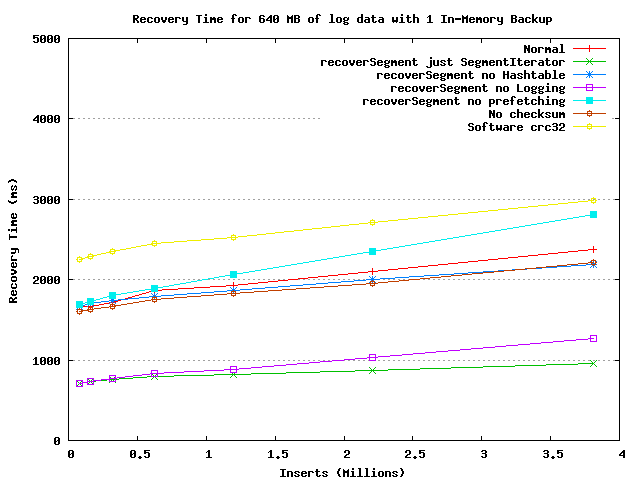

Lynnfield X3470 as the recovery master, with an E5620 as the failed master, coordinator, and backup.

The Lynnfield was running at 1333MHz memory clock. Our original result was kind of disappointing, but luckily Ryan recalled that we were converting ticks to nsec using the wrong divisor (2.4, not 2.93)! This one looks like what we'd expect, beating the E5620 by ~600ms (nearly 20% less time for a ~20% higher clock).

Of course, we'll pay a penalty for lowering the memory clock to 800MHz, but the prior E5620 benchmarks suggest it will not be terribly important (~100ms).
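The divisor mistake mentioned above is easy to reproduce. The sketch below assumes the benchmark counts CPU ticks at the nominal clock rate and converts them to nanoseconds by dividing by the clock in GHz; `ticks_to_ns` is a hypothetical helper, not the function used in the RAMCloud code.

```python
def ticks_to_ns(ticks: float, clock_ghz: float) -> float:
    """Convert a tick count to nanoseconds, given the clock rate in GHz."""
    return ticks / clock_ghz


if __name__ == "__main__":
    ticks = 2_930_000_000  # one second of ticks on a 2.93GHz X3470

    correct_ns = ticks_to_ns(ticks, 2.93)  # X3470's own clock
    wrong_ns = ticks_to_ns(ticks, 2.4)     # E5620's clock, used by mistake

    print(f"correct: {correct_ns / 1e6:.0f} ms")
    print(f"wrong:   {wrong_ns / 1e6:.0f} ms")
    print(f"overstated by a factor of {wrong_ns / correct_ns:.2f}")
```

Dividing by 2.4 instead of 2.93 inflates every X3470 timing by about 22%, which would be enough to hide a clock-speed advantage of roughly that size.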